This article explores how integrating computer vision into robotics is changing the way machines perceive and respond to their surroundings across a range of industries.

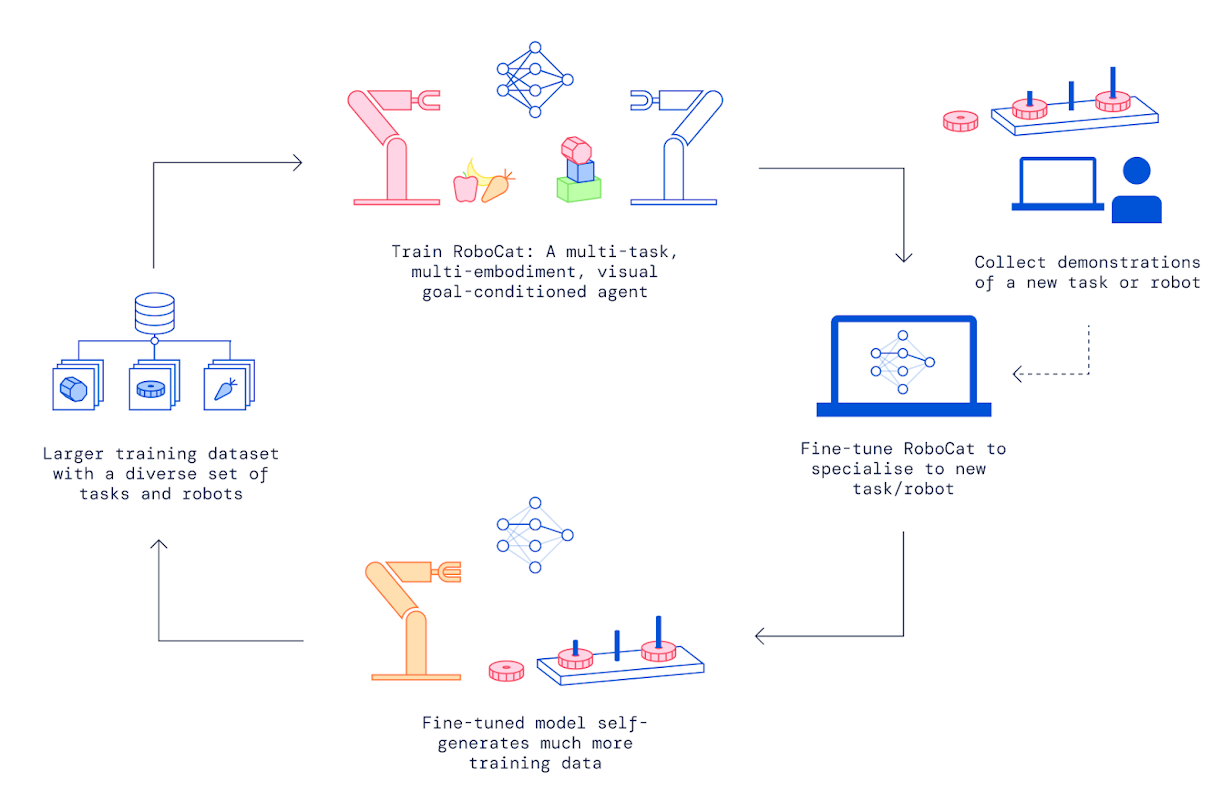

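AI in robotics is advancing at a remarkable pace, and robots are being built to perform more complex tasks with less human intervention. For example, DeepMind's RoboCat is an AI-driven robot that can learn a new task from as few as 100 demonstrations. RoboCat uses these inputs to generate more training data and improve its skills, raising its success rate from 36% to 74% after subsequent training. Innovations like RoboCat mark a significant step toward robots that can handle a wide range of tasks with minimal human input.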

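AI-powered robots are already making an impact in a variety of practical applications, such as Amazon's use of robots to streamline warehouse operations and AI robots that optimize farming practices in agriculture. Previously, we explored the overall role of AI in robotics and looked at how it is reshaping industries from logistics to healthcare. In this article, we take a deeper look at why computer vision is so important in robotics and how it helps robots perceive and interpret their surroundings.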

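A robot's vision system acts as its eyes, helping it perceive and understand its environment. These systems typically use cameras and sensors to capture visual data, and computer vision algorithms then process the captured video and images. Through object detection, depth perception, and pattern recognition, robots can identify objects, assess their surroundings, and make decisions in real time.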

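Vision AI, or machine vision, is essential for robots to operate autonomously in dynamic, unstructured environments. If a robot needs to pick up an object, it must be able to use computer vision to locate it. That is a very simple example. The same basic computer vision foundation is needed to build applications in which robots inspect products in manufacturing or assist in surgery with high precision. By providing the sensory input needed for real-time decision-making, vision systems let robots interact more naturally with their surroundings and expand the range of tasks they can handle across industries.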
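
To make the pick-up example concrete, here is a minimal sketch of how a detected object could be located in 3D. It assumes a calibrated pinhole camera, a bounding box from some object detector, and a depth reading at the box center. The names `CameraIntrinsics`, `box_center`, and `pixel_to_camera_point`, as well as the numeric values, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraIntrinsics:
    """Pinhole camera parameters in pixels (example values are assumptions)."""
    fx: float  # focal length along the image x axis
    fy: float  # focal length along the image y axis
    cx: float  # principal point, x coordinate
    cy: float  # principal point, y coordinate


def box_center(box):
    """Return the pixel center (u, v) of a box given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    return (x_min + x_max) / 2.0, (y_min + y_max) / 2.0


def pixel_to_camera_point(u, v, depth_m, intrinsics):
    """Back-project pixel (u, v) with a known depth into camera coordinates (meters)."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    x = (u - intrinsics.cx) * depth_m / intrinsics.fx
    y = (v - intrinsics.cy) * depth_m / intrinsics.fy
    return x, y, depth_m


if __name__ == "__main__":
    cam = CameraIntrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    u, v = box_center((400, 200, 480, 280))  # hypothetical detector output
    print(pixel_to_camera_point(u, v, 0.5, cam))
```

The result is expressed in the camera's own coordinate frame. A real robot would still need a camera-to-arm calibration to turn that point into a grasp target.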

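The use of computer vision in robotics has recently been growing worldwide. In fact, the global robotic vision market is projected to reach $4 billion by 2028. Let's look at several examples that show how Vision AI is being applied in real robotic applications to increase efficiency and solve complex problems.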

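Underwater inspections are essential for keeping structures such as pipelines, offshore drilling rigs, and subsea cables in good condition. These inspections help confirm that everything is safe and functioning properly, preventing costly repairs and environmental problems. However, inspecting underwater environments can be difficult because of poor visibility and hard-to-reach locations.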

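Robots equipped with computer vision can capture clear, high-quality visual data and either analyze it on the spot or use it to build detailed 3D models of the inspected area. Combining this technology with human expertise makes inspections safer and more efficient and yields better insights for maintenance and long-term planning.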

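For example, NMS, a leading commercial diving company, used Blue Atlas Robotics' Sentinus remotely operated vehicle (ROV) to inspect a difficult underwater pipe with a murky entry point. Equipped with computer vision, the Sentinus ROV lit its surroundings with 14 lights and captured high-resolution images from multiple angles. These images were used to create an accurate 3D model of the pipe's interior, which helped NMS thoroughly assess the pipe's condition and make well-informed maintenance and risk-management decisions.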

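In the construction industry, maintaining consistent quality while coping with labor shortages is difficult. Automating construction with industrial robots offers a way to streamline the building process, reduce the need for manual labor, and ensure accurate, high-quality work. Computer vision can be built into this automation by enabling robots to monitor and inspect work in real time. Specifically, computer vision systems help robots detect material misalignments and defects and double-check that everything is positioned correctly and meets quality standards.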

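A good example is the partnership between ABB Robotics and the UK startup AUAR. Together, the two companies use robotic microfactories equipped with Vision AI to build affordable, sustainable homes from sheet timber. Computer vision lets the robots cut and assemble materials accurately. The automated process helps address labor shortages and simplifies the supply chain by focusing on a single material. These microfactories can also scale to meet local needs, making construction more efficient and adaptable while supporting nearby jobs.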
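
The misalignment check that construction robots rely on can be sketched as a simple tolerance comparison. The example below is illustrative only and does not describe ABB's or AUAR's actual pipeline. It assumes a vision system has already measured each part's position in millimeters; the function names, part names, and tolerance are hypothetical.

```python
import math


def misalignment_mm(planned_xy, measured_xy):
    """Euclidean distance between the planned and measured positions, in millimeters."""
    return math.dist(planned_xy, measured_xy)


def find_misaligned(parts, tolerance_mm):
    """Return the sorted names of parts whose deviation exceeds the tolerance.

    `parts` maps a part name to a (planned_xy, measured_xy) pair.
    """
    return sorted(
        name
        for name, (planned, measured) in parts.items()
        if misalignment_mm(planned, measured) > tolerance_mm
    )


if __name__ == "__main__":
    # Hypothetical measurements from a vision system.
    parts = {
        "panel_a": ((0.0, 0.0), (0.5, 0.5)),
        "panel_b": ((100.0, 0.0), (103.0, 4.0)),
    }
    print(find_misaligned(parts, tolerance_mm=2.0))
```

In practice the tolerance would come from the build specification, and the measured positions would carry their own uncertainty.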

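EV charging is another interesting use case for Vision AI in robotics. Using 3D vision and AI, robots can now automatically locate and connect to an EV charging port, even in challenging environments such as outdoor parking lots. Vision AI works by capturing high-resolution 3D images of the vehicle and its surroundings so that the robot can pinpoint the location of the charging port. It can then calculate the exact position and orientation needed to connect the charger. Vision-enabled AI not only speeds up the charging process but also makes it more reliable and reduces the need for human intervention.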

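One example is the work Mech-Mind carried out with a major energy company. They developed a 3D vision-guided robot that can accurately locate and connect to an EV's charging port, even under difficult lighting conditions. Automating EV charging makes charging more efficient in commercial spaces such as office buildings and malls.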
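
The position-and-orientation step described above for EV charging can be illustrated with a small geometric sketch. It is not Mech-Mind's method. It assumes the 3D camera has already returned three non-collinear points on the flat face of the charging port, expressed in the camera frame with the camera at the origin. All function names and values are hypothetical.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def port_pose(p0, p1, p2):
    """Return (centroid, unit normal) of the plane through three 3D points.

    The normal is flipped if necessary so that it points back toward the
    camera, which is assumed to sit at the origin.
    """
    centroid = tuple(sum(c) / 3.0 for c in zip(p0, p1, p2))
    normal = _cross(_sub(p1, p0), _sub(p2, p0))
    length = math.sqrt(_dot(normal, normal))
    if length < 1e-12:
        raise ValueError("points are collinear; the plane is undefined")
    normal = tuple(c / length for c in normal)
    if _dot(normal, centroid) > 0:  # pointing away from the camera
        normal = tuple(-c for c in normal)
    return centroid, normal


def approach_point(centroid, normal, standoff_m):
    """Point located standoff_m in front of the port, along its normal."""
    return tuple(c + standoff_m * n for c, n in zip(centroid, normal))


if __name__ == "__main__":
    center, normal = port_pose((0.0, 0.0, 1.0), (0.1, 0.0, 1.0), (0.0, 0.1, 1.0))
    print(center, normal, approach_point(center, normal, 0.2))
```

A production system would typically fit the plane to many points to average out sensor noise. Three points are used here only to keep the geometry easy to follow.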

Computer vision brings several advantages to robotics, helping machines perform tasks with greater autonomy, precision, and adaptability. Here are some of the unique benefits of Vision AI in robotics.

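While Vision AI brings many benefits to robotics, implementing computer vision in robots also comes with challenges. These challenges can affect how well and how reliably robots perform in different environments, so it is important to keep them in mind when planning the development and deployment of robotic systems. Here are the main challenges of using computer vision in robotics.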

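Vision AI is changing how robots interact with their environment by giving them a level of understanding and precision that was once unimaginable. Computer vision is already having a major impact in fields such as manufacturing and healthcare, where robots are handling increasingly complex tasks. As AI development continues and computer vision systems improve, the possibilities for what robots can do keep expanding. Progress in robotics is not only about advanced technology; it is about building robots that can work alongside us. As robots become more capable, they will play an even larger role in our daily lives, opening up new opportunities and making our world more efficient and connected.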

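Join our community and explore our GitHub repository to learn about various Vision AI use cases. You can also find more details on computer vision applications in autonomous driving and manufacturing on our solutions pages.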