Join us as we dive into Meta AI's Segment Anything Model 2 (SAM 2) and understand what real-time applications it can be used for across various industries.

On July 29th, 2024, Meta AI released the second version of their Segment Anything Model, SAM 2. The new model can pinpoint which pixels belong to a target object in both images and videos! The best part is that the model is able to consistently follow an object across all the frames of a video in real time. SAM 2 opens up exciting possibilities for video editing, mixed reality experiences, and faster annotation of visual data for training computer vision systems.

Building on the success of the original SAM, which has been used in areas like marine science, satellite imagery, and medicine, SAM 2 tackles challenges like fast-moving objects and changes in appearance. Its improved accuracy and efficiency make it a versatile tool for a wide range of applications. In this article, we'll focus on where SAM 2 can be applied and why it matters for the AI community.

The Segment Anything Model 2 is an advanced foundation model that supports promptable visual segmentation (PVS) in both images and videos. PVS is a technique where a model segments a specific part of an image or video based on prompts given by the user. These prompts can be clicks, boxes, or masks that highlight the area of interest. The model then generates a segmentation mask that outlines the specified area.
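To make the prompt-to-mask idea concrete, here is a minimal toy sketch in Python. It is not SAM 2 and does not use its API: `Prompt` and `toy_segment` are hypothetical names, and a simple flood fill over equal pixel values stands in for the learned model. It only illustrates how positive clicks, negative clicks, and a box can be combined into a single mask.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Prompt:
    """Hypothetical prompt container: clicks are (row, col, is_positive)."""
    clicks: list = field(default_factory=list)
    box: Optional[tuple] = None  # (top, left, bottom, right), inclusive


def toy_segment(image, prompt):
    """Toy stand-in for a promptable segmenter (NOT the SAM 2 algorithm).

    A positive click selects the connected region of equal pixel values
    around it, a negative click removes its region, and an optional box
    limits where positive clicks may add pixels.
    """
    h, w = len(image), len(image[0])
    mask = [[False] * w for _ in range(h)]

    def inside_box(r, c):
        if prompt.box is None:
            return True
        top, left, bottom, right = prompt.box
        return top <= r <= bottom and left <= c <= right

    def region(r0, c0):
        # Breadth-first flood fill over 4-connected pixels of equal value.
        target = image[r0][c0]
        seen = {(r0, c0)}
        queue = deque([(r0, c0)])
        while queue:
            r, c = queue.popleft()
            for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if (0 <= nr < h and 0 <= nc < w
                        and (nr, nc) not in seen
                        and image[nr][nc] == target):
                    seen.add((nr, nc))
                    queue.append((nr, nc))
        return seen

    for r, c, positive in prompt.clicks:
        for rr, cc in region(r, c):
            if positive:
                if inside_box(rr, cc):
                    mask[rr][cc] = True
            else:
                mask[rr][cc] = False
    return mask


image = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 0, 2],
]
mask = toy_segment(image, Prompt(clicks=[(1, 1, True)]))
print(sum(v for row in mask for v in row))  # 4 pixels selected
```

A real promptable model replaces the flood fill with learned image and prompt encoders, but the interface has the same shape: an image plus prompts goes in, and a mask comes out.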

The SAM 2 architecture builds on the original SAM by expanding from image segmentation to include video segmentation as well. It features a lightweight mask decoder that uses image data and prompts to create segmentation masks. For videos, SAM 2 introduces a memory system that helps it remember information from previous frames, ensuring accurate tracking over time. The memory system includes components that store and recall details about the objects being segmented. SAM 2 can also handle occlusions, track objects through multiple frames, and manage ambiguous prompts by generating several possible masks. SAM 2’s advanced architecture makes it highly capable in both static and dynamic visual environments.
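The memory idea can be sketched with a small data structure. The example below is an illustration under stated assumptions, not Meta AI's implementation: the class name `MemoryBank`, its methods, and the queue sizes are hypothetical, and the sketch assumes a bounded first-in-first-out store that keeps prompted frames separately from recent frames.

```python
from collections import deque


class MemoryBank:
    """Hypothetical bounded memory of per-frame features for one object."""

    def __init__(self, max_recent=6, max_prompted=2):
        # Sizes are illustrative assumptions, not values from the article.
        self.recent = deque(maxlen=max_recent)      # latest unprompted frames
        self.prompted = deque(maxlen=max_prompted)  # frames the user prompted

    def add(self, frame_idx, features, was_prompted=False):
        """Store features for a frame; the oldest entry drops out when full."""
        target = self.prompted if was_prompted else self.recent
        target.append((frame_idx, features))

    def context(self):
        """Memories the current frame would condition on, oldest first."""
        return sorted(list(self.prompted) + list(self.recent),
                      key=lambda entry: entry[0])


bank = MemoryBank(max_recent=3, max_prompted=1)
bank.add(0, "features-0", was_prompted=True)  # user clicked on frame 0
for idx in range(1, 6):
    bank.add(idx, f"features-{idx}")          # model predictions on later frames

print([idx for idx, _ in bank.context()])  # [0, 3, 4, 5]
```

Keeping the prompted frame alongside a short window of recent frames is one way a tracker can recover an object after an occlusion: the original user guidance stays available even after older frames have been dropped.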

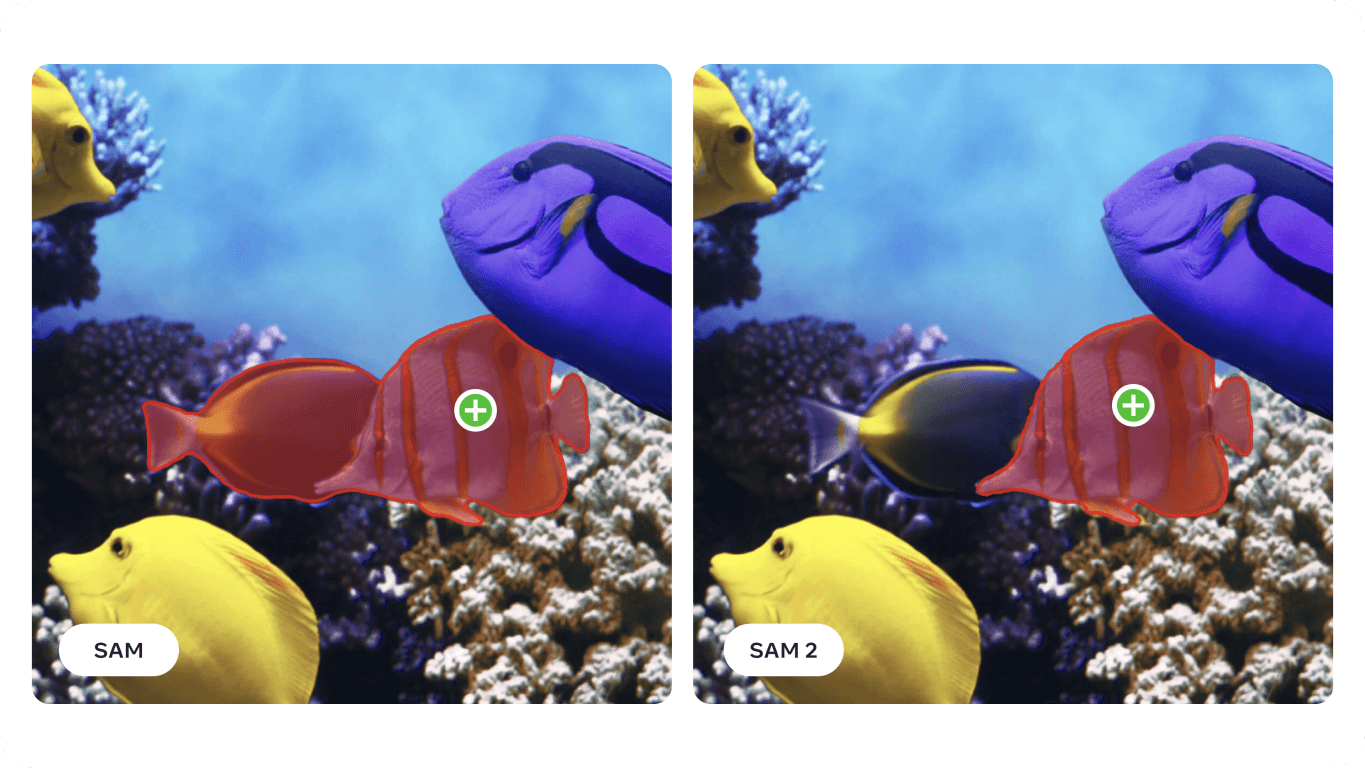

For video segmentation, SAM 2 achieves higher accuracy while requiring three times fewer user interactions than previous methods. For image segmentation, SAM 2 is more accurate than the original Segment Anything Model (SAM) and six times faster. The SAM 2 research paper demonstrates these improvements across 37 different datasets, including 23 that SAM was previously tested on.

To train SAM 2, Meta AI built the SA-V dataset, the largest video segmentation dataset to date. It includes over 50,000 videos and 35.5 million segmentation masks and was collected interactively: annotators provided prompts and corrections, helping the model learn from a wide variety of scenarios and object types.

Thanks to its advanced capabilities in image and video segmentation, SAM 2 can be used across various industries. Let’s explore some of these applications.

Meta AI’s new segmentation model can be used for Augmented Reality (AR) and Virtual Reality (VR) applications. For instance, SAM 2 can accurately identify and segment real-world objects and make interacting with virtual objects feel more realistic. It can be useful in various fields like gaming, education, and training, where a realistic interaction between virtual and real elements is essential.

With devices like AR glasses becoming more advanced, SAM 2's capabilities could soon be integrated into them. Imagine putting on glasses and looking around your living room. When your glasses segment and recognize your dog's water bowl, they might remind you to refill it, as shown in the image below. Or, if you are cooking a new recipe, the glasses could identify the ingredients on your countertop and provide step-by-step instructions and tips, so you can check that you have everything you need at hand.

Research with the original SAM has shown that it can be applied in specialized domains such as sonar imaging. Sonar imaging comes with unique challenges due to its low resolution, high noise levels, and the complex shapes of objects within the images. By fine-tuning SAM for sonar images, researchers have demonstrated its ability to accurately segment various underwater objects like marine debris, geological formations, and other items of interest. Precise and reliable underwater imaging can be used in marine research, underwater archaeology, fisheries management, and surveillance for tasks such as habitat mapping, artifact discovery, and threat detection.

Since SAM 2 addresses many of the challenges SAM faces, it has the potential to further improve the analysis of sonar imaging. Its precise segmentation capabilities can aid various marine applications, including scientific research and fisheries. For instance, SAM 2 could outline underwater structures, detect marine debris, and identify objects in forward-looking sonar images, contributing to more accurate and efficient underwater exploration and monitoring.

Here are the potential benefits of using SAM 2 to analyze sonar imaging:

By integrating SAM 2 into sonar imaging processes, the marine industry can achieve higher efficiency, accuracy, and reliability in underwater exploration and analysis, ultimately leading to better outcomes in marine research.

Another application of SAM 2 is in autonomous vehicles. SAM 2 can accurately identify objects like pedestrians, other vehicles, road signs, and obstacles in real time. The level of detail SAM 2 can provide is essential for making safe navigation and collision avoidance decisions. By processing visual data precisely, SAM 2 helps create a detailed and reliable map of the environment and leads to better decision-making.

SAM 2's ability to work well in different lighting conditions, weather changes, and dynamic environments makes it reliable for autonomous vehicles. Whether it's a busy urban street or a foggy highway, SAM 2 can consistently identify and segment objects accurately so that the vehicle can respond correctly to various situations.

However, there are some limitations to keep in mind. For complex, fast-moving objects, SAM 2 can sometimes miss fine details, and its predictions can become unstable across frames. Also, SAM 2 can sometimes confuse multiple similar-looking objects in crowded scenes. These challenges are why the integration of additional sensors and technologies is pivotal in autonomous driving applications.

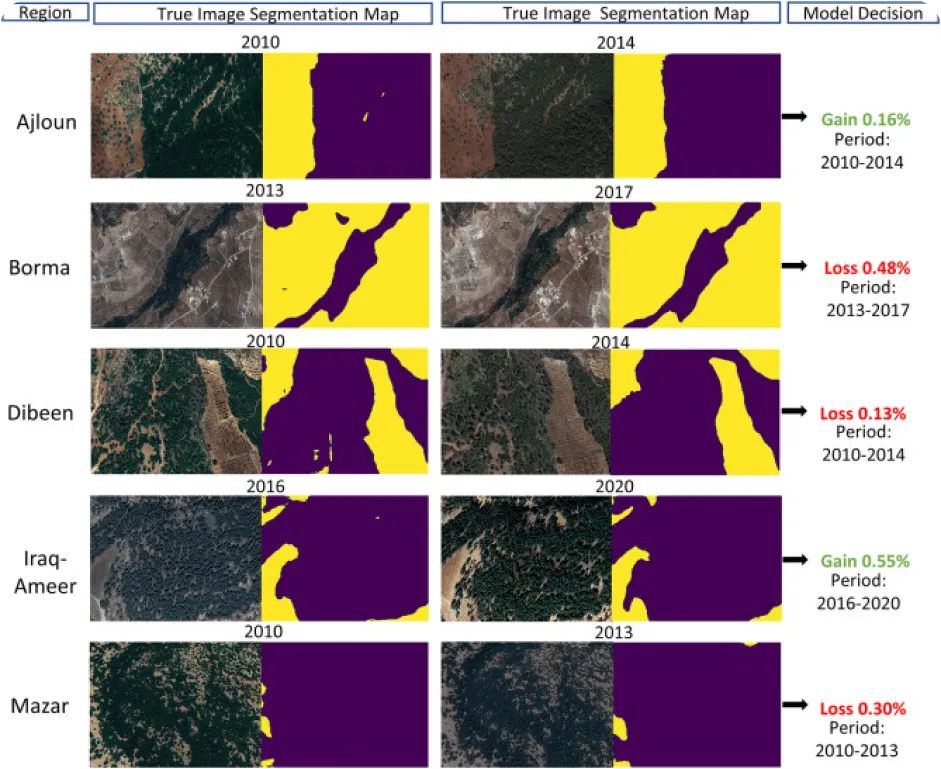

Environmental monitoring using computer vision can be tricky, especially when there's a lack of annotated data, but that's also what makes it an interesting application for SAM 2. SAM 2 can be used to track and analyze changes in natural landscapes by accurately segmenting and identifying various environmental features such as forests, water bodies, urban areas, and agricultural lands from satellite or drone images. Specifically, precise segmentation helps in monitoring deforestation, urbanization, and changes in land use over time to provide valuable data for environmental conservation and planning.
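Once a feature such as forest cover has been segmented at two points in time, quantifying the change is simple arithmetic on the masks. The sketch below assumes the masks are already available as equally sized grids of 0s and 1s; the function names and the tiny example grids are hypothetical and only illustrate the calculation.

```python
def area_fraction(mask):
    """Fraction of pixels in the mask that are marked as covered."""
    total = sum(len(row) for row in mask)
    covered = sum(1 for row in mask for value in row if value)
    return covered / total


def iou(mask_a, mask_b):
    """Intersection over union: how much two masks of equal size overlap."""
    pairs = [(a, b) for row_a, row_b in zip(mask_a, mask_b)
             for a, b in zip(row_a, row_b)]
    intersection = sum(1 for a, b in pairs if a and b)
    union = sum(1 for a, b in pairs if a or b)
    return intersection / union if union else 1.0


# Hypothetical forest masks for the same tile at two dates (1 = forest).
forest_before = [
    [1, 1, 1, 1],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
forest_after = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

change = area_fraction(forest_after) - area_fraction(forest_before)
print(change)                            # -0.25, a quarter of the tile lost cover
print(iou(forest_before, forest_after))  # 0.5
```

Multiplying the area fraction by the ground area that each tile covers turns the result into hectares or square kilometers, provided the imagery resolution is known.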

Here are some of the benefits of using a model like SAM 2 to analyze environmental changes over time:

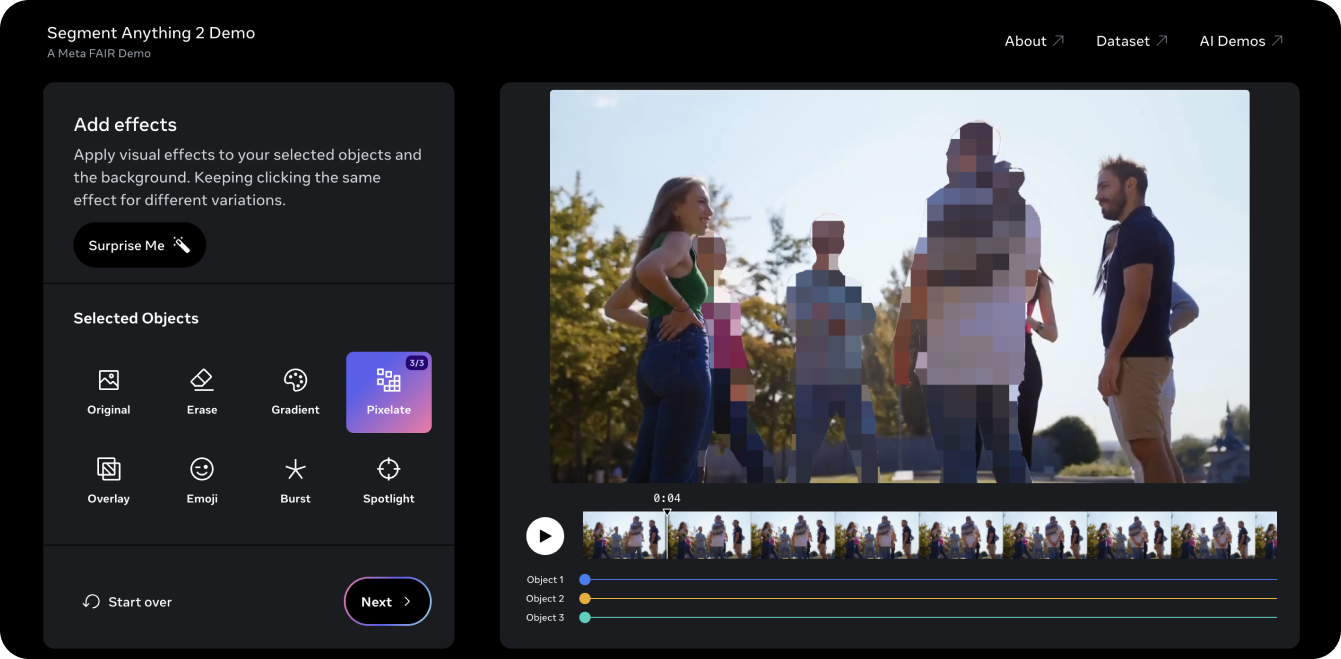

The Segment Anything 2 Demo is a great way to try the model out on a video. Using the PVS capabilities of SAM 2, we took an old Ultralytics YouTube video, segmented three objects or people in it, and pixelated them. Traditionally, editing three individuals out of a video like that would be time-consuming and tedious, requiring manual frame-by-frame masking. SAM 2 simplifies this process: with a few clicks in the demo, you can protect the identity of three objects of interest in a matter of seconds.
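The pixelation effect itself is easy to reproduce once a mask is available. The following is a minimal sketch, not the demo's actual implementation: it assumes a grayscale frame stored as a list of rows of integers and a mask of the same size, and `pixelate_masked` is a hypothetical helper name.

```python
def pixelate_masked(image, mask, block=2):
    """Replace masked pixels with the average value of their block.

    Pixels outside the mask are left untouched, so only the tracked
    object is obscured. The input image is not modified.
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, height, block):
        for left in range(0, width, block):
            cells = [(r, c)
                     for r in range(top, min(top + block, height))
                     for c in range(left, min(left + block, width))]
            if not any(mask[r][c] for r, c in cells):
                continue  # nothing to hide in this block
            average = sum(image[r][c] for r, c in cells) // len(cells)
            for r, c in cells:
                if mask[r][c]:
                    out[r][c] = average
    return out


frame = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
]
object_mask = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
]
print(pixelate_masked(frame, object_mask))
# [[35, 35, 30, 40], [35, 35, 70, 80]]
```

For a video, the same function would be applied to every frame using that frame's mask, which is exactly the part that object tracking automates.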

The demo also lets you try out a few different visual effects, like putting a spotlight on the objects you select for tracking and erasing the objects being tracked. If you liked the demo and are ready to start innovating with SAM 2, check out the Ultralytics SAM 2 model docs page for detailed instructions on getting hands-on with the model. Explore the features, installation steps, and examples to fully take advantage of SAM 2's potential in your projects!

Meta AI's Segment Anything Model 2 (SAM 2) is transforming video and image segmentation. As tasks like object tracking improve, we are discovering new opportunities in video editing, mixed reality, scientific research, and medical imaging. By making complex tasks easier and speeding up annotation, SAM 2 is set to become an important tool for the AI community. As we continue to explore and innovate with models like SAM 2, we can anticipate even more groundbreaking applications and advancements across various fields!

Get to know more about AI by exploring our GitHub repository and joining our community. Check out our solutions pages for detailed insights on AI in manufacturing and healthcare. 🚀