Deploying Ultralytics YOLO11 on Rockchip for efficient edge AI

Explore how to deploy Ultralytics YOLO11 on Rockchip using the RKNN Toolkit for efficient Edge AI, AI acceleration, and real-time object detection.

A recent buzzword in the AI community is edge AI, especially when it comes to computer vision. As AI-driven applications grow, there’s a greater need to run models efficiently on embedded devices with limited power and computing resources.

For example, drones use Vision AI for real-time navigation, smart cameras detect objects instantly, and industrial automation systems perform quality control without relying on cloud computing. These applications require fast, efficient AI processing directly on edge devices to ensure real-time performance and low latency. However, running AI models on edge devices isn’t always easy. AI models often require more power and memory than many edge devices can handle.

Rockchip’s RKNN Toolkit helps solve this problem by optimizing deep learning models for Rockchip-powered devices. It uses dedicated Neural Processing Units (NPUs) to speed up inference, reducing latency and power consumption compared to CPU or GPU processing.

The Vision AI community has been eager to run Ultralytics YOLO11 on Rockchip-based devices, and we heard you. We’ve added support for exporting YOLO11 to the RKNN model format. In this article, we’ll explore how exporting to RKNN works and why deploying YOLO11 on Rockchip-powered devices is a game-changer.

Rockchip is a company that designs systems-on-chip (SoCs): tiny yet powerful processors that run many embedded devices. These chips combine a CPU, a GPU, and a Neural Processing Unit (NPU) to handle everything from general computing tasks to Vision AI applications that rely on object detection and image processing.

Rockchip SoCs are used in a variety of devices, including single-board computers (SBCs), development boards, industrial AI systems, and smart cameras. Many well-known hardware manufacturers, including Radxa, ASUS, Pine64, Orange Pi, Odroid, Khadas, and Banana Pi, build devices powered by Rockchip SoCs. These boards are popular for edge AI and computer vision applications because they offer a balance of performance, power efficiency, and affordability.

To help AI models run efficiently on these devices, Rockchip provides the RKNN (Rockchip Neural Network) Toolkit. It lets developers convert and optimize deep learning models to use Rockchip’s Neural Processing Units (NPUs).

RKNN models are optimized for low-latency inference and efficient power usage. By converting models to RKNN, developers can achieve faster processing speeds, reduced power consumption, and improved efficiency on Rockchip-powered devices.

Let's take a closer look at how RKNN models improve AI performance on Rockchip-enabled devices.

Unlike CPUs and GPUs, which handle a wide range of computing tasks, Rockchip’s NPUs are designed specifically for deep learning. By converting AI models into RKNN format, developers can run inferences directly on the NPU. This makes RKNN models especially useful for real-time computer vision tasks, where quick and efficient processing is essential.

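NPUs can be faster and more power-efficient than CPUs and general-purpose GPUs for AI tasks because they are built to handle neural network computations in parallel. CPUs are optimized for largely sequential, general-purpose work, and GPUs distribute workloads across many general-purpose cores. NPUs give up that flexibility in exchange for hardware dedicated to the AI-specific calculations, such as matrix multiplications and convolutions, that dominate inference.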

As a result, RKNN models run faster and use less power, making them ideal for battery-powered devices, smart cameras, industrial automation, and other edge AI applications that require real-time decision-making.

Ultralytics YOLO (You Only Look Once) models are designed for real-time computer vision tasks like object detection, instance segmentation, and image classification. They are known for their speed, accuracy, and efficiency, and are widely used across industries such as agriculture, manufacturing, healthcare, and autonomous systems.

These models have improved substantially over time. For instance, Ultralytics YOLOv5 made object detection easier to use with PyTorch. Then, Ultralytics YOLOv8 added new features like pose estimation and image classification. Now, YOLO11 takes things further by increasing accuracy while using fewer resources. In fact, YOLO11m achieves a higher mean average precision (mAP) on the COCO dataset while using 22% fewer parameters than YOLOv8m, making it both more precise and more efficient.

Ultralytics YOLO models also support exporting to multiple formats, allowing flexible deployment across different platforms. These formats include ONNX, TensorRT, CoreML, and OpenVINO, giving developers the freedom to optimize performance based on their target hardware.

With the added support for exporting YOLO11 to the RKNN model format, YOLO11 can now take advantage of Rockchip’s NPUs. The smallest model, YOLO11n in RKNN format, achieves an inference time of 99.5 ms per image (roughly 10 frames per second), which is fast enough for many real-time applications on embedded devices.
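To put the latency figure in perspective, it can be converted to a frame rate. The snippet below is a small arithmetic sketch, not a benchmark: the function name `frames_per_second` is illustrative, and it assumes a single image stream with no batching and no extra time spent on pre- or post-processing.

```python
def frames_per_second(latency_ms: float) -> float:
    """Convert a per-image latency in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return 1000.0 / latency_ms


# 99.5 ms per image is the YOLO11n RKNN figure quoted in this article.
fps = frames_per_second(99.5)
print(f"{fps:.2f} FPS")  # prints "10.05 FPS"
```

Whether about 10 frames per second counts as real time depends on the application: it is usually enough for inventory scanning or safety checks, while fast-moving scenes may need a smaller input size or a lighter pipeline.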

Currently, YOLO11 object detection models can be exported to the RKNN format. Stay tuned: we are working on adding support for other computer vision tasks and INT8 quantization in future updates.

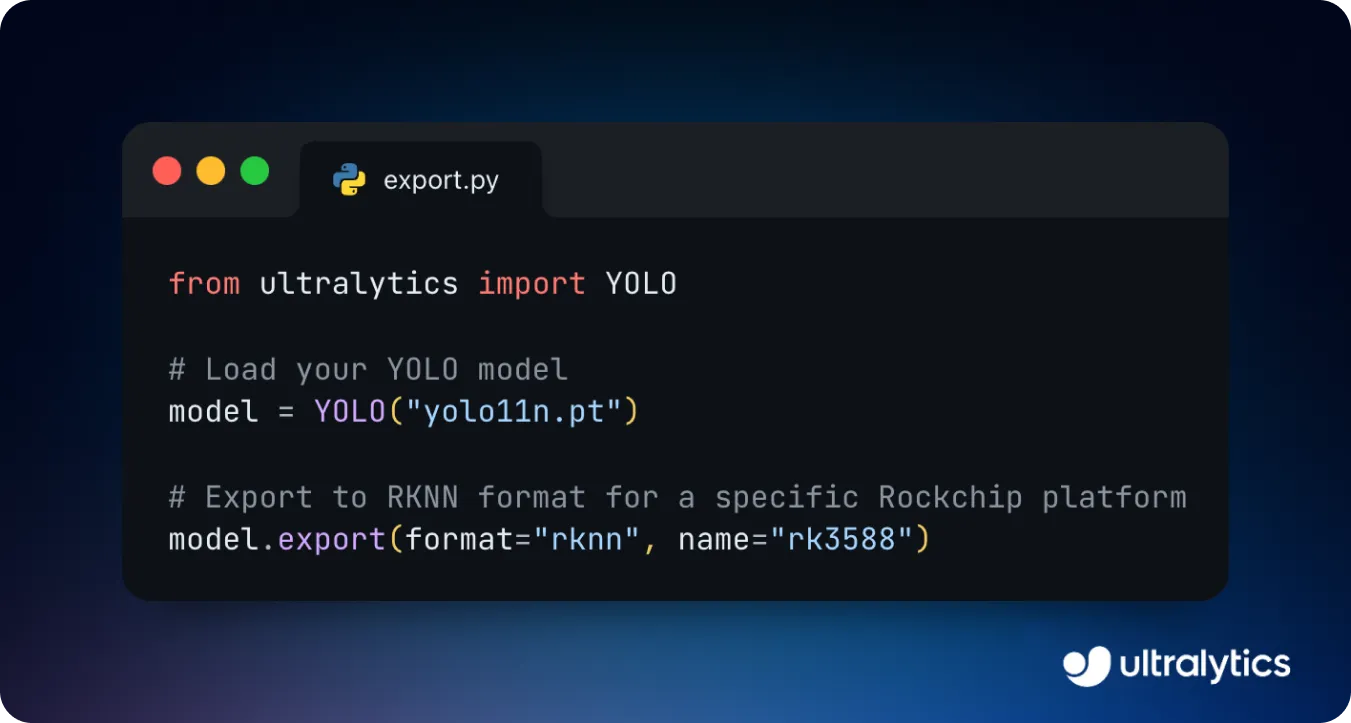

Exporting YOLO11 to RKNN format is a straightforward process. You can load your custom-trained YOLO11 model, specify the target Rockchip platform, and convert it to RKNN format with a few lines of code. The RKNN format is compatible with various Rockchip SoCs, including RK3588, RK3566, and RK3576, ensuring broad hardware support.
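The export described above can be sketched as follows. This is a minimal example, assuming the `ultralytics` package and the RKNN toolkit are installed, and assuming the export call takes `format="rknn"` with the target chip passed through the `name` argument, as in the Ultralytics export documentation at the time of writing. The helper names (`SUPPORTED_PLATFORMS`, `rknn_export_args`, `export_to_rknn`) and the weights file `yolo11n.pt` are illustrative, not part of any library.

```python
# Only the SoCs named in this article; the toolkit may support others.
SUPPORTED_PLATFORMS = {"rk3588", "rk3566", "rk3576"}


def rknn_export_args(platform: str) -> dict:
    """Build keyword arguments for an RKNN export targeting `platform`."""
    key = platform.strip().lower()
    if key not in SUPPORTED_PLATFORMS:
        raise ValueError(f"unsupported platform: {platform!r}")
    # Assumption: `name` selects the target Rockchip SoC when format="rknn".
    return {"format": "rknn", "name": key}


def export_to_rknn(weights: str, platform: str) -> str:
    """Load a YOLO11 checkpoint, export it to RKNN, and return the output path."""
    # Imported lazily so the argument helper above works without ultralytics.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.export(**rknn_export_args(platform))


# Example call (hypothetical weights file; requires ultralytics and the RKNN toolkit):
# export_to_rknn("yolo11n.pt", "rk3588")
```

Validating the platform name before loading the model keeps a typo from surfacing only after the slower conversion step has started.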

Once exported, the RKNN model can be deployed on Rockchip-based devices. To deploy the model, you load the exported RKNN files onto your Rockchip device and run inference, that is, you use the trained model to analyze new images or video and detect objects in real time. With just a few lines of code, you can start identifying objects from images or video streams.
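A minimal inference sketch follows, assuming the device has the `ultralytics` package and the Rockchip runtime installed, and that the exported model can be loaded through the same `YOLO` class used for training. The function names `count_detections` and `detect`, the model directory, and the image path are hypothetical placeholders.

```python
from collections import Counter


def count_detections(class_ids, names) -> dict:
    """Count detections per label, given class ids and an id-to-name mapping."""
    return dict(Counter(names[int(i)] for i in class_ids))


def detect(model_dir: str, source: str) -> dict:
    """Run an exported RKNN model on one image and count objects by label."""
    # Imported lazily so count_detections can be used without ultralytics.
    from ultralytics import YOLO

    model = YOLO(model_dir)
    result = model(source)[0]  # one Results object per input image
    return count_detections(result.boxes.cls.tolist(), result.names)


# Example call on the device (hypothetical paths):
# detect("./yolo11n_rknn_model", "warehouse_shelf.jpg")
```

Reducing each frame to per-label counts is one simple way to feed detections into downstream logic such as inventory tallies or safety checks.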

To get a better idea of where YOLO11 can be deployed on Rockchip-enabled devices in the real world, let's walk through some key edge AI applications.

Rockchip processors are widely used in Android-based tablets, development boards, and industrial AI systems. With support for Android, Linux, and Python, you can easily build and deploy Vision AI-driven solutions for a variety of industries.

Rugged tablets are a common place to run YOLO11 on Rockchip-powered hardware. They are durable, high-performance tablets designed for tough environments like warehouses, construction sites, and industrial settings. These tablets can leverage object detection to improve efficiency and safety.

For example, in warehouse logistics, workers can use a Rockchip-powered tablet with YOLO11 to automatically scan and detect inventory, reducing human error and speeding up processing times. Similarly, on construction sites, these tablets can be used to detect if workers are wearing the required safety gear, like helmets and vests, helping companies enforce regulations and prevent accidents.

In manufacturing and automation, Rockchip-powered industrial boards can play a big role in quality control and process monitoring. An industrial board is a compact, high-performance computing module designed for embedded systems in industrial settings. These boards typically include processors, memory, I/O interfaces, and connectivity options that can integrate with sensors, cameras, and automated machinery.

Running YOLO11 models on these boards makes it possible to analyze production lines in real time, spotting issues instantly and improving efficiency. For instance, in car manufacturing, an AI system using Rockchip hardware and YOLO11 can detect scratches, misaligned parts, or paint defects as cars move down the assembly line. By identifying these defects in real time, manufacturers can reduce waste, lower production costs, and ensure higher quality standards before vehicles reach customers.

Rockchip-based devices offer a good balance of performance, cost, and efficiency, making them a great choice for deploying YOLO11 in edge AI applications.

Here are a few advantages of running YOLO11 on Rockchip-based devices:

Ultralytics YOLO11 can run efficiently on Rockchip-based devices by leveraging hardware acceleration and the RKNN format. This reduces inference time and improves performance, making it ideal for real-time computer vision tasks and edge AI applications.

The RKNN Toolkit provides key optimization tools like quantization and fine-tuning, ensuring YOLO11 models perform well on Rockchip platforms. Optimizing models for efficient on-device processing will be essential as edge AI adoption grows. With the right tools and hardware, developers can unlock new possibilities for computer vision solutions in various industries.

Join our community and explore our GitHub repository to learn more about AI. See how computer vision in agriculture and AI in healthcare are driving innovation by visiting our solutions pages. Also, check out our licensing options to start building your Vision AI solutions today!