Exploring the Claude 3 model card: What it means for vision AI

Discover the Claude 3 model card and its impact on Vision AI development.

Discover the Claude 3 model card and its impact on Vision AI development.

In recent years, Vision AI has made significant strides, revolutionizing various industries from healthcare to retail. Understanding the underlying models and their documentation is crucial for leveraging these advancements effectively. One such essential tool in the Artificial Intelligence (AI) developer's arsenal is the model card, which offers a comprehensive overview of an AI model’s characteristics and performance.

In this article, we will explore the Claude 3 model card, developed by Anthropic, and its implications for Vision AI development. Claude 3 is a new family of large multimodal models consisting of three variants: Claude 3 Opus, the most capable model; Claude 3 Sonnet, which balances performance and speed; and Claude 3 Haiku, the fastest and most cost-effective option. Each model is newly equipped with vision capabilities, enabling them to process and analyze image data.

What exactly is a model card? A model card is a detailed document that provides insights into the development, training, and evaluation of a machine learning model. It aims to promote transparency, accountability and the ethical use of AI by presenting clear information about the model's functionality, intended use cases, and potential limitations. This can be achieved by providing more detailed data about the model such as its evaluation metrics, and its comparison to previous models and other competitors.

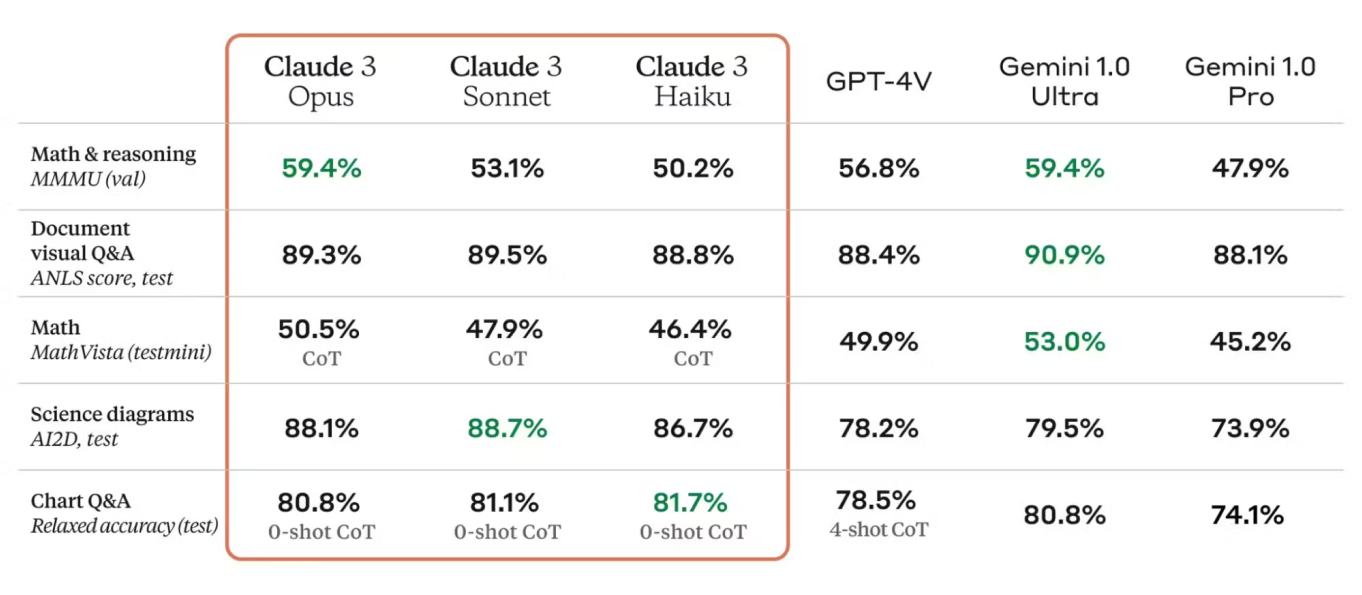

Evaluation metrics are critical for assessing model performance. The Claude 3 model card lists metrics like accuracy, precision, recall, and F1-score, providing a clear picture of the model’s strengths and areas for improvement. These metrics are benchmarked against industry standards, showcasing Claude 3’s competitive performance.

Moreover, Claude 3 builds on the strengths of its predecessors, incorporating advancements in architecture and training techniques. The model card compares Claude 3 with earlier versions, highlighting improvements in accuracy, efficiency, and applicability to new use cases.

Claude 3's architecture and training process result in reliable performance in various Natural Language Processing (NLP) and visual tasks. It consistently achieves strong results in benchmarks, demonstrating its ability to perform complex language analyses effectively.

Claude 3's training on diverse datasets and use of data augmentation techniques ensure its robustness and ability to generalize across different scenarios. This makes the model versatile and effective in a wide range of applications.

While its results are noteworthy, Claude 3 is fundamentally a Large Language Model (LLM). Although LLMs like Claude 3 can perform various computer vision tasks, they were not specifically designed for tasks such as object detection, boundary box creation, and image segmentation. As a result, their accuracy in these areas may not match that of models specifically built for computer vision, such as Ultralytics YOLOv8. Nevertheless, LLMs excel in other domains, particularly in Natural Language Processing (NLP), where Claude 3 demonstrates significant strength by merging simple visual tasks with human reasoning.

NLP capabilities refer to the ability of an AI model to understand and respond to human language. This capability is highly leveraged in Claude 3's applications within the visual field, enabling it to provide contextually rich descriptions, interpret complex visual data, and enhance overall performance in Vision AI tasks.

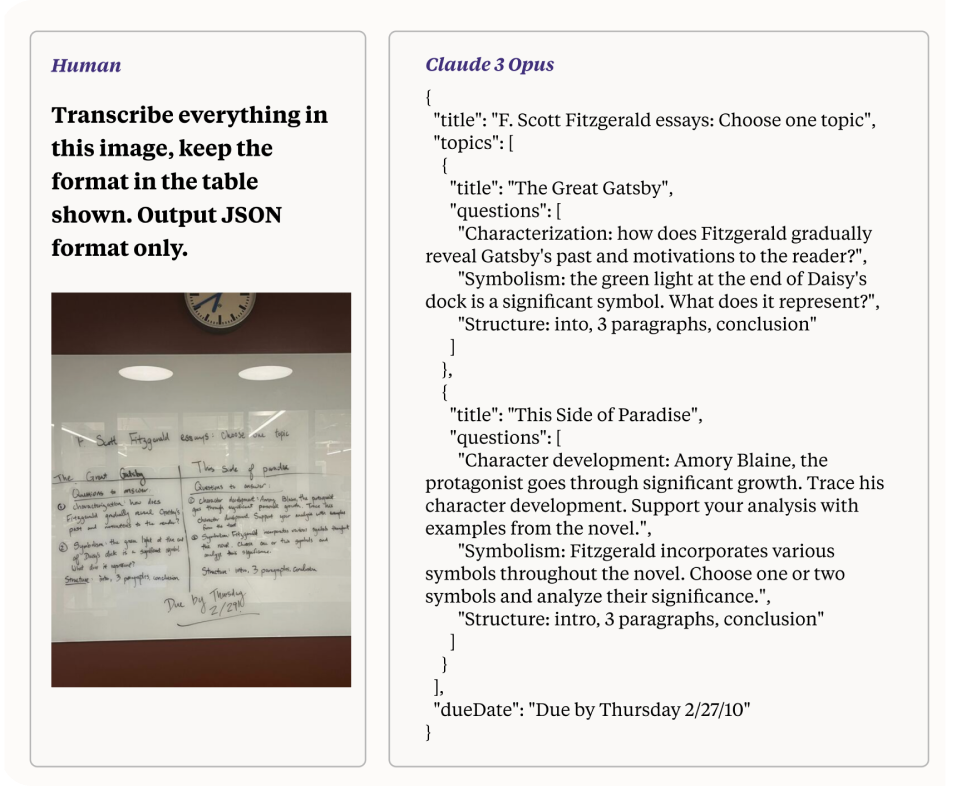

One of the impressive capabilities of Claude 3, especially when leveraged for Vision AI tasks, is its ability to process and convert low-quality images with hard-to-read handwriting into text. This feature showcases the model’s advanced processing power and multi-modal reasoning abilities. In this section, we will explore how Claude 3 accomplishes this task, highlighting the underlying mechanisms and implications for Vision AI development.

Converting a low-quality photo with hard-to-read handwriting into text is a complex task that involves several challenges:

As mentioned earlier, Claude 3 models addresses these challenges through a combination of advanced techniques in computer vision and natural language processing (NLP).

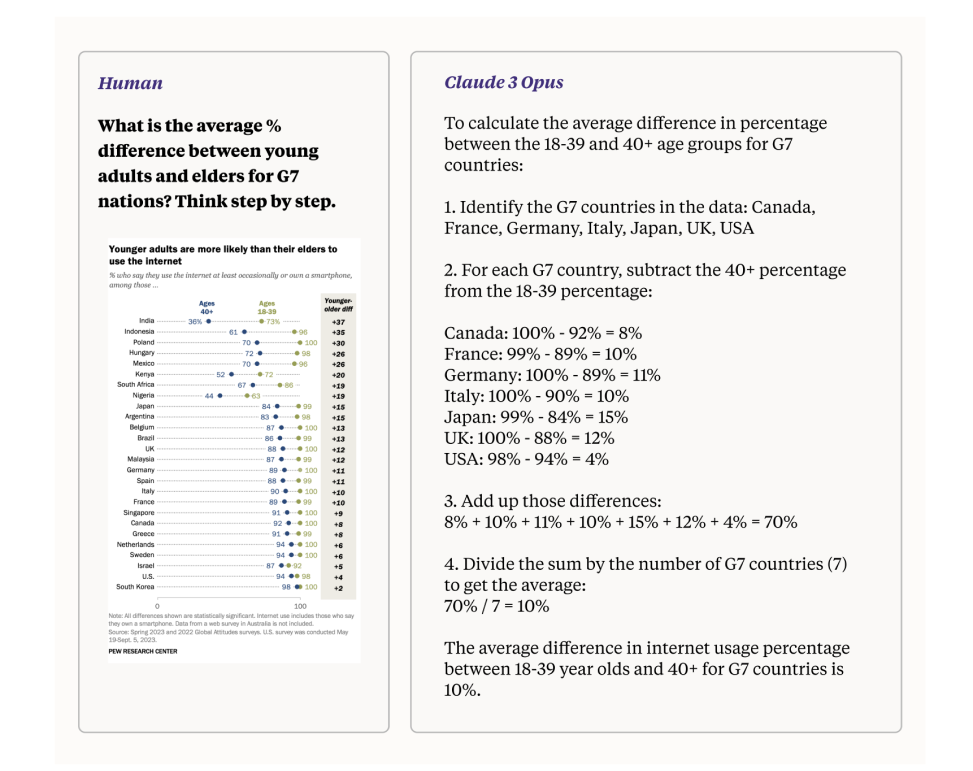

Claude 3's architecture enables it to perform complex reasoning tasks using visual inputs. For example, as shown in Figure 1, the model can interpret charts and graphs, such as identifying G7 countries in a chart about internet usage, extracting relevant data, and performing calculations to analyze trends. This multi-step reasoning, like calculating statistical differences in internet usage among age groups, enhances the model's accuracy and usefulness in real-world applications.

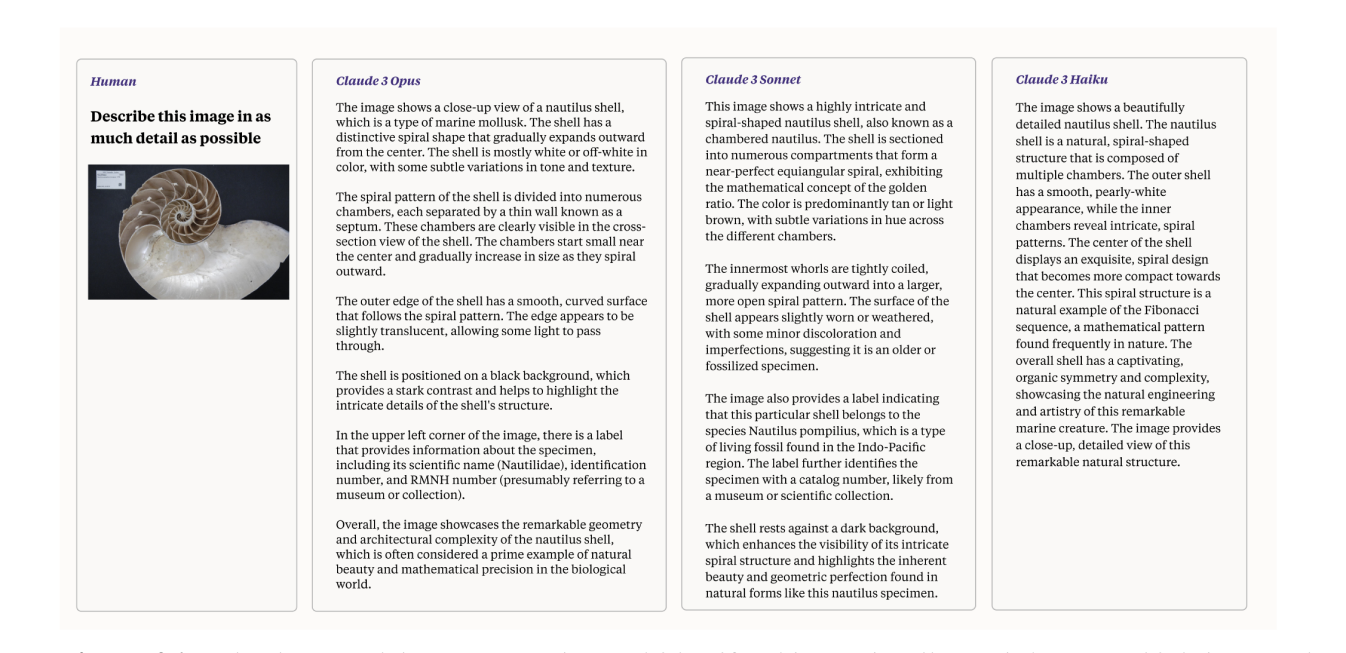

Claude 3 excels at transforming images into detailed descriptions, showcasing its powerful capabilities in both computer vision and natural language processing. When given an image, Claude 3 first employs convolutional neural networks (CNNs) to extract key features and identify objects, patterns, and contextual elements within the visual data.

Following this, transformer layers analyze these features, leveraging attention mechanisms to understand relationships and context between different elements in the image. This multi-modal approach allows Claude 3 to generate accurate, contextually rich descriptions by not only identifying objects but also understanding their interactions and significance within the scene.

Large language models (LLMs) like Claude 3 excel in natural language processing, not computer vision. While they can describe images, tasks like object detection and image segmentation are better handled by vision-oriented models like YOLOv8. These specialized models are optimized for visual tasks and provide better performance for analyzing images. Moreover, the model can not perform tasks such as bounding box creation.

Combining Claude 3 with computer vision systems can be complex and may require additional processing steps to bridge the gap between text and visual data.

Claude 3 is primarily trained on vast amounts of textual data, which means it lacks the extensive visual datasets required to achieve high performance in computer vision tasks. As a result, while Claude 3 excels in understanding and generating text, it does not have the capability to process or analyze images with the same level of proficiency found in models specifically designed for visual data. This limitation makes it less effective for applications that require interpreting or generating visual content.

Similar to other large language models, Claude 3 is set for continuous improvement. Future enhancements will likely focus on better visual tasks such as image detection and object recognition, as well as advancements in natural language processing tasks. This will enable more accurate and detailed descriptions of objects and scenes among other similar tasks.

Lastly, ongoing research on Claude 3 will prioritize enhancing interpretability, reducing bias, and improving generalization across diverse datasets. These efforts will ensure the model’s robust performance in various applications and foster trust and reliability in its outputs.

The Claude 3 model card is a valuable resource for developers and stakeholders in Vision AI, providing detailed insights into the model’s architecture, performance, and ethical considerations. By promoting transparency and accountability, it helps ensure the responsible and effective use of AI technologies. As Vision AI continues to evolve, the role of model cards like that of Claude 3 will be crucial in guiding development and fostering trust in AI systems.

At Ultralytics, we're passionate about advancing AI technology. To explore our AI solutions and stay updated with our latest innovations, visit our GitHub repository. Join our community on Discord and discover how we're transforming industries like Self-Driving Cars and manufacturing! 🚀