Learn more about Veo, Google DeepMind's latest generative video model that can effortlessly create high-quality 1080P videos from text, image, and video prompts.

Learn more about Veo, Google DeepMind's latest generative video model that can effortlessly create high-quality 1080P videos from text, image, and video prompts.

During Google's 2024 I/O presentation on May 14th, they shared the latest updates from DeepMind, their AI division. One of the most exciting advancements shared was their newest generative video model, Veo. Veo can create high-quality 1080P videos based on text, image, and video prompts. It even lets you edit generated videos with subsequent prompts. Veo takes generative AI to the next level. Let’s take a closer look at the features Veo offers.

Veo is a generative video model that uses a deep understanding of language and visuals to create videos that closely match a user's creative vision. It can capture the tone and details of longer prompts accurately, making it a powerful tool for creators who want to transform their ideas into precise video content.

The user can have groundbreaking creative control over the generated video because Veo can understand film techniques like "timelapse" and "aerial shots of a landscape." This creative control makes it possible for users to create videos where people, animals, and objects move naturally. Videos generated by Veo are engaging and visually attractive because it’s hard to spot that they are generated by an AI model.

Veo goes beyond merely creating videos from prompts. If you provide a previously generated video and a specific edit request, such as inserting kayaks into an aerial view of a coastline, Veo can seamlessly integrate this change into the original video, producing an updated version.

.webp)

Here are some more features that Veo offers:

Let’s walk through some of the videos Veo has generated and why it is so breathtaking.

Generating a video of a timelapse from a short text prompt is challenging. Typically, the short text prompt can’t accurately convey changes and movements within the scene of the timelapse. So, it is astonishing that Veo can understand what to expect from a timelapse without going into the details.

.webp)

Similarly, generating videos with accurate physics is not easy. The AI model needs to understand and simulate laws of physics such as gravity, momentum, and collisions to make movements and interactions appear realistic. It is impressive that Veo is able to accurately model these dynamics without detailed guidance from text prompts.

.webp)

Until now, we’ve only seen shorter videos generated by AI due to computational limitations and the complexity of maintaining coherence over longer sequences. At Google’s 2024 I/O presentation Veo’s mind blowing ability to create longer and more intricate videos was shown.

Like many other AI models, Veo stands on the shoulders of giants. It draws from previous advancements such as Generative Query Network (GQN), DVD-GAN, Imagen-Video, Phenaki, WALT, VideoPoet, and Lumiere, as well as Google’s proprietary Transformer architecture and Gemini. Plus, to improve Veo's ability to interpret prompts accurately, the captions of each video in its training dataset were more detailed.

Based on the rough model workflow shared by Google, here’s how Veo works:

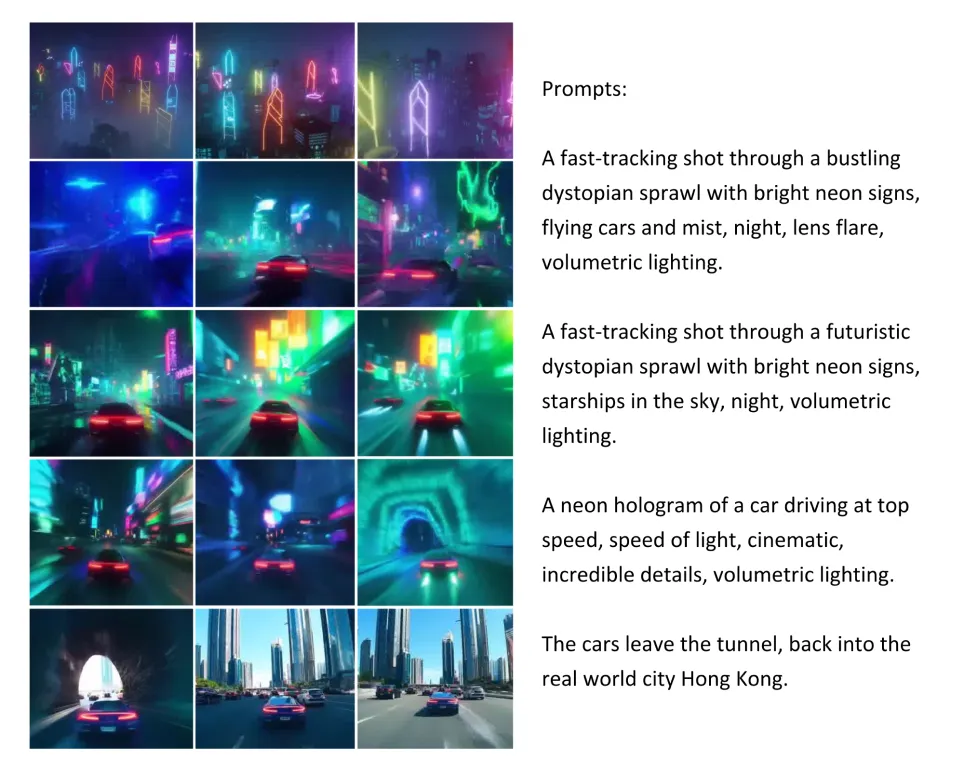

To test out Veo’s abilities, Google teamed up with filmmaker Donald Glover and his creative studio, Gilga. They used Veo to explore various creative techniques, including dynamic tracking shots, which require precise movement and consistent framing.

.webp)

Traditionally, filmmakers face limitations due to time and resource constraints. With Veo, Glover and his team could quickly experiment with and generate complex shots, which, in turn, provided more flexibility and innovation in the filmmaking process.

With Veo, Glover and his team could quickly experiment with and generate complex shots before actual filming. For example, they could test out various dynamic tracking shots to see how they would look and make adjustments as needed. This pre-visualization process helped them to refine their ideas and ensure that the shots would work as intended, ultimately reducing the number of takes required during actual filming. They were able to create a compelling case study to demonstrate Veo's potential to change the film industry. It offers a faster and more efficient way to bring creative visions to life.

Veo's advanced video generation capabilities have practical applications across many industries. In advertising, it can quickly produce customized, high-quality commercials for targeted audiences, saving time and production costs. In education, Veo can create engaging instructional videos, making complex concepts easier to understand.

Businesses can use Veo for training and corporate communications. Healthcare professionals might use Veo to simulate medical procedures for training purposes. Regarding virtual events and conferences, Veo can create lifelike simulations of venues and stages, offering attendees an engaging and interactive experience from anywhere. Organizers benefit from expanded reach and valuable insights for future events. Thanks to Veo, countless opportunities have opened up.

When an AI model has the potential to touch different industries, it’s important to keep in mind safety and ethical AI. To enable broader adoption and ensure responsible use, Google has implemented several safety measures. Videos created by Veo are watermarked using SynthID, a tool for watermarking and identifying AI-generated content. The SynthId ensures transparency and helps mitigate privacy, copyright, and bias risks. Other than this, all generated videos pass through safety filters and memorization-checking processes. These safeguards make Veo a valuable and ethical tool that supports responsible and innovative video production.

In the upcoming weeks, Google will start offering some of Veo’s groundbreaking features to select creators through VideoFX, a new tool available at labs.google. This initiative allows early access to Veo’s advanced video generation capabilities, giving creators the opportunity to experiment with its innovative features. The waitlist for Veo is currently open, inviting interested creators to sign up and use Veo's powerful tools in their projects.

Aside from Veo, DeepMind has introduced several cutting-edge updates in generative AI for 2024. One of these updates is Imagen 3, their most advanced text-to-image model yet. Imagen 3 excels at creating photorealistic, lifelike images. It understands natural language prompts deeply and captures intricate details while minimizing visual artifacts.

.webp)

DeepMind has also developed Lyria, its most advanced model for AI music generation. As part of this effort, DeepMind has created a suite of music AI tools called Music AI Sandbox. These tools enable musicians and producers to explore new creative possibilities in music composition and sound transformation.

.webp)

Similar to Veo, DeepMind has implemented several safety measures regarding its other updates as well. The SynthID will be used across these updates as a tool for watermarking and identifying AI-generated content. These updates from DeepMind promise to transform various industries by offering advanced, efficient, and responsible tools for creating high-quality visual and audio content.

DeepMind's 2024 generative AI advancements, including Veo, Imagen 3, and Lyria, mark a considerable jump in AI capabilities. Veo transforms video creation with its ability to generate high-quality 1080p videos from simple prompts, making it a versatile tool for filmmakers and content creators. Imagen 3 shines in producing photorealistic images, while Lyria introduces new possibilities in music generation with advanced AI tools.

These technologies promise to transform various industries by providing efficient and responsible tools for creating high-quality visual and audio content. With safety measures like SynthID ensuring ethical use, DeepMind continues to expand the boundaries of AI, paving the way for innovative applications in the future.

Dive into AI by visiting our GitHub repository and joining our community. Explore our solutions pages to learn how AI is applied in manufacturing and agriculture.