Integrating computer vision in robotics with Ultralytics YOLO11

Take a closer look at how computer vision models like Ultralytics YOLO11 are making robots smarter and shaping the future of robotics.

Robots have come a long way since Unimate, the first industrial robot, which was invented in the 1950s. What began as pre-programmed, rule-based machines has now advanced into intelligent systems capable of performing complex tasks and interacting with the real world.

Today, robots are used to automate processes across industries, from manufacturing and healthcare to agriculture. A key factor in the evolution of robotics is AI and, in particular, computer vision, a branch of AI that helps machines understand and interpret visual information.

For example, computer vision models like Ultralytics YOLO11 are improving the intelligence of robotic systems. When integrated into these systems, Vision AI enables robots to recognize objects, navigate environments, and make real-time decisions.

In this article, we will take a look at how YOLO11 can enhance robots with advanced computer vision capabilities and explore its applications across various industries.

A robot’s core functionality depends on how well it understands its surroundings. This awareness connects its physical hardware to smart decision-making. Without it, robots can only follow fixed instructions and struggle to adapt to changing environments or handle complex tasks. Just as humans rely on sight to navigate, robots use computer vision to interpret their environment, understand the situation, and take appropriate actions.

In fact, computer vision is fundamental to most robotic tasks. It helps robots detect objects and avoid obstacles while moving around. Seeing the world isn't enough, however; robots also have to react quickly. In real-world situations, even a slight delay can lead to costly errors. Models like Ultralytics YOLO11 enable robots to gather insights in real time and respond with minimal delay, even in complex or unfamiliar situations.
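To make the idea of detecting objects and avoiding obstacles more concrete, here is a minimal sketch of how a robot might turn bounding boxes into a movement decision. Everything in it is an illustrative assumption rather than part of YOLO11 or any robot's actual control stack: the function name `choose_action`, the rule that only the middle third of the frame counts as the robot's path, and the use of apparent box size as a rough stand-in for distance.

```python
def choose_action(boxes, frame_width, frame_height, stop_fraction=0.25):
    """Pick a coarse action from detected boxes (hypothetical rule set).

    boxes: iterable of (x1, y1, x2, y2) pixel coordinates.
    Returns one of "forward", "stop", "steer_left", "steer_right".
    """
    frame_area = frame_width * frame_height
    path_left = frame_width / 3        # assumed path: middle third of the frame
    path_right = 2 * frame_width / 3
    centers_in_path = []

    for x1, y1, x2, y2 in boxes:
        center_x = (x1 + x2) / 2
        if not (path_left <= center_x <= path_right):
            continue  # object is off to the side, ignore it
        box_area = (x2 - x1) * (y2 - y1)
        # Assumption: a box covering a large share of the frame is close.
        if box_area / frame_area >= stop_fraction:
            return "stop"
        centers_in_path.append(center_x)

    if not centers_in_path:
        return "forward"

    # Steer away from the average position of the obstacles in the path.
    mean_center = sum(centers_in_path) / len(centers_in_path)
    return "steer_left" if mean_center >= frame_width / 2 else "steer_right"


if __name__ == "__main__":
    # A small object slightly right of center in a 600x400 frame.
    print(choose_action([(300, 100, 380, 200)], 600, 400))
```

A real robot would combine a rule like this with depth sensing and a proper motion planner; the sketch only shows where detection output could plug into decision-making.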

Before we dive into how YOLO11 can be integrated into robotic systems, let's first explore YOLO11’s key features.

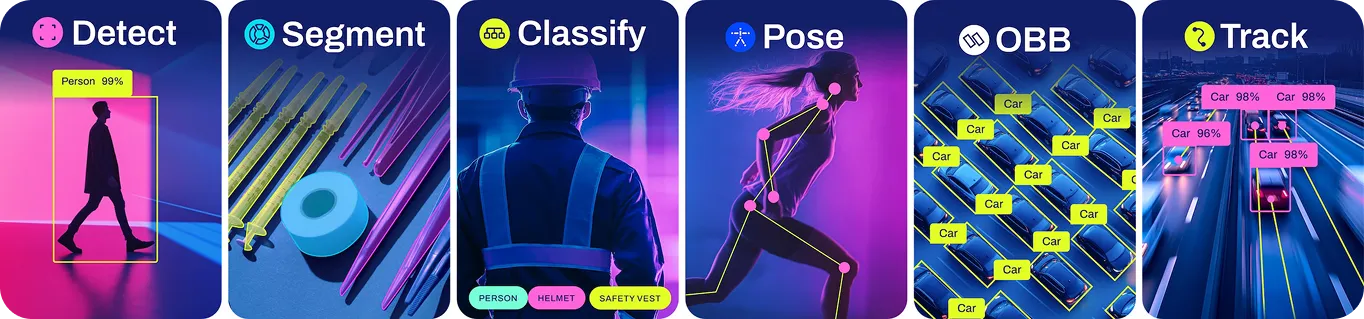

Ultralytics YOLO models support various computer vision tasks that help deliver fast, real-time insights. In particular, Ultralytics YOLO11 offers faster performance, lower computational costs, and improved accuracy compared with earlier YOLO versions. For instance, it can be used to detect objects in images and videos with high precision, making it well suited to applications in fields like robotics, healthcare, and manufacturing.
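As a rough illustration of detecting objects in an image, the sketch below runs a pretrained YOLO11 model through the `ultralytics` Python package and converts the output into plain records that robot code could consume. The `Detection` class, the `to_detections` and `detect` helpers, the 0.5 confidence cutoff, and the file name `frame.jpg` are assumptions made for this example; the `ultralytics` import is deferred so the helper logic can be read and tested without the package installed.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float
    box_xyxy: tuple  # (x1, y1, x2, y2) in pixels


def to_detections(names, classes, confidences, boxes, min_conf=0.5):
    """Turn parallel lists of raw model outputs into Detection records."""
    detections = []
    for class_id, conf, box in zip(classes, confidences, boxes):
        if conf >= min_conf:  # drop low-confidence predictions
            detections.append(
                Detection(names[int(class_id)], float(conf),
                          tuple(float(v) for v in box))
            )
    return detections


def detect(model, source, min_conf=0.5):
    """Run a loaded Ultralytics YOLO model on one image or frame."""
    result = model(source)[0]  # one Results object per input image
    boxes = result.boxes
    return to_detections(result.names, boxes.cls.tolist(),
                         boxes.conf.tolist(), boxes.xyxy.tolist(), min_conf)


if __name__ == "__main__":
    from ultralytics import YOLO  # requires `pip install ultralytics`

    model = YOLO("yolo11n.pt")  # small pretrained detection weights
    for det in detect(model, "frame.jpg"):  # "frame.jpg" is a placeholder path
        print(det)
```

Loading the model once and reusing it for every frame, as done here, avoids paying the model start-up cost on each image.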

Here are some impactful features that make YOLO11 a great option for robotics:

User-friendly: YOLO11’s easy-to-understand documentation and interface help reduce the learning curve, making it simple to integrate into robotic systems.

Here’s a closer look at some of the computer vision tasks that YOLO11 supports:

From intelligent learning to industrial automation, models like YOLO11 can help redefine what robots can do. Their integration into robotics demonstrates how computer vision models are driving advancements in automation. Let's explore some key domains where YOLO11 can make a significant impact.

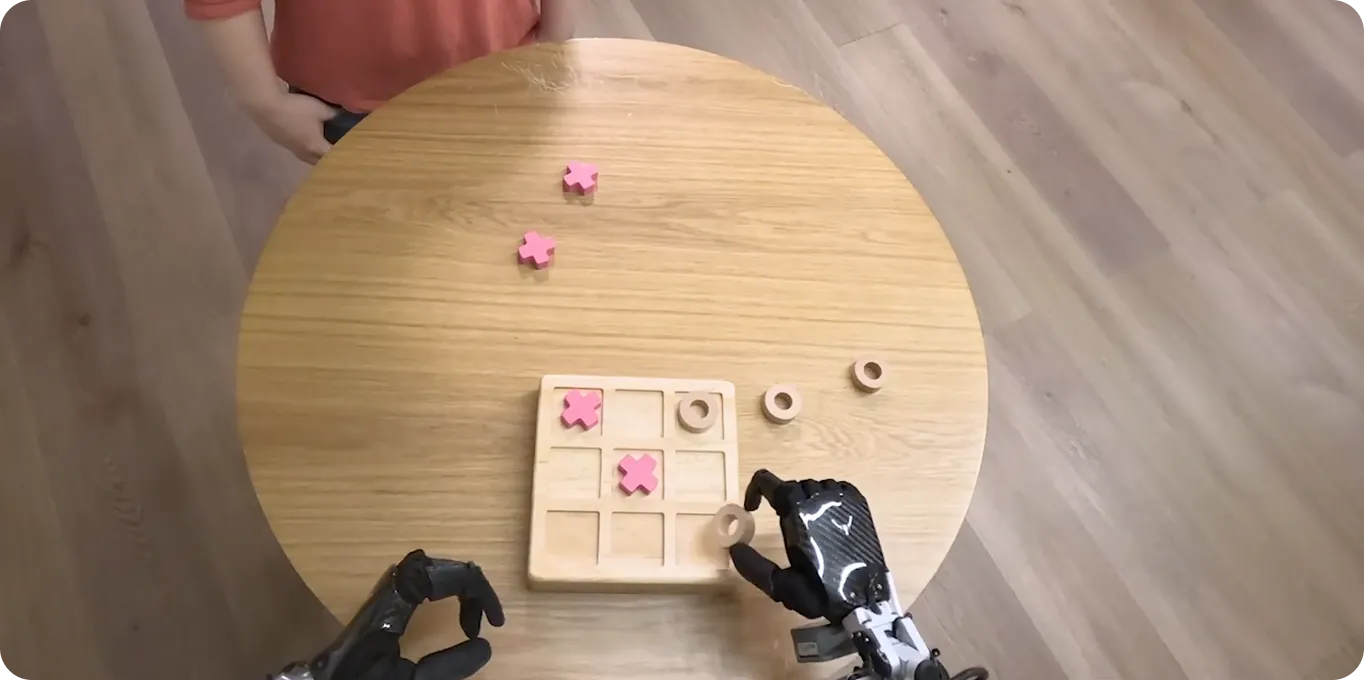

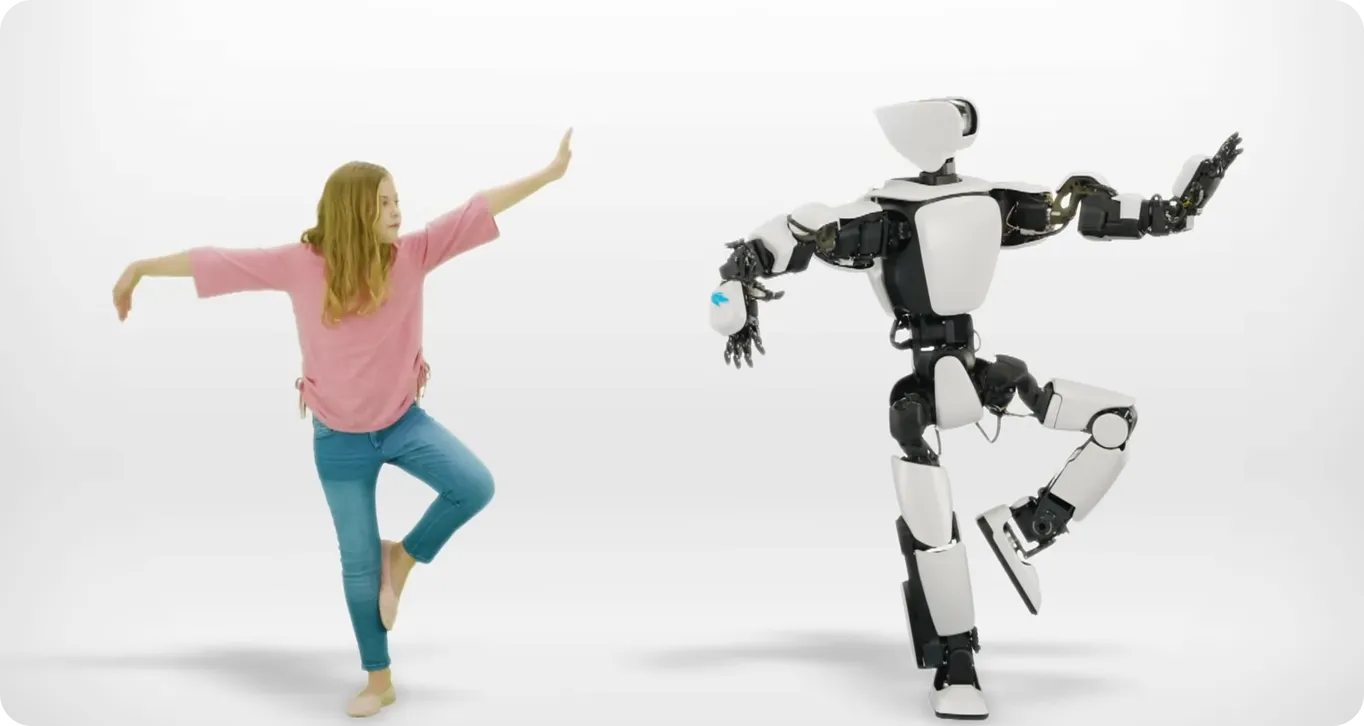

Computer vision is widely used in humanoid robots, enabling them to learn by observing their environment. Models like YOLO11 can help to enhance this process by providing advanced object detection and pose estimation, which helps robots accurately interpret human actions and behaviors.

By analyzing subtle movements and interactions in real time, robots can be trained to replicate complex human tasks. This lets them go beyond pre-programmed routines and learn tasks, such as using a remote control or a screwdriver, simply by watching a person.
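One simple way to picture how pose estimation could feed this kind of learning is to convert detected body keypoints into joint angles that a robot arm might try to reproduce. The sketch below is an assumption-laden example, not a description of how any particular humanoid robot is trained: it assumes keypoints arrive as 17 (x, y) pairs in the standard COCO order (which the pretrained YOLO11 pose models use), and the helper names `joint_angle` and `left_elbow_angle` are made up for illustration.

```python
import math

# Indices of the left arm joints in the COCO 17-keypoint layout.
LEFT_SHOULDER, LEFT_ELBOW, LEFT_WRIST = 5, 7, 9


def joint_angle(a, b, c):
    """Return the angle at point b (in degrees) formed by points a-b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("keypoints must not coincide with the joint")
    cosine = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cosine = max(-1.0, min(1.0, cosine))  # guard against rounding error
    return math.degrees(math.acos(cosine))


def left_elbow_angle(keypoints):
    """keypoints: sequence of 17 (x, y) pairs for one person."""
    return joint_angle(keypoints[LEFT_SHOULDER],
                       keypoints[LEFT_ELBOW],
                       keypoints[LEFT_WRIST])


if __name__ == "__main__":
    person = [(0.0, 0.0)] * 17
    person[LEFT_SHOULDER] = (100.0, 100.0)
    person[LEFT_ELBOW] = (150.0, 100.0)
    person[LEFT_WRIST] = (150.0, 150.0)
    print(f"left elbow angle: {left_elbow_angle(person):.1f} degrees")
```

Tracking angles like this over a sequence of frames gives a compact description of a human motion, which is one possible input for an imitation-learning pipeline.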

This type of learning can be useful in different industries. For instance, in agriculture, robots can watch human workers to learn tasks like planting, harvesting, and managing crops. By copying how humans do these tasks, robots can adjust to different farming conditions without needing to be programmed for every situation.

Similarly, in healthcare, computer vision is becoming more and more important. For example, YOLO11 can be used in medical devices to help surgeons with complex procedures. With features like object detection and instance segmentation, YOLO11 can help robots spot internal body structures, manage surgical tools, and make precise movements.

While this might sound like something out of science fiction, recent research demonstrates the practical application of computer vision in surgical procedures. In an interesting study on autonomous robotic dissection for cholecystectomy (gallbladder removal), researchers integrated YOLO11 for tissue segmentation (classifying and separating different tissues in an image) and surgical instrument keypoint detection (identifying specific landmarks on the tools).

The system was able to accurately distinguish between different tissue types, even as the tissues deformed (changed shape) during the procedure, and dynamically adjusted to these changes. This made it possible for the robotic instruments to follow precise dissection (surgical cutting) paths.

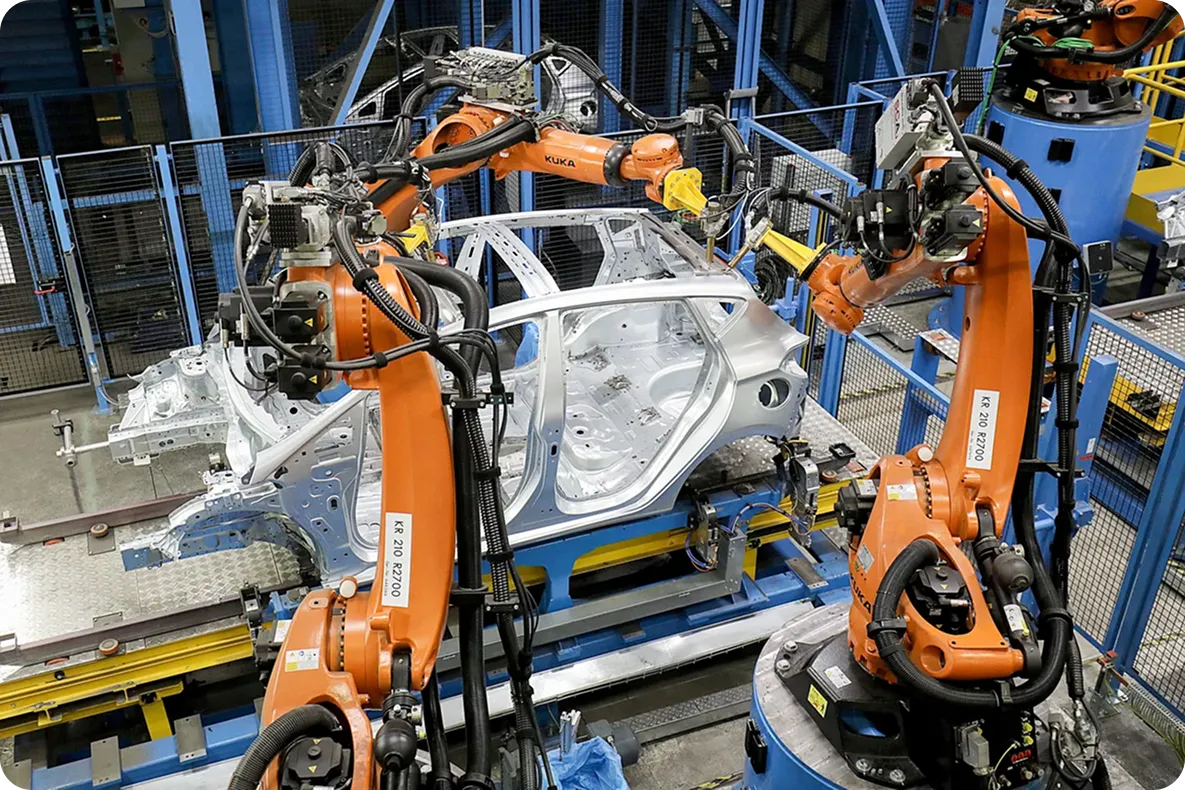

Robots that can pick and place objects are playing a key role in automating manufacturing operations and optimizing supply chains. Their speed and accuracy enable them to perform tasks with minimal human input, such as identifying and sorting items.

With YOLO11’s precise instance segmentation, robotic arms can be trained to detect and segment objects moving on a conveyor belt, accurately pick them up, and place them in designated locations based on their type and size.
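The sorting step described above can be sketched in a few lines. This example assumes that an instance segmentation model has already produced, for each item, a class label, a mask area in pixels, and a mask centroid; the `SegmentedItem` class, the `assign_bin` and `plan_picks` helpers, the 5000-pixel size threshold, and the assumption that the belt moves in the +x direction are all hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class SegmentedItem:
    label: str          # class name predicted by the model
    mask_area_px: int   # number of pixels in the instance mask
    centroid: tuple     # (x, y) center of the mask in image pixels


def assign_bin(item, size_threshold_px=5000):
    """Name the destination bin from the item's type and apparent size."""
    size = "large" if item.mask_area_px >= size_threshold_px else "small"
    return f"{item.label}_{size}"


def plan_picks(items, size_threshold_px=5000):
    """Return (pick_point, bin_name) pairs, furthest along the belt first.

    Assumes the belt moves toward larger x, so items with a larger
    centroid x will leave the camera view soonest.
    """
    ordered = sorted(items, key=lambda item: item.centroid[0], reverse=True)
    return [(item.centroid, assign_bin(item, size_threshold_px))
            for item in ordered]


if __name__ == "__main__":
    belt = [
        SegmentedItem("bolt", 1200, (100, 50)),
        SegmentedItem("gear", 8000, (300, 60)),
    ]
    for pick_point, bin_name in plan_picks(belt):
        print(pick_point, "->", bin_name)
```

In a real cell, the pixel coordinates would still need to be mapped into the robot arm's coordinate frame through camera calibration before a pick could be executed.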

For example, popular car manufacturers are using vision-based robots to assemble different car parts, improving assembly line speed and precision. Computer vision models like YOLO11 can enable these robots to work alongside human workers, ensuring seamless integration of automated systems in dynamic production settings. This advancement can lead to faster production times, fewer errors, and higher-quality products.

YOLO11 offers several key benefits that make it ideal for seamless integration into autonomous robotics systems. Here are some of the main advantages:

While computer vision models provide powerful tools for robotic vision, there are some limitations to consider when integrating them into real-world robotics systems. Some of these limitations include:

Computer vision systems are not just tools for today's robots; they are building blocks for a future where robots can operate autonomously. With their real-time detection abilities and support for multiple tasks, they are well suited to next-generation robotics.

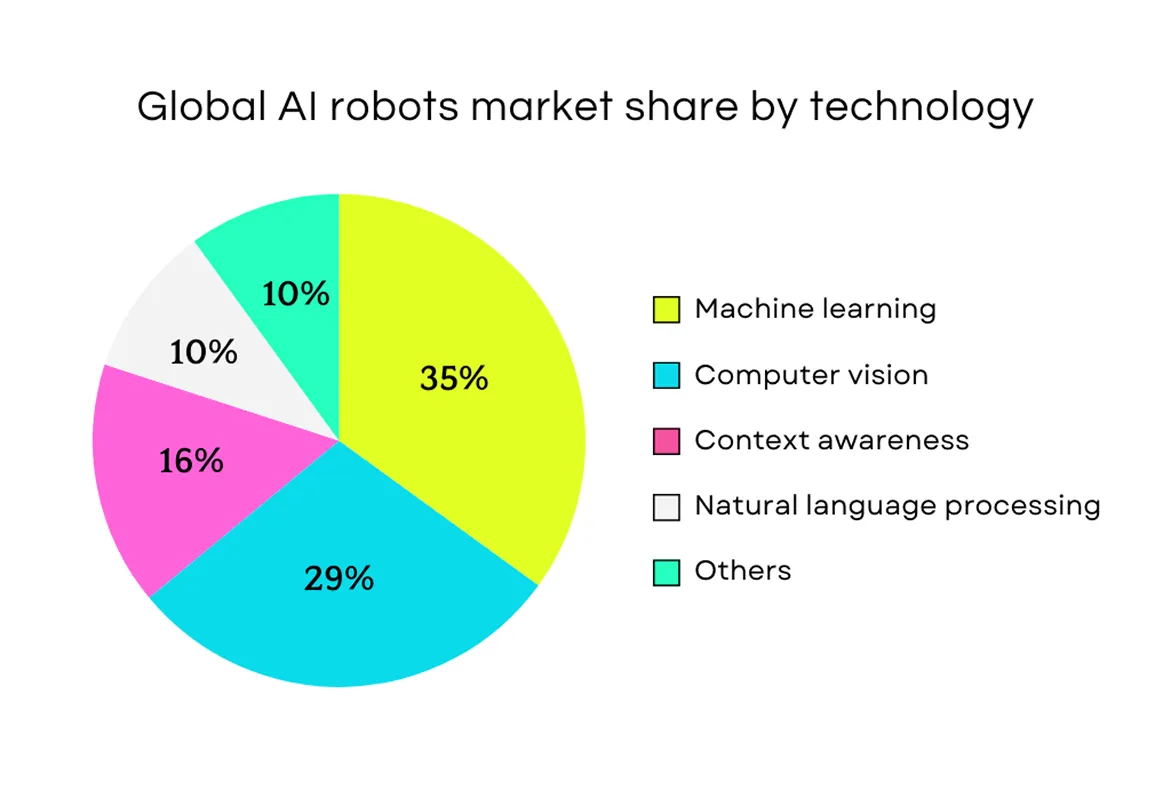

Current market trends show that computer vision is becoming increasingly essential in robotics. Industry reports highlight that computer vision is the second most widely used technology in the global AI robotics market.

With its ability to process real-time visual data, YOLO11 can help robots detect, identify, and interact with their surroundings more accurately. This makes a huge difference in fields like manufacturing, where robots can collaborate with humans, and healthcare, where they can assist in complex surgeries.

As robotics continues to advance, the integration of computer vision into such systems will be crucial for enabling robots to handle a wide range of tasks more efficiently. The future of robotics looks promising, with AI and computer vision driving even smarter and more adaptable machines.

Join our community and check our GitHub repository to learn more about recent developments in AI. Explore various applications of AI in healthcare and computer vision in agriculture on our solution pages. Check out our licensing plans to build your own computer vision solutions.