Explore OpenAI's new GPT-4o, featuring advanced AI with lifelike interactions that change how we communicate with technology. Explore its groundbreaking features!

On Monday, May 13th, 2024, OpenAI announced the launch of its new flagship model, GPT-4o, where the 'o' stands for 'omni'. GPT-4o is an advanced multimodal AI model for real-time text, audio, and vision interactions, offering faster processing, multilingual support, and enhanced safety.

GPT-4o brings new generative AI capabilities to the table. Building on the conversational strengths of ChatGPT, its features mark a substantial step forward in how people perceive AI: we can now talk to GPT-4o almost as though it were a real person. Let’s dive in and see exactly what GPT-4o is capable of!

At OpenAI’s spring update, it was revealed that while GPT-4o is just as intelligent as GPT-4, it can process data faster and is better equipped to handle text, vision, and audio. Unlike prior releases that focused on making the models smarter, this release focuses on making AI easier for a general audience to use.

ChatGPT’s voice mode, which was released late last year, chained three different models together: one to transcribe vocal inputs, one to understand them and generate written replies, and one to convert text to speech so the user could hear a response. This mode suffered from latency issues and didn’t feel very natural. GPT-4o can natively process text, vision, and audio in a single model, giving the user the impression of taking part in a natural conversation.

Also, unlike in voice mode, you can now interrupt GPT-4o while it’s talking, and it’ll react just like a person would. It’ll pause and listen, then give its real-time response based on what you said. It can also express emotions through its voice and understand your tone as well.

GPT-4o’s model evaluation shows just how advanced it is. One of the most interesting results found was that GPT-4o greatly improves speech recognition compared to Whisper-v3 in all languages, especially those that are less commonly used.

Automatic Speech Recognition (ASR) performance measures how accurately a model transcribes spoken language into text. GPT-4o's performance is tracked by the Word Error Rate (WER): the number of word substitutions, deletions, and insertions needed to turn the model's transcript into the reference transcript, divided by the number of words in the reference (lower WER means better quality). OpenAI's evaluation chart shows GPT-4o achieving a lower WER than Whisper-v3 across various regions, demonstrating its effectiveness in improving speech recognition for lower-resourced languages.
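To make the WER metric concrete, here is a minimal sketch of how it can be computed with a word-level edit distance. The function name `word_error_rate` and the sample sentences are illustrative assumptions, not part of OpenAI's evaluation code, and the normalization (lowercasing and splitting on whitespace) is deliberately simplistic.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (substitutions + deletions + insertions) / reference length.

    Illustrative sketch only: real evaluations usually apply more careful
    text normalization (punctuation, numerals, casing) before scoring.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing from transcript
                curr[j - 1] + 1,     # insertion: extra word in transcript
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # Hypothetical reference transcript and model output.
    score = word_error_rate("the cat sat on the mat", "the cat sit on mat")
    print(f"WER: {score:.2%}")
```

In this example the transcript has one substituted word and one missing word against a six-word reference, so the WER is 2/6. Because insertions count as errors, WER can exceed 100% when a transcript contains many extra words.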

Here’s a look at some more of GPT-4o's unique features:

You can now open GPT-4o on your phone, turn on your camera, and ask it, like you would a friend, to guess your mood based on your facial expression. GPT-4o can view you through the camera and answer.

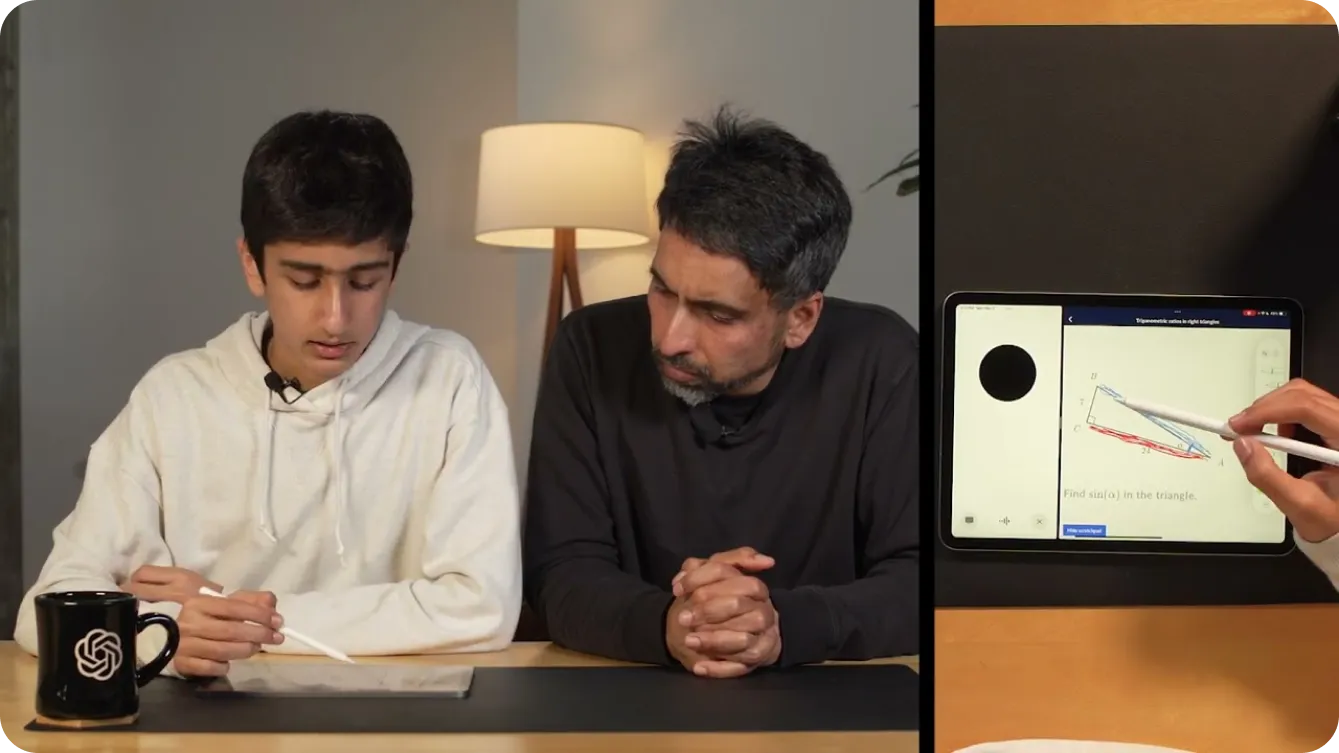

You can even use it to help you solve math problems by showing GPT-4o what you are writing through video. Alternatively, you could share your screen, and it can become a helpful tutor on Khan Academy, asking you to point out different parts of a triangle in geometry.

Beyond helping kids with math, developers can have conversations with GPT-4o to debug their code. This is possible thanks to the introduction of ChatGPT as a desktop app. If you highlight your code and copy it with Ctrl+C while talking to the desktop GPT-4o voice app, it’ll be able to read your code. You could also use it to translate conversations between developers who speak different languages.

The possibilities with GPT-4o seem endless. One of the most interesting demos from OpenAI used two phones to show GPT-4o talking to another instance of itself, with the two singing together.

As shown in a demo, GPT-4o can make the world more accessible for people with vision impairments. It can help them interact and move around more safely and independently. For example, users can turn on their video and show GPT-4o a view of the street. GPT-4o can then provide real-time descriptions of the environment, such as identifying obstacles, reading street signs, or guiding them to a specific location. It can even help them hail a taxi by alerting them when a taxi is approaching.

Similarly, GPT-4o can transform various industries with its advanced capabilities. In retail, it can improve customer service by providing real-time assistance, answering queries, and helping customers find products both online and in-store. For example, if you are looking at a shelf and can’t spot the product you want, GPT-4o can help you find it.

In healthcare, GPT-4o can assist with diagnostics by analyzing patient data, suggesting possible conditions based on symptoms, and offering guidance on treatment options. It can also support medical professionals by summarizing patient records, providing quick access to medical literature, and even offering real-time language translation to communicate with patients who speak different languages. These are only a couple of examples. GPT-4o’s applications make daily life easier by offering tailored, context-aware assistance and breaking down barriers to information and communication.

Just like the previous versions of GPT, which have impacted hundreds of millions of lives, GPT-4o will likely interact with real-time audio and video globally, making safety a crucial element in these applications. OpenAI has been very careful to build GPT-4o with a focus on mitigating potential risks.

To ensure safety and reliability, OpenAI has implemented rigorous safety measures. These include filtering training data, refining the model’s behavior after training, and incorporating new safety systems for managing voice outputs. Moreover, GPT-4o has been extensively tested by over 70 external experts in fields such as social psychology, bias and fairness, and misinformation. External testing makes sure that any risks introduced or amplified by the new features are identified and addressed.

To maintain high safety standards, OpenAI is releasing GPT-4o’s features gradually over the next few weeks. A phased rollout allows OpenAI to monitor performance, address any issues, and gather user feedback. Taking a careful approach ensures that GPT-4o delivers advanced capabilities while maintaining the highest standards of safety and ethical use.

GPT-4o is free to try. To test the real-time conversation abilities mentioned above, you can download the ChatGPT app from the Google Play Store or Apple App Store directly onto your phone.

After logging in, tap the three dots in the upper right-hand corner of the screen and select GPT-4o from the list displayed. Once you are in a chat enabled with GPT-4o, tapping the plus sign in the lower-left corner of the screen shows multiple input options. In the lower-right corner of the screen, you’ll see a headphone icon. When you select it, you’ll be asked if you’d like to experience a hands-free version of GPT-4o. After agreeing, you’ll be able to try out GPT-4o in voice conversation.

If you’d like to integrate GPT-4o's advanced capabilities into your own projects, it is available as an API for developers. It allows you to incorporate GPT-4o's powerful speech recognition, multilingual support, and real-time conversational abilities into your applications. By using the API, you can enhance user experiences, build smarter apps, and bring cutting-edge AI technology to different sectors.
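As a rough illustration of what an integration might look like, the sketch below sends a text prompt, optionally with an image, to GPT-4o through the Chat Completions endpoint. It assumes the official `openai` Python package (version 1.x) is installed and that an `OPENAI_API_KEY` environment variable is set; the helper names `build_messages` and `ask_gpt4o` and the example URL are hypothetical. It covers text and image input only and does not show audio features.

```python
from typing import Optional


def build_messages(prompt: str, image_url: Optional[str] = None) -> list:
    """Build a Chat Completions message list with optional image input.

    Hypothetical helper for illustration; only the message structure
    follows the API's documented format.
    """
    content = [{"type": "text", "text": prompt}]
    if image_url is not None:
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    return [{"role": "user", "content": content}]


def ask_gpt4o(prompt: str, image_url: Optional[str] = None) -> str:
    """Send the prompt to GPT-4o and return the text of its reply."""
    # Imported lazily so build_messages works without the package installed.
    from openai import OpenAI

    client = OpenAI()  # assumes OPENAI_API_KEY is set in the environment
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=build_messages(prompt, image_url),
    )
    return response.choices[0].message.content


# Example usage (requires network access and a valid API key):
# print(ask_gpt4o("What is in this picture?", "https://example.com/street.png"))
```

Keeping the message construction separate from the network call makes it easy to inspect or unit-test the request payload without spending API credits.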

While GPT-4o is far more advanced than previous AI models, it’s important to remember that it comes with its own limitations. OpenAI has mentioned that it can sometimes randomly switch languages while talking, for example going from English to French. They’ve also seen GPT-4o translate incorrectly between languages. As more people try out the model, we’ll understand where GPT-4o excels and where it needs further improvement.

OpenAI's GPT-4o opens new doors for AI with its advanced text, vision, and audio processing, offering natural, human-like interactions. It excels in terms of speed, cost-efficiency, and multilingual support. GPT-4o is a versatile tool for education, accessibility, and real-time assistance. As users explore GPT-4o's capabilities, feedback will drive its evolution. GPT-4o proves that AI is truly changing our world and becoming a part of our daily lives.

Explore our GitHub repository and join our community to dive deeper into AI. Visit our solutions pages to see how AI is transforming industries like manufacturing and agriculture.