Join us as we revisit a keynote talk from YOLO Vision 2024 that explores how Hugging Face’s open-source tools are advancing AI development.

Choosing the right algorithms is just one part of building impactful computer vision solutions. AI engineers often work with large datasets, fine-tune models for specific tasks, and optimize AI systems for real-world performance. As AI applications are adopted more rapidly, the need for tools that simplify these processes is also growing.

At YOLO Vision 2024 (YV24), the annual hybrid event powered by Ultralytics, AI experts and tech enthusiasts came together to explore the latest innovations in computer vision. The event sparked discussions on various topics, such as ways to speed up AI application development.

A key highlight from the event was a keynote on Hugging Face, an open-source AI platform that streamlines model training, optimization, and deployment. Pavel Iakubovskii, a Machine Learning Engineer at Hugging Face, shared how its tools improve workflows for computer vision tasks such as detecting objects in images, classifying images into categories, and making predictions for categories the model was never explicitly trained on (zero-shot learning).

Hugging Face Hub hosts and provides access to various AI and computer vision models like Ultralytics YOLO11. In this article, we’ll recap the key takeaways from Pavel’s talk and see how developers can use Hugging Face’s open-source tools to build and deploy AI models quickly.

Pavel started his talk by introducing Hugging Face as an open-source AI platform offering pre-trained models for a variety of applications. These models are designed for various branches of AI, including natural language processing (NLP), computer vision, and multimodal AI, enabling systems to process different types of data, such as text, images, and audio.

Pavel mentioned that Hugging Face Hub now hosts over 1 million models, so developers can easily find models suited to their specific projects. Hugging Face aims to simplify AI development by offering tools for model training, fine-tuning, and deployment. Being able to experiment with different models makes it easier to integrate AI into real-world applications.

While Hugging Face was initially known for NLP, it has since expanded into computer vision and multimodal AI, enabling developers to tackle a broader range of AI tasks. It also has a strong community where developers can collaborate, share insights, and get support through forums, Discord, and GitHub.

Going into more detail, Pavel explained how Hugging Face’s tools make it easier to build computer vision applications. Developers can use them for tasks like image classification, object detection, and vision-language applications.

He also pointed out that many of these computer vision tasks can be handled with pre-trained models available on the Hugging Face Hub, saving time by reducing the need for training from scratch. In fact, Hugging Face offers over 13,000 pre-trained models for image classification tasks, including ones for food classification, pet classification, and emotion detection.

Emphasizing the accessibility of these models, he said, "You probably don't even need to train a model for your project - you might find one on the Hub that’s already trained by someone from the community."

Giving another example, Pavel elaborated on how Hugging Face can help with object detection, a key function in computer vision that is used to identify and locate objects within images. Even with limited labeled data, pre-trained models available on the Hugging Face Hub can make object detection more efficient.

He also gave a quick overview of several models built for this task that you can find on Hugging Face.

Pavel then shifted the focus to getting hands-on with the Hugging Face models, explaining three ways developers can leverage them: exploring models, quickly testing them, and customizing them further.

He demonstrated how developers can browse models directly on the Hugging Face Hub without writing any code, making it easy to test models instantly through an interactive interface. "You can try it without writing even a line of code or downloading the model on your computer," Pavel added. Since some models are large, running them on the Hub helps avoid storage and processing limitations.

Also, the Hugging Face Inference API lets developers run AI models with simple API calls. It's great for quick testing, proof-of-concept projects, and rapid prototyping without the need for a complex setup.

For more advanced use cases, developers can use the Hugging Face Transformers framework, an open-source tool that provides pre-trained models for text, vision, and audio tasks while supporting both PyTorch and TensorFlow. Pavel explained that with just two lines of code, developers can retrieve a model from the Hugging Face Hub and link it to a preprocessing tool, such as an image processor, to analyze image data for Vision AI applications.
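As a rough sketch of the two-line loading pattern described above, the snippet below pairs a model with its image processor. The checkpoint name `google/vit-base-patch16-224`, the choice of image classification as the task, and the helper names `load_classifier` and `classify` are assumptions picked for illustration, not details from the talk.

```python
# Illustrative checkpoint (an assumption, not taken from the talk).
CHECKPOINT = "google/vit-base-patch16-224"


def load_classifier(checkpoint: str = CHECKPOINT):
    """Fetch an image processor and a matching model from the Hugging Face Hub."""
    # Imported here so that defining the helper does not itself require transformers.
    from transformers import AutoImageProcessor, AutoModelForImageClassification

    # The "two lines": one for preprocessing, one for the model weights.
    processor = AutoImageProcessor.from_pretrained(checkpoint)
    model = AutoModelForImageClassification.from_pretrained(checkpoint)
    return processor, model


def classify(image, processor, model) -> str:
    """Return the predicted label for a single PIL image."""
    import torch

    # The processor resizes and normalizes the image into model-ready tensors.
    inputs = processor(images=image, return_tensors="pt")
    with torch.no_grad():  # inference only, no gradients needed
        logits = model(**inputs).logits
    return model.config.id2label[logits.argmax(-1).item()]
```

Calling `load_classifier()` downloads the weights on first use. After that, `classify(image, processor, model)` with any PIL image should return a label string.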

Next, Pavel explained how Hugging Face can streamline AI workflows. One key topic he covered was optimizing the attention mechanism, a core component of Transformer models that helps them focus on the most relevant parts of the input data. Attention improves accuracy in language processing and computer vision tasks, but it can be resource-intensive.

Optimizing the attention mechanism can significantly reduce memory usage while improving speed. Pavel pointed out, "For example, by switching to a more efficient attention implementation, you could see up to 1.8x faster performance."

Hugging Face provides built-in support for more efficient attention implementations within the Transformers framework. Developers can enable these optimizations by simply specifying an alternative attention implementation when loading a model.
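In Transformers, this choice is made through the `attn_implementation` argument of `from_pretrained`. The sketch below wraps it in a hypothetical helper, `load_with_attention`. The allow-list is an assumption made for this example and is narrower than what a given library version may accept, and not every architecture supports every backend.

```python
# Names accepted by this sketch: a deliberately narrow allow-list (an assumption),
# not the full set a given transformers version may support.
ATTENTION_BACKENDS = ("eager", "sdpa", "flash_attention_2")


def load_with_attention(checkpoint: str, attn_implementation: str = "sdpa"):
    """Load a Hub model with an explicitly chosen attention implementation."""
    if attn_implementation not in ATTENTION_BACKENDS:
        raise ValueError(f"unknown attention backend: {attn_implementation!r}")

    # Imported here so that the validation above works without transformers installed.
    from transformers import AutoModel

    # "sdpa" routes attention through PyTorch's scaled_dot_product_attention;
    # "flash_attention_2" additionally needs the flash-attn package and a supported GPU.
    return AutoModel.from_pretrained(checkpoint, attn_implementation=attn_implementation)
```

Any speedup depends on the model, the hardware, and the input size, so it is worth measuring on your own workload rather than assuming a fixed gain.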

He also talked about quantization, a technique that makes AI models smaller by reducing the precision of the numbers they use, usually with only a small loss in accuracy. This helps models use less memory and run faster, making them more suitable for devices with limited processing power, like smartphones and embedded systems.
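To make the idea concrete, here is a minimal, library-free sketch of symmetric 8-bit quantization: each float is stored as a small integer plus one shared scale factor. The function names and the sample weights are made up for illustration, and production toolkits use more elaborate schemes (per-channel scales, calibration data, and so on).

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # The scale maps the largest magnitude onto 127; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only to show the round trip.
weights = [0.5, -1.27, 0.0, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The rounding error per value is at most half of one quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, scale, max_error)
```

Each value now fits in one byte instead of four, at the cost of a rounding error bounded by half the scale.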

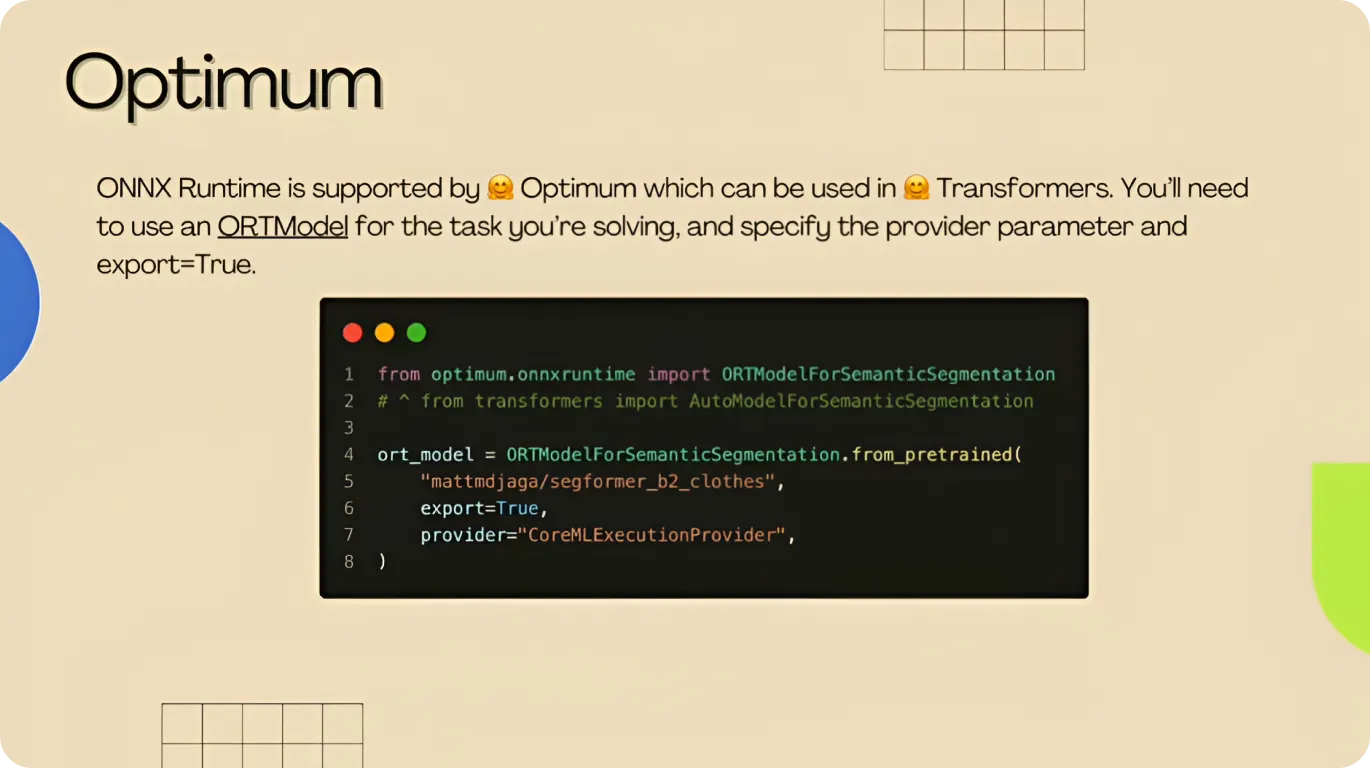

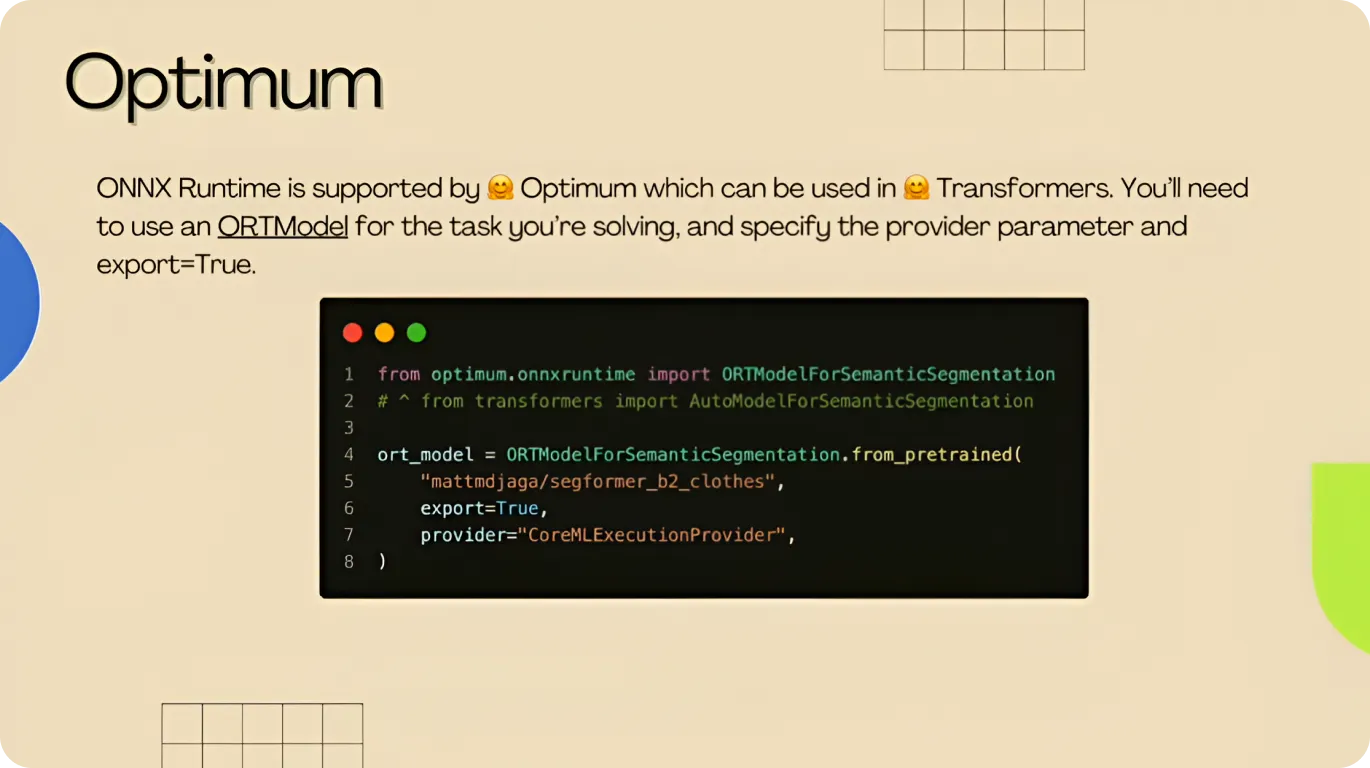

To further improve efficiency, Pavel introduced the Hugging Face Optimum library, a set of tools designed to optimize and deploy models. With just a few lines of code, developers can apply quantization techniques and convert models into efficient formats like ONNX (Open Neural Network Exchange), allowing them to run smoothly on different types of hardware, including cloud servers and edge devices.
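A minimal sketch of the ONNX conversion step might look like the following. It assumes Optimum is installed with its ONNX Runtime integration and that the checkpoint is an image classification model. The helper name `export_to_onnx` and the default output directory are hypothetical.

```python
from pathlib import Path


def export_to_onnx(checkpoint: str, output_dir: str = "onnx_model") -> Path:
    """Convert a Hub image classification checkpoint to ONNX and save it locally."""
    # Imported here so that defining the helper does not itself require optimum.
    from optimum.onnxruntime import ORTModelForImageClassification

    # export=True converts the PyTorch weights to ONNX while loading.
    model = ORTModelForImageClassification.from_pretrained(checkpoint, export=True)

    target = Path(output_dir)
    model.save_pretrained(target)  # writes the ONNX file and config to the directory
    return target
```

The saved directory can then be loaded again with the same class and run through ONNX Runtime, without PyTorch handling the forward pass.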

Finally, Pavel mentioned the benefits of Torch Compile, a feature in PyTorch that optimizes how AI models process data, making them run faster and more efficiently. Hugging Face integrates Torch Compile within its Transformers and Optimum libraries, letting developers take advantage of these performance improvements with minimal code changes.

By optimizing the model’s computation structure, Torch Compile can shorten inference times and raise frame rates, for example from 29 to 150 frames per second, without compromising accuracy or quality.
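One way to check such gains on your own model is to time it before and after compilation. The sketch below is an assumption-laden example rather than the benchmark from the talk: `measure_fps` and `compare_compiled` are hypothetical helpers, and the timing is only meaningful on CPU as written, since GPU timing would also need explicit synchronization.

```python
import time


def measure_fps(run_once, runs: int = 50, warmup: int = 5) -> float:
    """Call run_once repeatedly and return the average number of calls per second."""
    for _ in range(warmup):  # warm-up absorbs one-off costs such as compilation
        run_once()
    start = time.perf_counter()
    for _ in range(runs):
        run_once()
    elapsed = time.perf_counter() - start
    return runs / elapsed if elapsed > 0 else float("inf")


def compare_compiled(model, example_input):
    """Return (eager_fps, compiled_fps) for one PyTorch model and one input tensor."""
    # Imported here so that measure_fps stays usable without PyTorch installed.
    import torch

    model.eval()
    compiled = torch.compile(model)  # compilation happens lazily on the first call
    with torch.no_grad():
        eager_fps = measure_fps(lambda: model(example_input))
        compiled_fps = measure_fps(lambda: compiled(example_input))
    return eager_fps, compiled_fps
```

The first calls to a compiled model are slow because they trigger compilation, which is why the warm-up runs are excluded from the measurement.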

Moving on, Pavel briefly touched on how developers can extend and deploy Vision AI models using Hugging Face tools after selecting the right model and choosing the best approach for development.

For instance, developers can deploy interactive AI applications using Gradio and Streamlit. Gradio allows developers to create web-based interfaces for machine learning models, while Streamlit helps build interactive data applications with simple Python scripts.
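As an illustration of the Gradio route, the sketch below wraps an image classification pipeline in a small web interface. The checkpoint name and the helpers `to_label_scores` and `build_demo` are assumptions made for this example.

```python
def to_label_scores(predictions):
    """Convert pipeline output [{'label': ..., 'score': ...}] into {label: score}."""
    return {p["label"]: float(p["score"]) for p in predictions}


def build_demo(checkpoint: str = "google/vit-base-patch16-224"):
    """Create a Gradio interface that classifies an uploaded image."""
    # Imported here so that to_label_scores works without gradio or transformers.
    import gradio as gr
    from transformers import pipeline

    classifier = pipeline("image-classification", model=checkpoint)

    def predict(image):
        # The pipeline accepts a PIL image and returns a list of label/score dicts.
        return to_label_scores(classifier(image))

    return gr.Interface(
        fn=predict,
        inputs=gr.Image(type="pil"),
        outputs=gr.Label(num_top_classes=3),  # show the three most likely classes
    )
```

Running `build_demo().launch()` starts a local web server with an upload box and a ranked list of predicted labels.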

Pavel also pointed out, “You don’t need to start writing everything from scratch,” referring to the guides, training notebooks, and example scripts Hugging Face provides. These resources help developers quickly get started without having to build everything from the ground up.

Wrapping up his keynote, Pavel summarized the advantages of using Hugging Face Hub. He emphasized how it simplifies model management and collaboration. He also called attention to the availability of guides, notebooks, and tutorials, which can help both beginners and experts understand and implement AI models.

"There are lots of cool spaces already on the Hub. You can find similar ones, clone the shared code, modify a few lines, replace the model with your own, and push it back," he explained, encouraging developers to take advantage of the platform’s flexibility.

During his talk at YV24, Pavel shared how Hugging Face provides tools that support AI model training, optimization, and deployment. For example, tools like Transformers, Optimum, and Torch Compile can help developers enhance model performance.

As AI models become more efficient, advancements in quantization and edge deployment are making it easier to run them on resource-limited devices. These improvements, combined with tools like Hugging Face and advanced computer vision models like Ultralytics YOLO11, are key to building scalable, high-performance Vision AI applications.

Join our growing community! Explore our GitHub repository to learn about AI, and check out our YOLO licenses to start your Vision AI projects. Interested in innovations like computer vision in healthcare or computer vision in agriculture? Visit our solutions pages to discover more!