Learn why it's essential to approach AI ethically, how AI regulations are being handled worldwide, and what role you can play in promoting ethical AI use.

As Artificial Intelligence (AI) becomes more widespread, discussions about using it ethically have become very common. With many of us using AI-powered tools like ChatGPT every day, there’s good reason to ask whether we are adopting AI in a way that’s safe and morally sound. Data is at the root of all AI systems, and many AI applications use personal data like images of your face, financial transactions, health records, details about your job, or your location. Where does this data go, and how is it handled? These are some of the questions that ethical AI tries to answer and make AI users aware of.

When we discuss ethical issues related to AI, it’s easy to get carried away and jump to conclusions, imagining scenarios like The Terminator and robots taking over. In practice, however, the key to approaching ethical AI is straightforward: it’s all about building, implementing, and using AI in a manner that’s fair, transparent, and accountable. In this article, we’ll explore why AI needs to be ethical, how to create ethical AI innovations, and what you can do to promote the ethical use of AI. Let’s get started!

Before we dive into the specifics of ethical AI, let’s take a closer look at why it’s become such an essential topic of conversation in the AI community and what exactly it means for AI to be ethical.

Ethics in relation to AI isn’t a new topic of conversation; it has been debated since the 1950s. Around that time, Alan Turing introduced the concept of machine intelligence and the Turing Test, a measure of a machine's ability to exhibit human-like intelligence through conversation, which sparked early ethical discussions about AI. Since then, researchers have repeatedly emphasized the importance of considering the ethical aspects of AI and technology. However, only recently have organizations and governments started to create regulations that mandate ethical AI.

There are three main reasons for this:

With AI becoming more advanced and drawing more attention globally, a conversation about ethical AI has become inevitable.

To truly understand what it means for AI to be ethical, we need to analyze the challenges that ethical AI faces. These challenges cover a range of issues, including bias, privacy, accountability, and security. Some of these gaps have come to light as AI solutions were deployed with unfair practices, while others may emerge in the future.

Here are some of the key ethical challenges in AI:

By addressing these challenges, we can develop AI systems that benefit society.

Next, let’s walk through how to implement ethical AI solutions that handle each of the challenges mentioned above. By focusing on key areas like building unbiased AI models, educating stakeholders, prioritizing privacy, and ensuring data security, organizations can create AI systems that are both effective and ethical.

Creating unbiased AI models starts with using diverse and representative datasets for training. Regular audits and bias detection methods help identify and mitigate biases. Techniques like re-sampling or re-weighting can make the training data fairer. Collaborating with domain experts and involving diverse teams in development can also help recognize and address biases from different perspectives. These steps help prevent AI systems from favoring any particular group unfairly.
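To make re-weighting and bias detection a bit more concrete, here is a minimal Python sketch. It assumes each training sample carries a group label (for example, a demographic attribute) and that the model makes binary predictions. `group_weights` gives each sample a weight inversely proportional to its group's size, using the same `n_samples / (n_groups * group_count)` heuristic that scikit-learn applies for `class_weight="balanced"`, so every group carries the same total weight during training. `demographic_parity_gap` is just one common fairness check among several. Both function names are hypothetical and not part of any Ultralytics API.

```python
from collections import Counter


def group_weights(groups):
    """Weight each sample inversely to its group's frequency.

    Every group ends up with the same total weight (n_samples / n_groups),
    so under-represented groups aren't drowned out during training.
    """
    counts = Counter(groups)
    n_samples, n_groups = len(groups), len(counts)
    return [n_samples / (n_groups * counts[g]) for g in groups]


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups.

    Assumes binary predictions (1 = positive outcome, 0 = negative).
    """
    rates = {}
    for g in set(groups):
        group_preds = [p for p, grp in zip(predictions, groups) if grp == g]
        rates[g] = sum(group_preds) / len(group_preds)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    groups = ["a", "a", "a", "b"]
    print(group_weights(groups))  # "a" samples get ~0.67 each, the "b" sample gets 2.0

    predictions = [1, 1, 1, 0]
    print(demographic_parity_gap(predictions, groups))  # 1.0: "a" always positive, "b" never
```

Re-weighting keeps every original sample, whereas re-sampling would duplicate or drop samples to reach a similar balance. A small parity gap on its own doesn't prove a model is fair, so checks like this work best alongside regular audits and input from domain experts.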

AI is often described as a black box, but the more you know about how it works, the less daunting it becomes. That’s why it’s essential for everyone involved in an AI project to understand how the AI behind their application works. Stakeholders, including developers, users, and decision-makers, are better able to address the ethical implications of AI when they have a well-rounded understanding of key AI concepts. Training programs and workshops on topics like bias, transparency, accountability, and data privacy can build this understanding. Detailed documentation explaining AI systems and their decision-making processes can help build trust, and regular communication and updates about ethical AI practices can make them part of an organization’s culture.

Prioritizing privacy means developing robust policies and practices to protect personal data. AI systems should use data obtained with proper consent and apply data minimization techniques to limit the amount of personal information processed. Encryption and anonymization can further protect sensitive data.
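As an illustration of data minimization, the sketch below keeps only the fields a model actually needs and replaces the direct identifier with a keyed hash (HMAC-SHA256) from Python's standard library. The field names, the `ALLOWED_FIELDS` set, and the helper functions are hypothetical. Note that this is pseudonymization rather than full anonymization: under GDPR, pseudonymized data still counts as personal data, because it can be re-linked to individuals using additional information (here, the secret key).

```python
import hashlib
import hmac

# Hypothetical: the only fields the model actually needs (data minimization).
ALLOWED_FIELDS = {"age_band", "region"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    Without the secret key, tokens can't be recomputed from known IDs,
    which makes re-identification harder, though not impossible in general.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Drop every field not in ALLOWED_FIELDS and add a pseudonymous token."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    minimized["user_token"] = pseudonymize(record["user_id"], secret_key)
    return minimized


if __name__ == "__main__":
    # In practice, load the key from a secure secret store, never from source code.
    key = b"demo-key-for-illustration-only"
    raw = {
        "user_id": "user-123",
        "name": "Jane Doe",
        "face_image": b"...",
        "age_band": "30-39",
        "region": "EU",
    }
    # Only age_band, region, and a 64-character hex user_token remain.
    print(minimize_record(raw, key))
```

Because the same ID and key always produce the same token, records for one person can still be joined for training without exposing their name or face image.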

Compliance with data protection regulations, such as the GDPR (General Data Protection Regulation), is essential. The GDPR sets rules for collecting and processing the personal data of individuals in the European Union. Being transparent about how data is collected, used, and stored is also vital. Regular privacy impact assessments can identify potential risks and help keep privacy a priority.

In addition to privacy, data security is essential for building ethical AI systems. Strong cybersecurity measures protect data from breaches and unauthorized access. Regular security audits and updates are necessary to keep up with evolving threats.

AI systems should incorporate security features like access controls, secure data storage, and real-time monitoring. A clear incident response plan helps organizations quickly address any security issues. By showing a commitment to data security, organizations can build trust and confidence among users and stakeholders.
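Access controls and monitoring can start small. The sketch below is a hypothetical role-based access check in plain Python: the roles and permissions are made up for illustration, unknown roles are denied by default, and every attempt is written to an audit log that a monitoring system could watch. A production system would typically rely on the access-control features of its cloud platform or database rather than a hand-rolled dictionary.

```python
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("audit")

# Hypothetical roles and permissions for an AI system's data and models.
ROLE_PERMISSIONS = {
    "data_scientist": {"read_features"},
    "ml_engineer": {"read_features", "deploy_model"},
    "admin": {"read_features", "deploy_model", "read_raw_data"},
}


def authorize(user: str, role: str, action: str) -> bool:
    """Allow the action only if the role grants it; log every attempt."""
    # Unknown roles get an empty permission set, so they are denied by default.
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.info(
        "%s user=%s role=%s action=%s allowed=%s",
        datetime.now(timezone.utc).isoformat(),
        user,
        role,
        action,
        allowed,
    )
    return allowed


if __name__ == "__main__":
    print(authorize("alice", "data_scientist", "read_raw_data"))  # False
    print(authorize("bob", "admin", "read_raw_data"))  # True
```

Logging denied attempts as well as allowed ones is what makes the log useful for spotting unusual access patterns.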

At Ultralytics, ethical AI is a core principle that guides our work. As Glenn Jocher, Founder & CEO, puts it: "Ethical AI is not just a possibility; it's a necessity. By understanding and adhering to regulations, we can ensure that AI technologies are developed and used responsibly across the globe. The key is to balance innovation with integrity, ensuring that AI serves humanity in a positive and beneficial way. Let's lead by example and show that AI can be a force for good."

This philosophy drives us to prioritize fairness, transparency, and accountability in our AI solutions. By integrating these ethical considerations into our development processes, we aim to create technologies that push the boundaries of innovation and adhere to the highest standards of responsibility. Our commitment to ethical AI helps our work positively impact society and sets a benchmark for responsible AI practices worldwide.

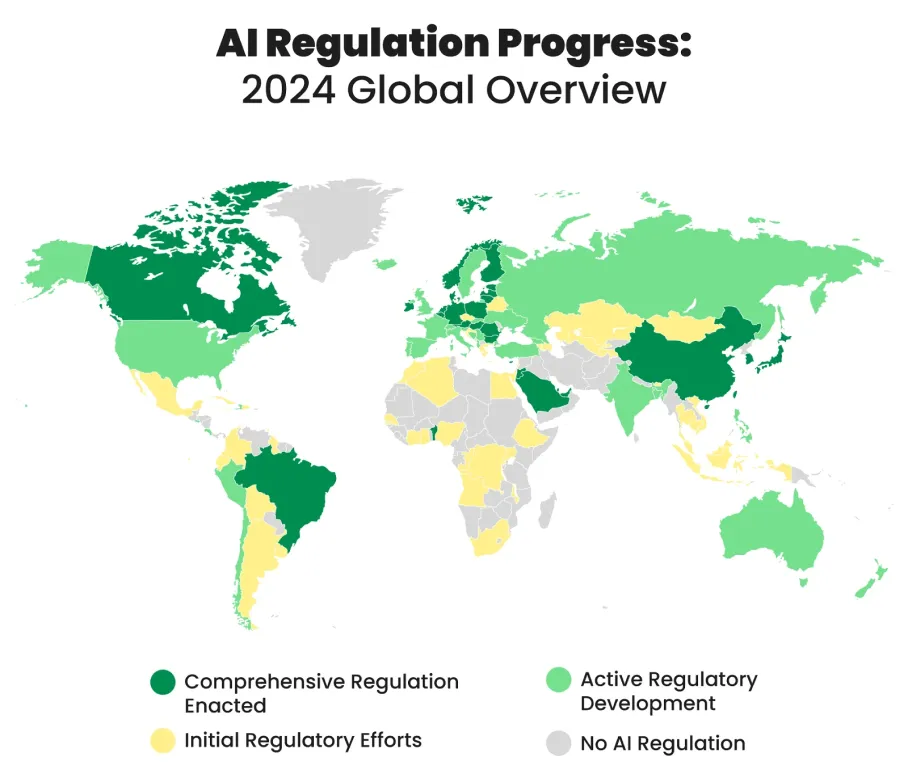

Countries around the world are developing and implementing AI regulations to guide the ethical and responsible use of AI technologies. These regulations aim to balance innovation with ethical considerations and to protect individuals and society from the potential risks of AI.

Here are some examples of steps taken around the world towards regulating the use of AI:

Promoting ethical AI is easier than you might think. By learning more about issues like bias, transparency, and privacy, you can become an active voice in the conversation surrounding ethical AI. Support and follow ethical guidelines, regularly check AI systems for fairness, and protect data privacy. When you use AI tools like ChatGPT, being transparent about that use helps build trust and encourages more ethical AI use. By taking these steps, you can help promote AI that is developed and used fairly, transparently, and responsibly.

At Ultralytics, we are committed to ethical AI. If you want to read more about our AI solutions and see how we maintain an ethical mindset, check out our GitHub repository, join our community, and explore our latest solutions in industries like healthcare and manufacturing! 🚀