Learn about RCNN and its impact on object detection. We'll cover its key components, applications, and role in advancing techniques like Fast RCNN and YOLO.

Learn about RCNN and its impact on object detection. We'll cover its key components, applications, and role in advancing techniques like Fast RCNN and YOLO.

Object detection is a computer vision task that can recognize and locate objects in images or videos for applications like autonomous driving, surveillance, and medical imaging. Earlier object detection methods, such as the Viola-Jones detector and Histogram of Oriented Gradients (HOG) with Support Vector Machines (SVM), relied on handcrafted features and sliding windows. These methods often struggled to accurately detect objects in complex scenes with multiple objects of various shapes and sizes.

Region-based Convolutional Neural Networks (R-CNN) have changed how we tackle object detection. It is an important milestone in computer vision history. To understand how models like YOLOv8 came around, we need to first understand models like R-CNN.

Created by Ross Girshick and his team, the R-CNN model architecture generates region proposals, extracts features with a pre-trained Convolutional Neural Network (CNN), classifies objects, and refines bounding boxes. While that might seem daunting, by the end of this article, you'll have a clear understanding of how R-CNN works and why it's so impactful. Let's take a look!

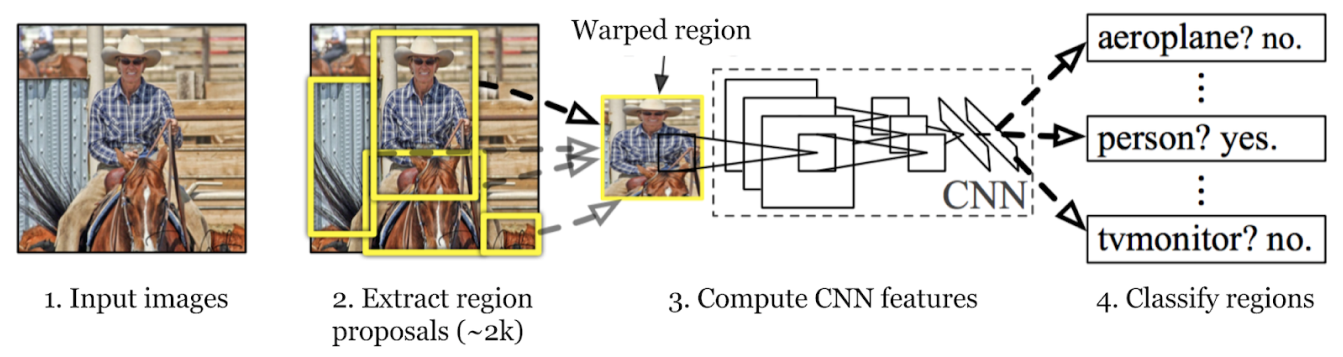

The R-CNN model's object detection process involves three main steps: generating region proposals, extracting features, and classifying objects while refining their bounding boxes. Let’s walk through each step.

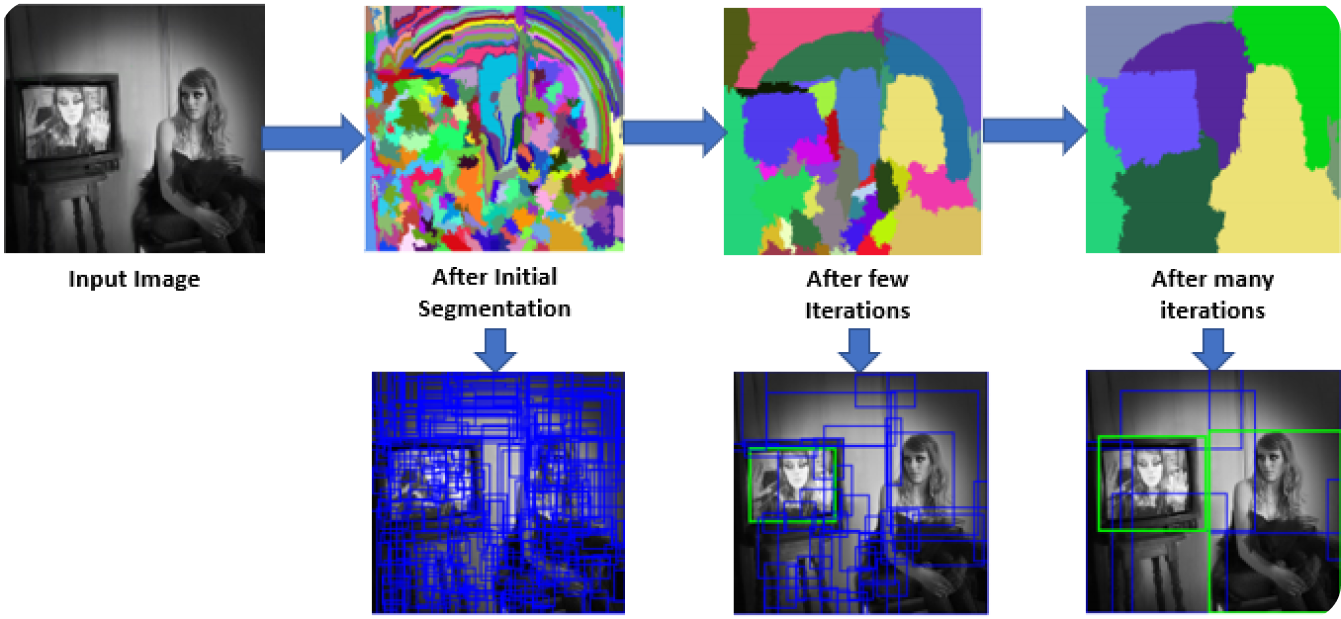

In the first step, the R-CNN model scans the image to create numerous region proposals. Region proposals are potential areas that might contain objects. Methods like Selective Search are used to look at various aspects of the image, such as color, texture, and shape, breaking it down into different parts. Selective Search starts by dividing the image into smaller parts, then merging similar ones to form larger areas of interest. This process continues until about 2,000 region proposals are generated.

These region proposals help identify all the possible spots where an object might be present. In the following steps, the model can efficiently process the most relevant areas by focusing on these specific areas rather than the entire image. Using region proposals balances thoroughness with computational efficiency.

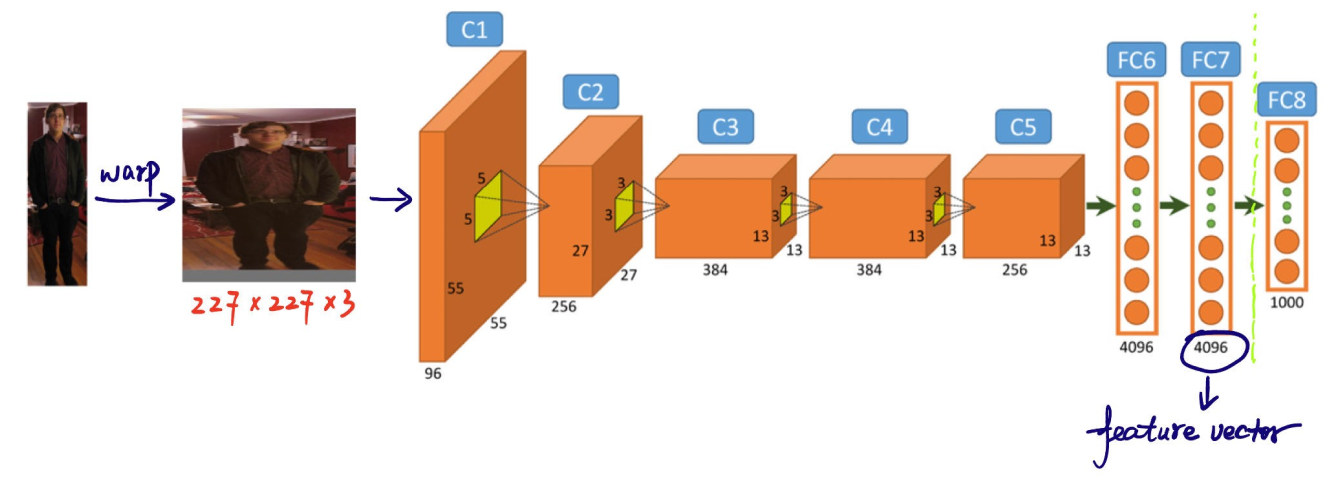

The next step in the R-CNN model's object detection process is to extract features from region proposals. Each region proposal is resized to a consistent size that the CNN expects (for example, 224x224 pixels). Resizing helps the CNN process each proposal efficiently. Before warping, the size of each region proposal is expanded slightly to include 16 pixels of additional context around the region to provide more surrounding information for better feature extraction.

Once resized, these region proposals are fed into a CNN like AlexNet, which is usually pre-trained on a large dataset like ImageNet. The CNN processes each region to extract high-dimensional feature vectors that capture important details such as edges, textures, and patterns. These feature vectors condense the essential information from the regions. They transform the raw image data into a format the model can use for further analysis. Accurately classifying and locating objects in the next stages depends on this crucial conversion of visual information into meaningful data.

The third step is to classify the objects within these regions. This means determining the category or class of each object found within the proposals. The extracted feature vectors are then passed through a machine learning classifier.

In the case of R-CNN, Support Vector Machines (SVMs) are commonly used for this purpose. Each SVM is trained to recognize a specific object class by analyzing the feature vectors and deciding whether a particular region contains an instance of that class. Essentially, for every object category, there is a dedicated classifier checking each region proposal for that specific object.

During training, the classifiers are given labeled data with positive and negative samples:

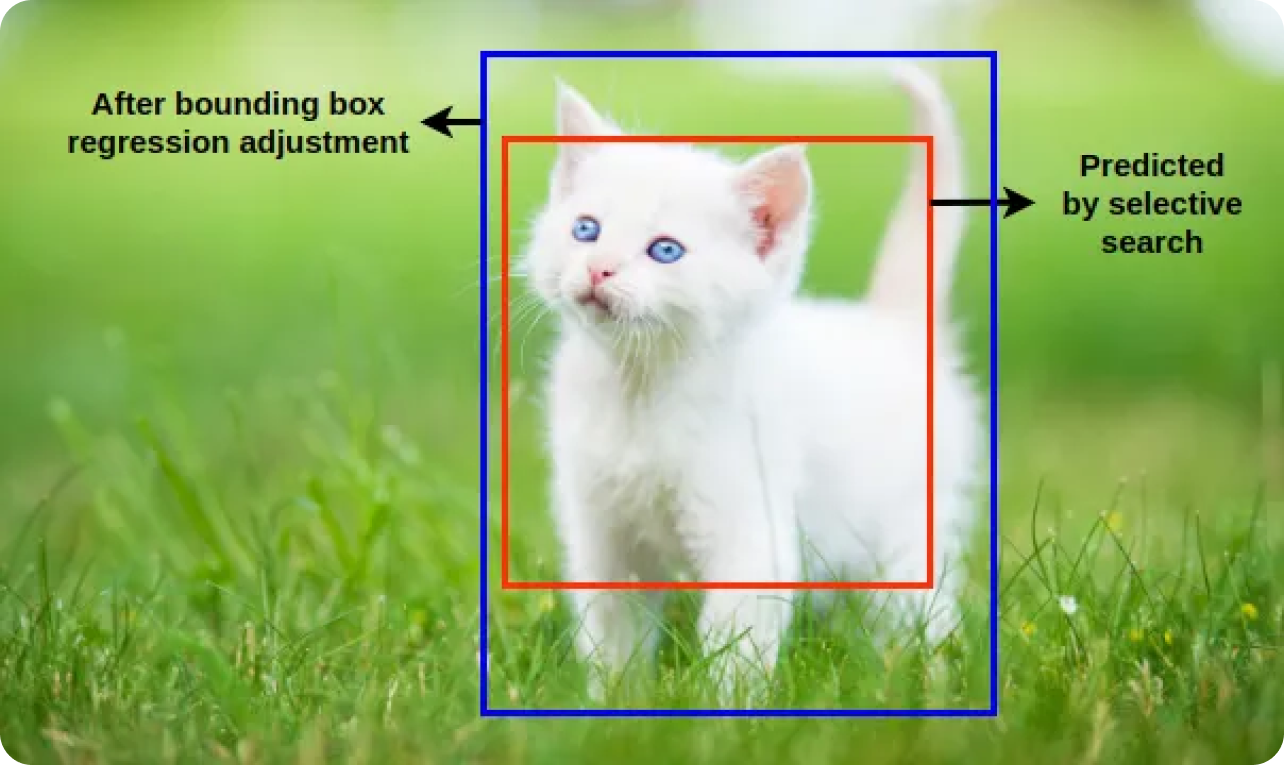

The classifiers learn to distinguish between these samples. Bounding box regression further refines the position and size of detected objects by adjusting the initially proposed bounding boxes to better match the actual object boundaries. The R-CNN model can identify and accurately locate objects by combining classification and bounding box regression.

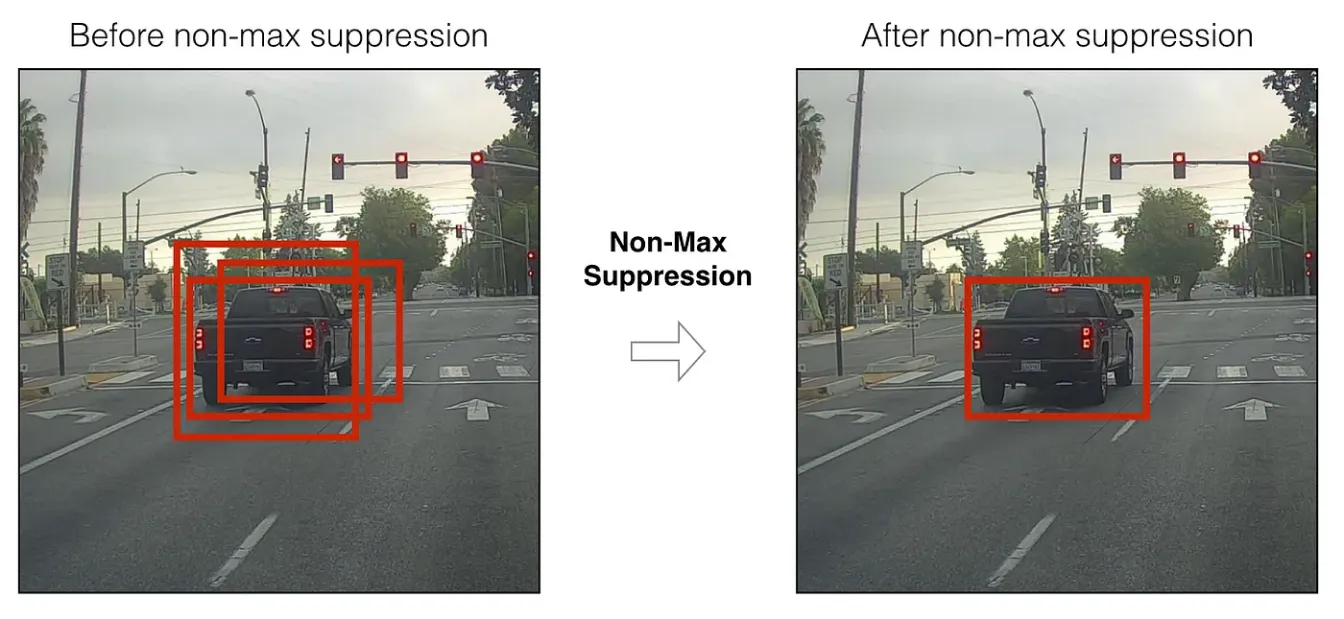

After the classification and bounding box regression steps, the model often generates multiple overlapping bounding boxes for the same object. Non-Maximum Suppression (NMS) is applied to refine these detections, keeping the most accurate boxes. The model eliminates redundant and overlapping boxes by applying NMS and keeps only the most confident detections.

NMS works by evaluating the confidence scores ( indicating how likely a detected object is actually present) of all bounding boxes and suppressing those that significantly overlap with higher-scoring boxes.

Here’s a breakdown of the steps in NMS:

To put it all together, The R-CNN model detects objects by generating region proposals, extracting features with a CNN, classifying objects and refining their positions with bounding box regression, and using Non-Maximum Suppression (NMS) keeping only the most accurate detections.

R-CNN is a landmark model in the history of object detection because it introduced a new approach that greatly improved accuracy and performance. Before R-CNN, object detection models struggled to balance speed and precision. R-CNN's method of generating region proposals and using CNNs for feature extraction allow for precise localization and identification of objects within images.

R-CNN paved the way for models like Fast R-CNN, Faster R-CNN, and Mask R-CNN, which further enhanced efficiency and accuracy. By combining deep learning with region-based analysis, R-CNN set a new standard in the field and opened up possibilities for various real-world applications.

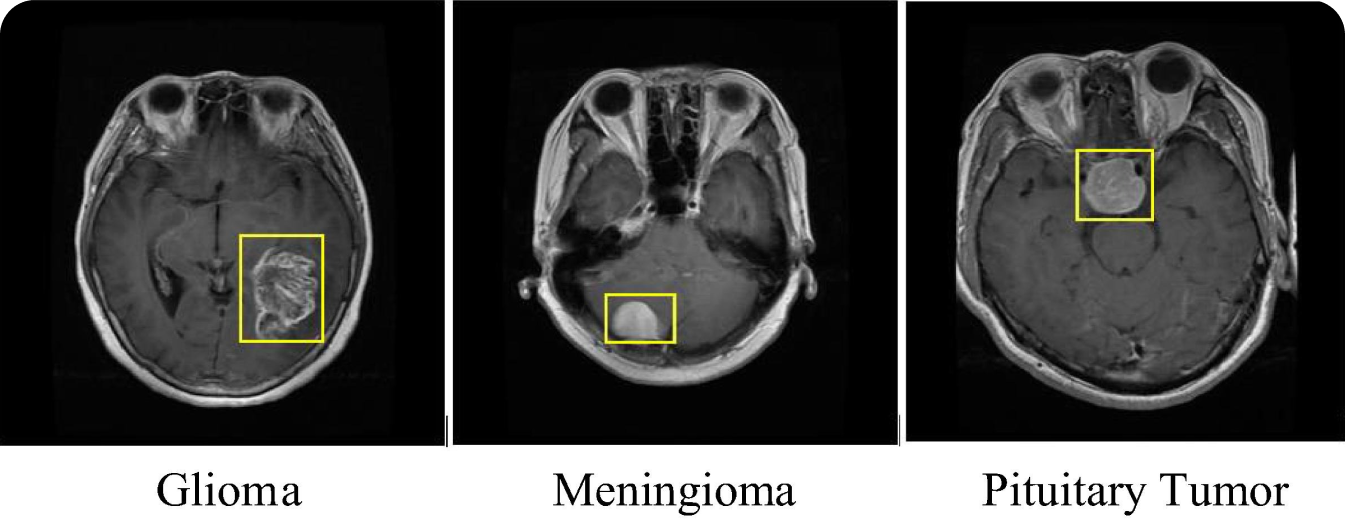

An interesting use case of R-CNN is in medical imaging. R-CNN models have been used to detect and classify different types of tumors, such as brain tumors, in medical scans such as MRIs and CT scans. Using the R-CNN model in medical imaging improves diagnostic accuracy and helps radiologists identify malignancies at an early stage. R-CNN's ability to detect even small and early-stage tumors can make a significant difference in the treatment and prognosis of diseases like cancer.

The R-CNN model can be applied to other medical imaging tasks in addition to tumor detection. For instance, it can identify fractures, detect retinal diseases in eye scans, and analyze lung images for conditions like pneumonia and COVID-19. Regardless of the medical issue, early detection can lead to better patient outcomes. By applying R-CNN's precision in identifying and localizing anomalies, healthcare providers can improve the reliability and speed of medical diagnostics. With object detection streamlining the diagnosis process, patients can benefit from timely and accurate treatment plans.

While impressive, R-CNN has certain drawbacks, like high computational complexity and slow inference times. These drawbacks make the R-CNN model unsuitable for real-time applications. Separating region proposals and classifications into distinct steps, can lead to less efficient performance.

Over the years, various object detection models have come out that have addressed these concerns. Fast R-CNN combines region proposals and CNN feature extraction into a single step, speeding up the process. Faster R-CNN introduces a Region Proposal Network (RPN) to streamline proposal generation, while Mask R-CNN adds pixel-level segmentation for more detailed detections.

Around the same time as Faster R-CNN, the YOLO (You Only Look Once) series began advancing real-time object detection. YOLO models predict bounding boxes and class probabilities in a single pass through the network. For example, the Ultralytics YOLOv8 offers improved accuracy and speed with advanced features for many computer vision tasks.

RCNN changed the game in computer vision, showing how deep learning can change object detection. Its success inspired many new ideas in the field. Even though newer models like Faster R-CNN and YOLO have come up to fix RCNN's flaws, its contribution is a huge milestone that’s important to remember.

As research keeps going, we'll see even better and faster object detection models. These advancements will not only improve how machines understand the world but also lead to progress in many industries. The future of object detection looks exciting!

Want to keep exploring about AI? Become part of the Ultralytics community! Explore our GitHub repository to see our latest artificial intelligence innovations. Check out our AI solutions spanning various sectors like agriculture and manufacturing. Join us to learn and advance!