Perceptrons and Neural Networks: Basic Principles of Computer Vision

Learn how neural networks are changing modern technology, from quality control in supply chains to autonomous utility inspections with drones.

Over the past few decades, neural networks have become the building blocks of many important innovations in artificial intelligence (AI). Neural networks are computational models that attempt to mimic the complex functions of the human brain. They help machines learn from data and recognize patterns in order to make informed decisions. In doing so, they enable subfields of AI such as computer vision and deep learning in sectors such as healthcare, finance, and self-driving cars.

Understanding how a neural network works can give you a better picture of the "black box" of AI and help demystify how cutting-edge technology is integrated into our daily lives. In this article, we will explore what neural networks are, how they work, and how they have evolved over the years. We will also look at the role they play in computer vision applications. Let's get started!

Before we discuss neural networks in detail, let's take a look at perceptrons. They are the simplest type of neural network and the foundation for building more complex models.

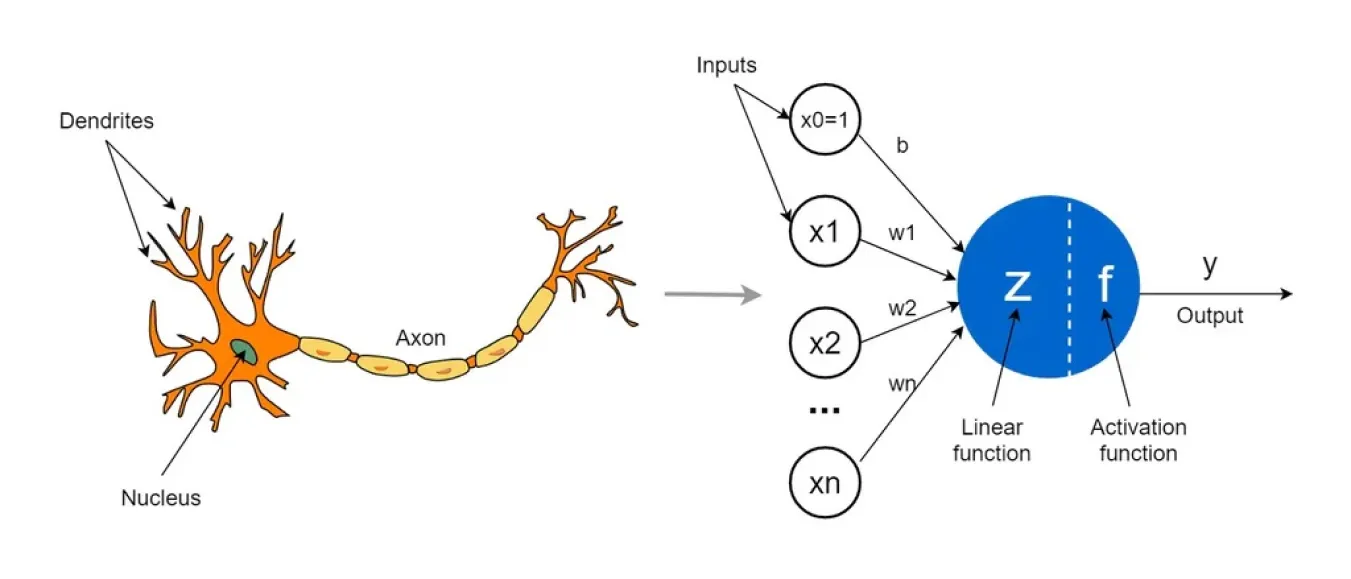

A perceptron is a linear machine learning algorithm used for supervised learning (learning from labeled training data). It is also known as a single-layer neural network and is typically used for binary classification tasks, which distinguish between two classes of data. You can picture a perceptron as a single artificial neuron.

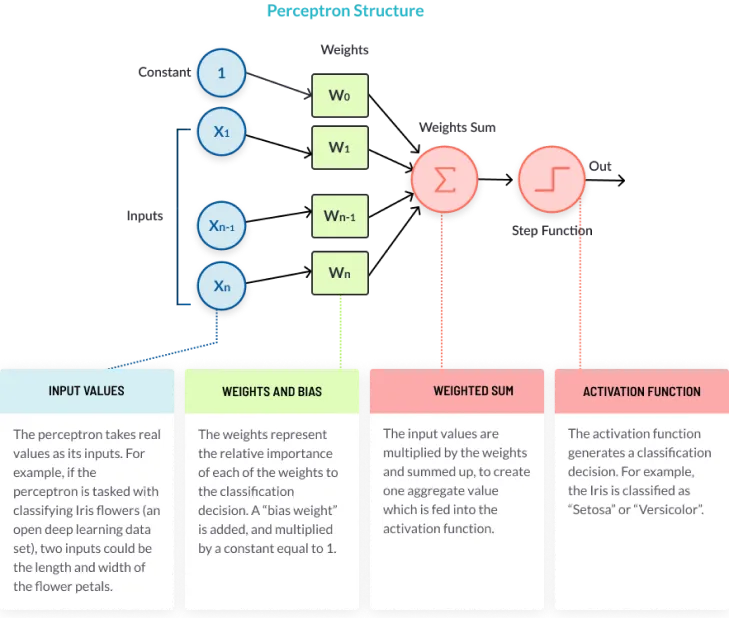

A perceptron can take in several inputs, combine them with weights, decide which category they belong to, and act as a simple decision-maker. It consists of four main components: the input values (also called nodes), the weights and bias, the net sum, and an activation function.

Here is how it works:

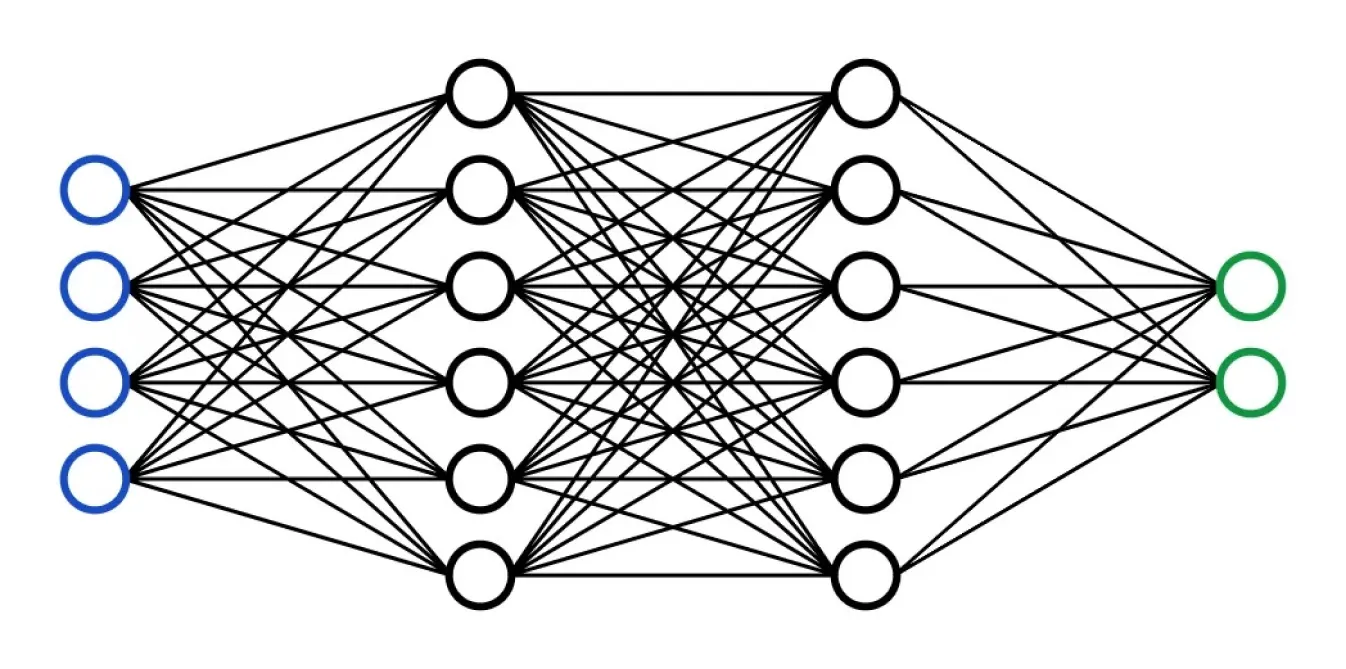
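The four components named above can be sketched in a few lines of plain Python. This is a minimal illustration and not code from the article: the function names, the step activation, the learning rate, and the logical AND dataset are all assumptions chosen to keep the example small. The training loop uses the classic perceptron learning rule, which nudges the weights and bias whenever a prediction is wrong.

```python
def step(z):
    """Step activation: output 1 when the net sum is non-negative, else 0."""
    return 1 if z >= 0 else 0


def perceptron_output(inputs, weights, bias):
    """Net sum (weighted inputs plus bias) passed through the activation."""
    net_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return step(net_sum)


def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Perceptron learning rule; lr and epochs are illustrative defaults."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - perceptron_output(x, weights, bias)
            # Move the decision boundary only when the prediction was wrong.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy binary classification task (assumed for illustration): logical AND.
AND_INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_LABELS = [0, 0, 0, 1]

weights, bias = train_perceptron(AND_INPUTS, AND_LABELS)
predictions = [perceptron_output(x, weights, bias) for x in AND_INPUTS]
print(predictions)
```

Logical AND is linearly separable, so a single perceptron can learn it. The learned weights and bias define a straight line that separates the one positive example from the three negative ones.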

Perceptrons play an important role in understanding the fundamentals of computer vision. They are the basis of advanced neural networks. Unlike perceptrons, neural networks are not limited to a single layer. They consist of multiple layers of interconnected perceptrons, which allows them to learn complex, nonlinear patterns. Neural networks can handle more complex tasks and produce both binary and continuous outputs. For example, neural networks can be used for advanced computer vision tasks such as instance segmentation and pose estimation.
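A small, well-known example of why stacking layers matters is the XOR function: its two classes cannot be separated by a single straight line, so one perceptron cannot represent it, but two layers of perceptrons can. The sketch below uses hand-picked weights (an assumption for illustration, not learned values) and hypothetical helper names.

```python
def step(z):
    """Step activation: output 1 when the net sum is non-negative, else 0."""
    return 1 if z >= 0 else 0


def neuron(inputs, weights, bias):
    """A single perceptron: weighted sum plus bias, then step activation."""
    return step(sum(x * w for x, w in zip(inputs, weights)) + bias)


def xor_network(x1, x2):
    """Two-layer network with hand-picked weights that computes XOR."""
    # Hidden layer: one neuron acts as OR, the other as NAND.
    h_or = neuron((x1, x2), (1, 1), -1)
    h_nand = neuron((x1, x2), (-1, -1), 1)
    # Output layer: AND of the two hidden activations.
    return neuron((h_or, h_nand), (1, 1), -2)


xor_outputs = [xor_network(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(xor_outputs)
```

Each hidden neuron still draws only a single line, but the output neuron combines the two lines into a nonlinear decision region. In practice such weights are learned from data instead of being set by hand.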

The history of neural networks goes back several decades and is full of research and interesting discoveries. Let's take a closer look at some of these key events.

Here is a brief overview of the early milestones:

As we entered the 21st century, research on neural networks picked up speed and led to even greater advances. In the 2000s, Hinton's work on restricted Boltzmann machines, a type of neural network that finds patterns in data, played a key role in advancing deep learning. It made training deep networks easier, helped overcome challenges with complex models, and made deep learning more practical and effective.

In the 2010s, research accelerated rapidly thanks to the rise of big data and parallel computing. A highlight of this period was AlexNet's win in the ImageNet competition (2012). AlexNet, a deep convolutional neural network, was a major breakthrough because it showed how powerful deep learning can be for computer vision tasks such as accurately recognizing images. It helped drive the rapid growth of AI in visual recognition.

Today, neural networks continue to evolve with innovations such as transformers, which excel at understanding sequences, and graph neural networks, which work well with complex relationships in data. Techniques such as transfer learning (using a model trained for one task on another) and self-supervised learning, in which models learn without labeled data, are also expanding what neural networks can do.

Now that we have covered the basics, let's understand what exactly a neural network is. Neural networks are a type of machine learning model that uses interconnected nodes, or neurons, in a layered structure resembling the human brain. These nodes process data and learn from it, which allows them to perform tasks such as pattern recognition. Neural networks are also adaptive, so they can learn from their mistakes and improve over time. This enables them to solve complex problems, such as facial recognition, more accurately.

Neural networks consist of multiple processors that work in parallel and are organized in layers. They are made up of an input layer, an output layer, and several hidden layers in between. The input layer receives raw data, much like our optic nerves take in visual information.

Each layer then passes its output on to the next, rather than working directly with the original input, much like neurons in the brain send signals from one to another. The final layer produces the network's output. Through this process, an artificial neural network (ANN) can learn to perform computer vision tasks such as image classification.
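The layer-by-layer flow described above can be sketched as a simple forward pass. Everything in this example is an illustrative assumption: the sigmoid activation, the function names, the layer sizes, and the fixed weights, which a real network would learn during training.

```python
import math


def sigmoid(z):
    """Smooth activation that squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: each row of weights feeds one neuron."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the data through every layer; each layer sees only the previous output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical network: 3 inputs -> 2 hidden neurons -> 1 output neuron.
LAYERS = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                              # output layer
]

output = forward([1.0, 0.5, -1.0], LAYERS)
print(output)
```

The hidden layer never hands the raw input onward. The output neuron only receives the two hidden activations, which is the same hand-off from layer to layer that the text describes.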

Now that we understand what neural networks are and how they work, let's look at an application that demonstrates the potential of neural networks in computer vision.

Neural networks form the foundation of computer vision models such as Ultralytics YOLO11 and can be used for visual inspection of power lines with drones. The utility industry faces logistical challenges when it comes to inspecting and maintaining its extensive networks of power lines. These lines often stretch from busy urban areas to remote, rugged landscapes. Traditionally, these inspections were carried out by ground crews. While these manual methods are effective, they are also costly and time-consuming and can expose workers to environmental and electrical hazards. Research shows that utility line work is among the ten most dangerous occupations in America, with an annual fatality rate of 30 to 50 per 100,000 workers.

Drone inspection technology, however, can make aerial inspections a practical and cost-effective option. Thanks to state-of-the-art technology, drones can fly longer distances without frequent battery changes during an inspection. Many drones are now equipped with AI and offer automatic obstacle avoidance and better fault detection. These capabilities let them inspect crowded areas with many power lines and capture high-quality images from greater distances. Many countries are adopting drones and computer vision for power line inspection. In Estonia, for example, 100% of power line inspections are carried out with such drones.

Neural networks have come a long way from research to applications and have become an important part of modern technological advances. They enable machines to learn, recognize patterns, and make informed decisions based on what they have learned. From healthcare and finance to autonomous vehicles and manufacturing, these networks are driving innovation and transforming industries. As we continue to explore and refine neural network models, their potential to redefine even more aspects of our daily lives and business operations is becoming increasingly clear.

To learn more, visit our GitHub repository and engage with our community. Explore AI applications in manufacturing and agriculture on our solutions pages. 🚀