Join us for a deep dive into AI's role in music, from analyzing audio data to generating new music. Explore its impact and applications in the music industry.


Artificial Intelligence (AI) is all about recreating human intelligence in machines. An important part of being human is our connection with the arts, especially music. Music deeply influences our culture and emotions. Thanks to advances in AI, machines can now create music that sounds like it was composed by humans. AI music opens up new possibilities for innovative collaborations between humans and AI and transforms the way we experience and interact with music.

In this article, we'll explore how AI is used to create music and how it affects artists, listeners, producers, and the entertainment industry overall. We'll also discuss the connection between AI and music tagging tools like MusicBrainz Picard.

AI can handle many types of data, including sound. Sound data, often called audio data, is a wave that can be described as a mix of frequencies at different intensities, changing over time. Just like images or time series data, audio data can be converted into numbers that AI models can process and analyze: the wave's amplitude is measured (sampled) thousands of times per second, producing a long sequence of numeric values.

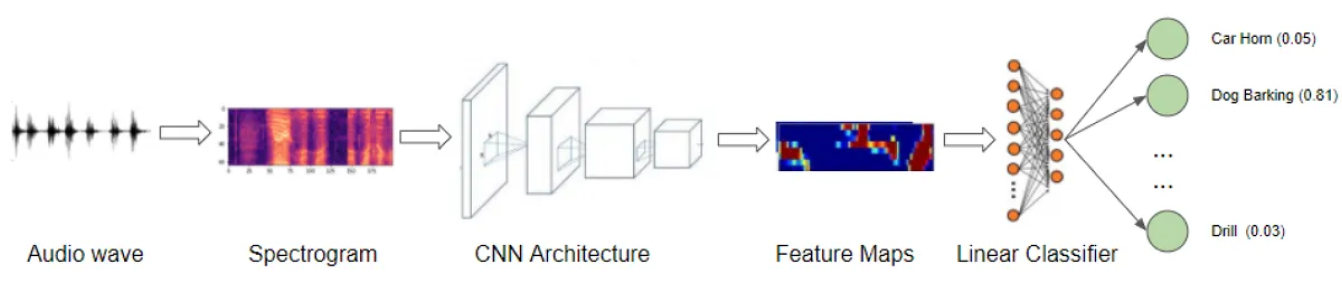
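To make the idea of converting a sound wave into numbers concrete, here is a minimal NumPy sketch. As an assumption for illustration, it synthesizes a tone that mixes two frequencies instead of loading a recorded file. The 44,100 Hz sample rate is the standard rate for CD-quality audio; the helper name `sample_tone` is hypothetical.

```python
import numpy as np

SAMPLE_RATE = 44_100  # samples per second (CD-quality audio)

def sample_tone(freqs_hz, amps, duration_s=1.0, sample_rate=SAMPLE_RATE):
    """Return a 1-D array of amplitudes: a mix of sine waves sampled at discrete times."""
    t = np.arange(int(duration_s * sample_rate)) / sample_rate  # sample times in seconds
    signal = np.zeros_like(t)
    for f, a in zip(freqs_hz, amps):
        signal += a * np.sin(2 * np.pi * f * t)  # add one frequency component
    return signal

# An A4 (440 Hz) plus a quieter E5 (about 659.26 Hz), half a second long
wave = sample_tone([440.0, 659.26], [1.0, 0.5], duration_s=0.5)
print(wave.shape)  # (22050,): one number per sample
print(wave[:3])    # the first few amplitude values
```

Each element of `wave` is one amplitude measurement, which is the raw numeric form many audio models start from.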

Another common method is to apply a Fourier transform to short, overlapping slices of the signal (a short-time Fourier transform), which converts the sound wave into a spectrogram. A spectrogram is a visual representation of how the strength of different frequencies varies over time. By treating the spectrogram like an image, AI models can apply image recognition techniques to analyze and interpret the audio, identifying patterns and features within the sound much as they would with visual data.
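The sketch below computes a magnitude spectrogram with a short-time Fourier transform in plain NumPy. It is an illustration under assumptions: a synthetic signal that jumps from 440 Hz to 880 Hz halfway through, a 1,024-sample Hann window, and a 512-sample hop. Dedicated audio libraries offer tuned equivalents. The resulting 2-D array (time by frequency) is what an image-style model would receive, often after log scaling.

```python
import numpy as np

def spectrogram(signal, frame_size=1024, hop=512):
    """Magnitude spectrogram via a short-time Fourier transform (STFT).

    Returns an array of shape (num_frames, frame_size // 2 + 1):
    rows are time steps, columns are frequency bins.
    """
    window = np.hanning(frame_size)  # taper each slice to reduce spectral leakage
    num_frames = 1 + (len(signal) - frame_size) // hop
    frames = np.stack([signal[i * hop : i * hop + frame_size] * window
                       for i in range(num_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))  # FFT of every slice

sr = 16_000
t = np.arange(sr) / sr  # one second of audio
# First half second at 440 Hz, second half at 880 Hz
signal = np.where(t < 0.5, np.sin(2 * np.pi * 440 * t), np.sin(2 * np.pi * 880 * t))

spec = spectrogram(signal)
freqs = np.fft.rfftfreq(1024, d=1 / sr)  # frequency (Hz) of each column
print(spec.shape)
# Strongest frequency in the first and last time step
print(freqs[spec[0].argmax()], freqs[spec[-1].argmax()])
```

The frequency resolution here is 16,000 / 1,024 = 15.625 Hz per bin, so the detected peaks land on the bins nearest 440 Hz and 880 Hz.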

Using AI to analyze, manipulate, and generate audio data opens up a wide range of applications. Here are some examples:

AI song generators work by analyzing and learning from existing music, much as image generators learn from existing images. It's important to understand the difference between using AI to understand music and using AI to generate it: understanding music involves analyzing it and identifying patterns, while generating music involves creating new compositions based on those learned patterns.


The AI music generation process starts with collecting a large dataset of music that includes various genres and styles. The dataset is then broken down into smaller components like notes, chords, and rhythms, which are converted into numerical data that the AI can process.
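As a minimal sketch of the "convert notes into numbers" step, the hypothetical encoding below maps each note name to its MIDI pitch number (60 is middle C) and combines it with a duration from a small, assumed set of note lengths into one integer token. Real systems typically use richer event vocabularies that also cover chords, velocity, and timing.

```python
# Hypothetical vocabulary: MIDI pitch number combined with a duration index.
NOTE_OFFSETS = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
                "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}
DURATIONS = [0.25, 0.5, 1.0, 2.0]  # assumed set of allowed note lengths, in beats

def note_to_midi(name: str) -> int:
    """Convert a note name like 'C4' or 'F#3' (single-digit octave) to its MIDI number."""
    pitch, octave = name[:-1], int(name[-1])
    return 12 * (octave + 1) + NOTE_OFFSETS[pitch]

def encode(melody):
    """Turn (note_name, duration) pairs into integer tokens."""
    return [note_to_midi(n) * len(DURATIONS) + DURATIONS.index(d) for n, d in melody]

def decode(tokens):
    """Invert encode(): return (midi_pitch, duration) pairs."""
    return [(t // len(DURATIONS), DURATIONS[t % len(DURATIONS)]) for t in tokens]

melody = [("C4", 1.0), ("E4", 1.0), ("G4", 2.0)]  # a C major arpeggio
tokens = encode(melody)
print(tokens)          # [242, 258, 271]
print(decode(tokens))  # [(60, 1.0), (64, 1.0), (67, 2.0)]
```

Because the encoding is reversible, a model's predicted tokens can be decoded back into playable notes.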

There are many different generative AI models that can be trained to generate music, and some systems combine several architectures. For example, Transformers and Variational Autoencoders (VAEs) can work together. A VAE compresses input sounds into a latent space, a compact numerical representation in which similar pieces of music sit close together, which helps capture the diversity and richness of music. A Transformer can then use this latent space to generate new music, learning patterns in the sequence and using attention to focus on the most relevant notes.
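The "focusing on important notes" part refers to attention, the core operation inside a Transformer. The NumPy sketch below implements scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, over a toy sequence of random vectors that stand in for note embeddings or VAE latent codes. It is an illustration only: a real model learns separate query, key, and value projections and stacks many such layers, and the VAE part is not shown.

```python
import numpy as np

def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = queries.shape[-1]
    scores = queries @ keys.T / np.sqrt(d)  # (seq, seq) similarity scores
    if causal:
        # When generating, each note may only attend to itself and earlier notes
        future = np.triu(np.ones(scores.shape, dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)        # each row sums to 1
    return weights @ values, weights

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8  # 4 notes, each represented by an 8-number vector
x = rng.normal(size=(seq_len, d_model))

# Toy setup: the same vectors serve as queries, keys, and values
# (a trained Transformer would first apply learned projection matrices).
out, weights = attention(x, x, x, causal=True)
print(out.shape)         # (4, 8)
print(weights.round(2))  # row i: how strongly note i attends to notes 0..i
```

The causal mask mirrors note-by-note generation, where the model must not look at notes it has not produced yet.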

Once trained on this data, the model can generate new music by predicting the next note or chord based on what it has learned, and it can create entire compositions by stringing these predictions together. The generated music can then be fine-tuned to match specific styles or preferences.
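Production systems use neural networks such as Transformers for next-note prediction. To show just the predict, sample, and append loop, the sketch below swaps in a much simpler technique: a first-order Markov chain that learns which note tends to follow which from a tiny, made-up corpus. The function names are hypothetical.

```python
import random
from collections import Counter, defaultdict

def train_bigram(melodies):
    """Count how often each note follows each other note."""
    counts = defaultdict(Counter)
    for melody in melodies:
        for prev, nxt in zip(melody, melody[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, seed=None):
    """Build a melody one note at a time by sampling the next note from learned counts."""
    rng = random.Random(seed)
    melody = [start]
    for _ in range(length - 1):
        options = counts.get(melody[-1])
        if not options:  # no continuation was ever seen for this note
            break
        notes, weights = zip(*options.items())
        melody.append(rng.choices(notes, weights=weights)[0])  # "predict" the next note
    return melody

corpus = [
    ["C", "E", "G", "E", "C"],
    ["C", "D", "E", "F", "G"],
    ["G", "E", "C", "D", "E"],
]
model = train_bigram(corpus)
print(generate(model, "C", 8, seed=42))
```

A neural model replaces the count table with learned probabilities conditioned on much longer context, but the generation loop has the same shape.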

We are starting to see more music generators using this technology. Here are some examples:

AI innovation is creating new opportunities and challenges for musicians, listeners, and producers, many of which they have not faced before. Each group is adapting in its own way, adopting new tools while navigating concerns about originality and ethics. Beyond generating music, AI has potential elsewhere in the music industry, such as enhancing live performances, improving music discovery, and assisting with production. Let's take a closer look at how AI is affecting musicians, listeners, and producers.


AI is changing the way musicians create music. Tools built on generative AI can suggest new melodies, chord progressions, and lyrics, making it easier for musicians to overcome creative blocks. AI has also been used to complete unfinished works, such as The Beatles' song "Now and Then," which was finished using John Lennon's vocals isolated from an old demo recording. However, the rise of AI-generated music that mimics the style of established artists raises concerns about originality. For example, artists like Bad Bunny have voiced concerns about AI replicating their voices and styles without consent.

Beyond creating music, AI and computer vision can help musicians put together better performances and music videos. A music video consists of many different elements, and one of them is dance. Pose estimation models like Ultralytics YOLOv8 can locate key body points (such as shoulders, elbows, and knees) in images and videos, which can help in creating choreographed dance sequences that are synchronized with music.

Another good example of AI-assisted choreography is NVIDIA's "Dancing to Music" project. The researchers used a two-step process to generate new dance moves that are diverse, consistent in style, and on the beat. First, pose estimation and a kinematic beat detector were used to learn a variety of on-the-beat dance movements from a large collection of dance videos. Then, a generative AI model organized these movements into choreography that matched the rhythm and style of the music. AI-choreographed dance moves add an interesting visual element to music videos and give artists another creative tool.
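The project's actual kinematic beat detector is more involved; the sketch below is a simplified, hypothetical stand-in. It treats frames where a dancer's joints momentarily slow down (for example, at a change of direction) as "kinematic beats" and checks how well they line up with the music's beat grid. The keypoints are synthetic; in practice they would come from running a pose estimation model on each video frame. The tempo (120 BPM) and frame rate (30 fps) are assumptions.

```python
import numpy as np

def kinematic_beats(keypoints):
    """Frames where the dancer's mean joint speed hits a local minimum.

    keypoints: array (num_frames, num_joints, 2) of x, y coordinates per frame.
    """
    velocity = np.gradient(keypoints, axis=0)               # per-frame motion of each joint
    speed = np.linalg.norm(velocity, axis=-1).mean(axis=1)  # mean joint speed per frame
    # A frame is a local minimum if it is slower than both of its neighbours
    is_min = (speed[1:-1] < speed[:-2]) & (speed[1:-1] < speed[2:])
    return np.nonzero(is_min)[0] + 1  # shift back to frame indices

def music_beat_frames(bpm, fps, num_frames):
    """Frame indices of musical beats for a steady tempo."""
    seconds_per_beat = 60.0 / bpm
    beat_times = np.arange(0, num_frames / fps, seconds_per_beat)
    return np.round(beat_times * fps).astype(int)

# Synthetic dancer: 3 joints swaying left and right, turning around every 15 frames
fps, num_frames = 30, 91
frames = np.arange(num_frames)
x = 100 * np.cos(np.pi * frames / 15)
keypoints = np.zeros((num_frames, 3, 2))
keypoints[:, :, 0] = x[:, None] + np.array([0, 20, 40])  # same motion, different offsets
keypoints[:, :, 1] = 200.0                                # constant height

dance = kinematic_beats(keypoints)
music = music_beat_frames(bpm=120, fps=fps, num_frames=num_frames)
# Distance (in frames) from each kinematic beat to the nearest musical beat
offsets = np.abs(dance[:, None] - music[None, :]).min(axis=1)
print(dance)
print(music)
print(offsets)
```

Small offsets mean the movement "hits" the beat; a generative choreography model can favour movement units whose kinematic beats align with the music in this way.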

For listeners, AI can improve music discovery and the listening experience. Platforms like Spotify and Apple Music use AI to curate personalized playlists and recommend new music based on users' listening habits. When these platforms introduce you to new artists and genres, that's AI at work.
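Streaming services do not publish their full recommendation systems, so as an illustration only, here is a classic technique for "recommend based on listening habits": user-based collaborative filtering. It finds listeners whose play counts are most similar (by cosine similarity) and suggests tracks they play that the target user has not heard. The play-count matrix and function names are hypothetical.

```python
import numpy as np

# Hypothetical play counts: rows are users, columns are tracks
tracks = ["Track A", "Track B", "Track C", "Track D", "Track E"]
plays = np.array([
    [5, 3, 0, 0, 1],  # user 0
    [4, 0, 0, 1, 0],  # user 1
    [0, 0, 5, 4, 0],  # user 2
    [1, 0, 4, 5, 0],  # user 3
    [5, 4, 0, 0, 2],  # user 4
], dtype=float)

def cosine_similarity_matrix(m):
    """Pairwise cosine similarity between the rows of m."""
    norms = np.linalg.norm(m, axis=1, keepdims=True)
    unit = m / np.where(norms == 0, 1, norms)  # avoid division by zero for empty rows
    return unit @ unit.T

def recommend(plays, user, k=2, n_neighbors=2):
    """Suggest up to k unplayed tracks, weighted by how similar other listeners are."""
    sims = cosine_similarity_matrix(plays)[user]
    sims[user] = -np.inf                           # exclude the user themself
    neighbors = np.argsort(sims)[::-1][:n_neighbors]
    scores = sims[neighbors] @ plays[neighbors]    # similarity-weighted play counts
    scores[plays[user] > 0] = -np.inf              # only recommend new tracks
    candidates = [i for i in np.argsort(scores)[::-1] if scores[i] > 0]
    return [int(i) for i in candidates[:k]]

print([tracks[i] for i in recommend(plays, user=1)])  # ['Track B', 'Track E']
```

Real platforms combine signals like this with audio features and learned embeddings at far larger scale, but the core idea, "people who listen like you also play this", is the same.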

AI-powered virtual reality (VR) is also changing live concert experiences. For example, Travis Scott has used VR to create virtual performances that reach global audiences. However, the abundance of AI-generated music on platforms like TikTok can make music discovery overwhelming and make it harder for new artists to stand out.

Producers benefit from AI in several ways. AI tools that assist with pitch correction, mixing, and mastering streamline the production process. AI-powered virtual instruments and synthesizers, like IBM's Watson Beat, can create new sounds and textures that expand creative possibilities.
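Commercial pitch-correction tools are proprietary and far more sophisticated. As a minimal sketch of the underlying idea only (not how any particular product works), the code below estimates a note's pitch with autocorrelation, refines it with parabolic interpolation, and snaps it to the nearest semitone of 12-tone equal temperament (A4 = 440 Hz). The actual shifting of the audio, which would need a resampling or phase-vocoder step, is not shown. The input is an assumed synthetic "slightly sharp" A4.

```python
import numpy as np

A4_HZ = 440.0

def estimate_pitch(signal, sample_rate, fmin=80.0, fmax=1000.0):
    """Estimate the fundamental frequency (Hz) using autocorrelation."""
    signal = signal - signal.mean()
    corr = np.correlate(signal, signal, mode="full")[len(signal) - 1:]  # lags 0..N-1
    min_lag, max_lag = int(sample_rate / fmax), int(sample_rate / fmin)
    i = min_lag + int(np.argmax(corr[min_lag:max_lag]))  # lag of the strongest repeat
    # Refine the integer lag with parabolic interpolation around the peak
    y0, y1, y2 = corr[i - 1], corr[i], corr[i + 1]
    denom = y0 - 2 * y1 + y2
    shift = 0.5 * (y0 - y2) / denom if denom != 0 else 0.0
    return sample_rate / (i + shift)

def nearest_semitone(freq_hz):
    """Snap a frequency to the nearest equal-tempered note (A4 = 440 Hz)."""
    semitones_from_a4 = np.round(12 * np.log2(freq_hz / A4_HZ))
    return A4_HZ * 2 ** (semitones_from_a4 / 12)

sr = 16_000
t = np.arange(int(0.1 * sr)) / sr
sung = np.sin(2 * np.pi * 445.0 * t)  # a slightly sharp A4

detected = estimate_pitch(sung, sr)
target = nearest_semitone(detected)
print(round(float(detected), 1), round(float(target), 1))
print(f"shift by {12 * np.log2(target / detected):+.2f} semitones")
```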

AI on streaming platforms doesn't just benefit listeners; it also helps producers reach a wider audience. However, as with musicians, AI's ability to mimic the style of established artists raises ethical and legal questions about exploiting artists' unique voices and styles. This has led to legal disputes, such as the lawsuits filed by major music companies Universal, Sony, and Warner against the AI startups Suno and Udio for allegedly using copyrighted works to train their models without permission.

We've briefly explored some applications of AI in music through its impact on different stakeholders in the music industry. Now, let's look at a more specific application: AI-enhanced music management tools like MusicBrainz Picard, which are incredibly useful for organizing and managing digital music libraries.

They automatically identify and tag music files with accurate metadata, such as artist names, album titles, and track numbers. MusicBrainz Picard makes it easier to keep music collections well-organized. One of the key technologies integrated into MusicBrainz Picard is AcoustID audio fingerprints. These fingerprints identify music files based on their actual audio content, even if the files lack metadata.
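AcoustID computes its fingerprints with the Chromaprint library, whose algorithm is more elaborate than anything shown here. As a simplified, hypothetical illustration of the general idea (identifying audio by its content rather than by its tags), the sketch below reduces each frame to 16 bits that record whether each frequency band holds more energy than the next one, then compares fingerprints by the fraction of differing bits. Random noise stands in for real recordings.

```python
import numpy as np

def fingerprint(signal, frame_size=2048, hop=1024, num_bands=17):
    """Toy fingerprint: one integer per frame, one bit per adjacent band pair."""
    window = np.hanning(frame_size)
    weights = 1 << np.arange(num_bands - 1)  # bit values 1, 2, 4, ...
    codes = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = signal[start:start + frame_size] * window
        power = np.abs(np.fft.rfft(frame)) ** 2
        energy = np.array([band.sum() for band in np.array_split(power, num_bands)])
        # Bit m is 1 when band m has more energy than band m + 1
        bits = (energy[:-1] > energy[1:]).astype(np.int64)
        codes.append(int(bits @ weights))
    return np.array(codes, dtype=np.int64)

def bit_error_rate(fp_a, fp_b, bits_per_code=16):
    """Fraction of differing bits between two equally long fingerprints."""
    diff = np.bitwise_xor(fp_a, fp_b)
    differing = sum(bin(int(d)).count("1") for d in diff)
    return differing / (len(diff) * bits_per_code)

rng = np.random.default_rng(0)
sr = 16_000
song = rng.normal(size=sr)        # 1 s noise-like stand-in for a recording
other_song = rng.normal(size=sr)  # a different recording
# Same audio, re-encoded: quieter and with a little added noise
degraded = 0.5 * song + 0.05 * rng.normal(size=sr)

fp = fingerprint(song)
print(bit_error_rate(fp, fingerprint(degraded)))    # low: likely the same track
print(bit_error_rate(fp, fingerprint(other_song)))  # near 0.5: unrelated audio
```

Because only energy comparisons are stored, a quieter, slightly noisy copy of the same audio keeps most of its bits, while unrelated audio differs in roughly half of them. That is what lets a fingerprint service match a file even when its tags are missing.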

Why is this so important? Major organizations like the BBC, Google, Amazon, Spotify, and Pandora rely on MusicBrainz data to enhance their music-related services. The metadata created with tools like MusicBrainz Picard is crucial for developers building music databases, tagger applications, and other music software. Data is the backbone of AI, and without tools like Picard, it would be very difficult to obtain the clean, accurate data needed for analysis and application development. Interestingly, these tools both use AI techniques and help create the data that AI applications depend on, forming a cycle of improvement and innovation.

We've discussed the waves that AI is making in music. The legal landscape surrounding AI-generated music is also evolving. Current guidance from the U.S. Copyright Office states that works generated entirely by AI cannot be copyrighted because they lack human authorship. However, if a human makes a significant creative contribution, the work may qualify for copyright protection. As AI becomes more integrated into the music industry, ongoing legal and ethical discussions will be vital to navigating these challenges. Looking ahead, AI has tremendous potential in music, combining technology with human creativity to expand what's possible in music creation and production.

Explore AI by visiting our GitHub repository and joining our vibrant community. Learn about AI applications in manufacturing and agriculture on our solutions pages.