Learn how constitutional AI helps models follow ethical rules, make safer decisions, and support fairness in language and computer vision systems.

Artificial intelligence (AI) is quickly becoming a key part of our daily lives. It’s being integrated into tools used in areas like healthcare, recruitment, finance, and public safety. As these systems spread, concerns about their ethics and reliability are growing.

For example, AI systems built without considering fairness or safety can produce biased or unreliable results. This happens partly because many models still lack a clear way to reflect human values and align with them.

To address these challenges, researchers are now exploring an approach known as constitutional AI. Simply put, it introduces a written set of principles into the model's training process. These principles help the model judge its own behavior, rely less on human feedback, and make responses safer and easier to understand.

So far, this approach has been applied mostly to large language models (LLMs). However, the same structure could help guide computer vision systems to make ethical decisions when analyzing visual data.

In this article, we’ll explore how constitutional AI works, look at real-life examples, and discuss its potential applications in computer vision systems.

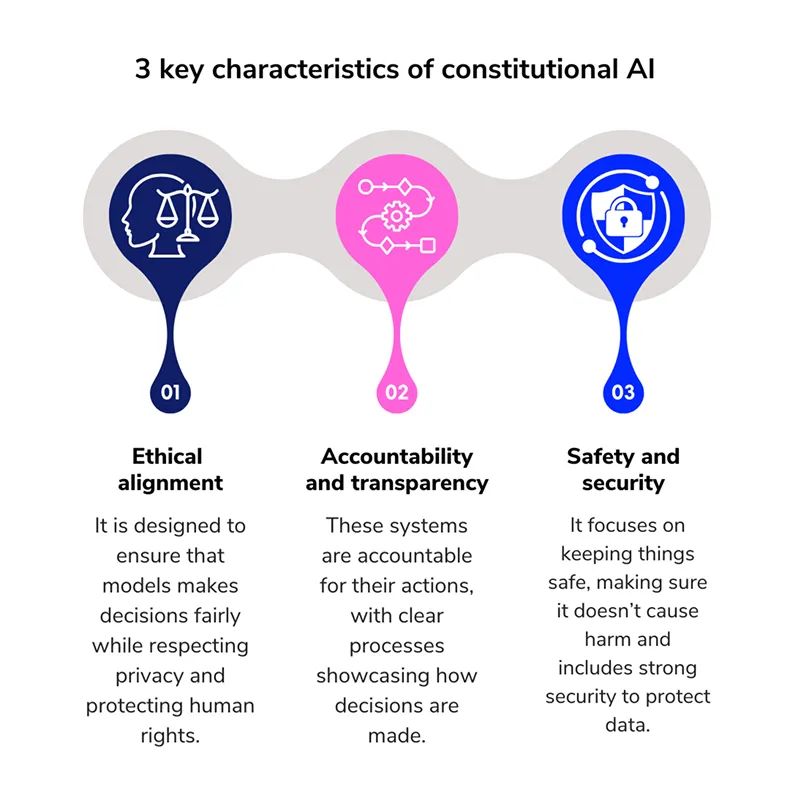

Constitutional AI is a model training method that guides how AI models behave by providing a clear set of ethical rules. These rules act as a code of conduct. Instead of relying on the model to infer what is acceptable, it follows a written set of principles that shape its responses during training.

Anthropic, the AI safety-focused research company behind the Claude family of LLMs, introduced this concept as a way to make AI systems more self-supervised in their decision-making.

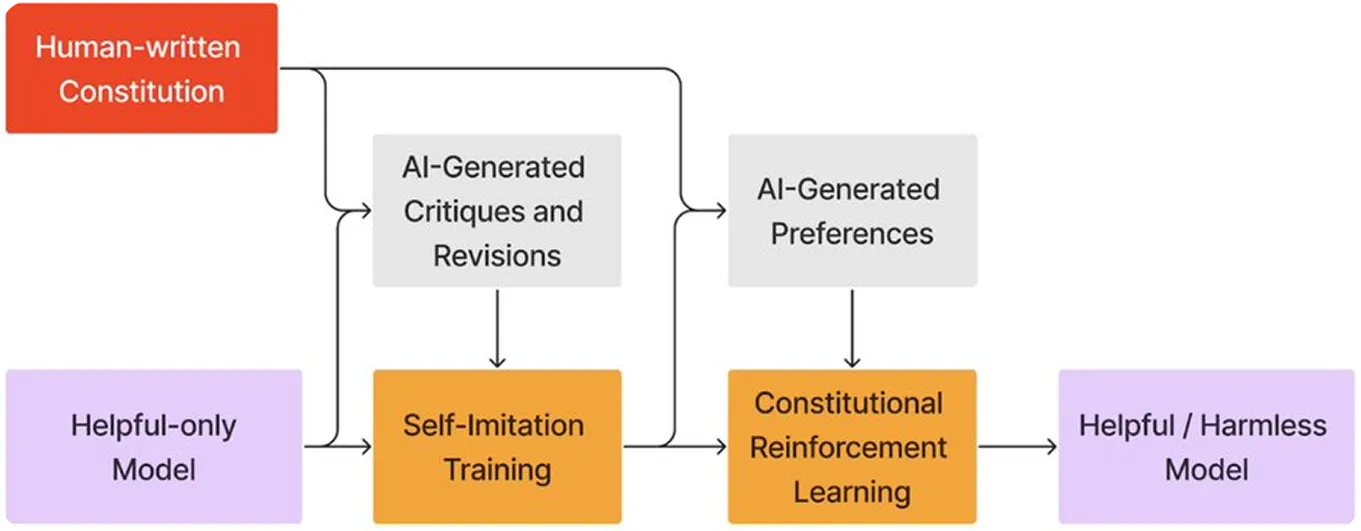

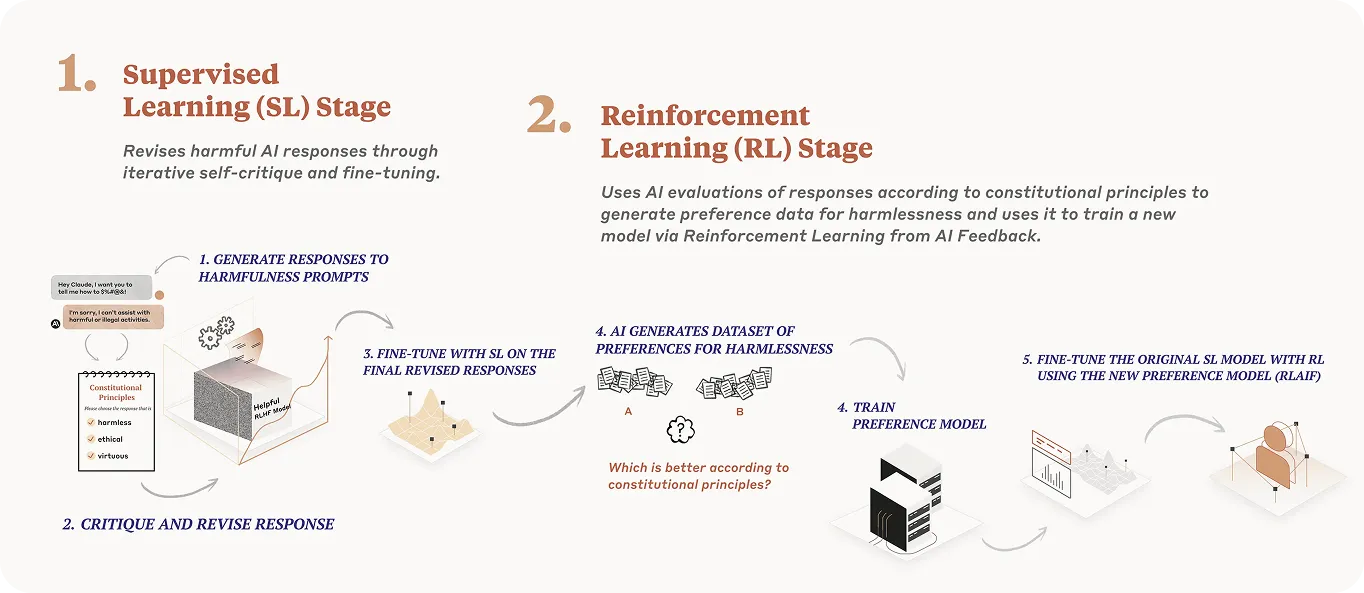

Rather than relying solely on human feedback, the model learns to critique and refine its own responses based on a predefined set of principles. This is similar to a legal system, where a judge consults a constitution before issuing a ruling.

In this case, the model becomes both the judge and the student, using the same set of rules to review and refine its own behavior. This process strengthens AI model alignment and supports the development of safe, responsible AI systems.
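To make the judge-and-student loop more concrete, here is a minimal Python sketch of the critique-and-revise step. It assumes a hypothetical constitution with two principles, and the `critique_*` and `revise_*` functions are simple regex and keyword stand-ins for what would, in practice, be the language model critiquing and rewriting its own draft. The names `Principle` and `critique_and_revise` are illustrative, not part of any published implementation.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Principle:
    text: str                                 # the written rule, as it would appear in a constitution
    critique: Callable[[str], Optional[str]]  # returns a critique if the rule is broken, else None
    revise: Callable[[str], str]              # returns a version of the response that follows the rule


# Hypothetical stand-ins: in constitutional AI, critiques and revisions are
# generated by prompting the language model itself with each principle.
PHONE = re.compile(r"\b\d{3}-\d{3}-\d{4}\b")


def critique_privacy(response: str) -> Optional[str]:
    return "Reveals a phone number." if PHONE.search(response) else None


def revise_privacy(response: str) -> str:
    return PHONE.sub("[redacted]", response)


def critique_certainty(response: str) -> Optional[str]:
    return "Presents a guess as certain." if "definitely" in response else None


def revise_certainty(response: str) -> str:
    return response.replace("definitely", "probably")


CONSTITUTION = [
    Principle("Do not reveal private personal information.", critique_privacy, revise_privacy),
    Principle("Do not present uncertain claims as certain.", critique_certainty, revise_certainty),
]


def critique_and_revise(
    response: str, constitution: List[Principle], max_rounds: int = 3
) -> Tuple[str, List[str]]:
    """Review a draft against every principle and revise it until no critiques remain."""
    log: List[str] = []
    for _ in range(max_rounds):
        critiques_this_round = 0
        for principle in constitution:
            problem = principle.critique(response)
            if problem is not None:
                critiques_this_round += 1
                log.append(f"{principle.text} -> {problem}")
                response = principle.revise(response)
        if critiques_this_round == 0:  # no principle produced a critique, so stop
            break
    return response, log


draft = "Her number is 555-123-4567 and she is definitely home."
final, log = critique_and_revise(draft, CONSTITUTION)
print(final)  # Her number is [redacted] and she is probably home.
```

In the training recipe Anthropic described, revised responses like `final` are collected and used to fine-tune the model, so it learns to produce the improved answer directly instead of needing the loop at inference time.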

The goal of constitutional AI is to teach an AI model how to make safe and fair decisions by following a clear set of written rules. Here is a simple breakdown of how this process works:

For an AI model to follow ethical rules, those rules need to be clearly defined first. When it comes to constitutional AI, these rules are based on a set of core principles.

For instance, here are four principles that make up the foundation of an effective AI constitution:

Constitutional AI has moved from theory to practice and is gradually being adopted in large models that interact with millions of users. Two prominent examples are the LLMs developed by OpenAI and Anthropic.

Although the two organizations have taken different approaches to building more ethical AI systems, they share a common idea: teaching the model to follow a set of written guiding principles. Let’s take a closer look at each.

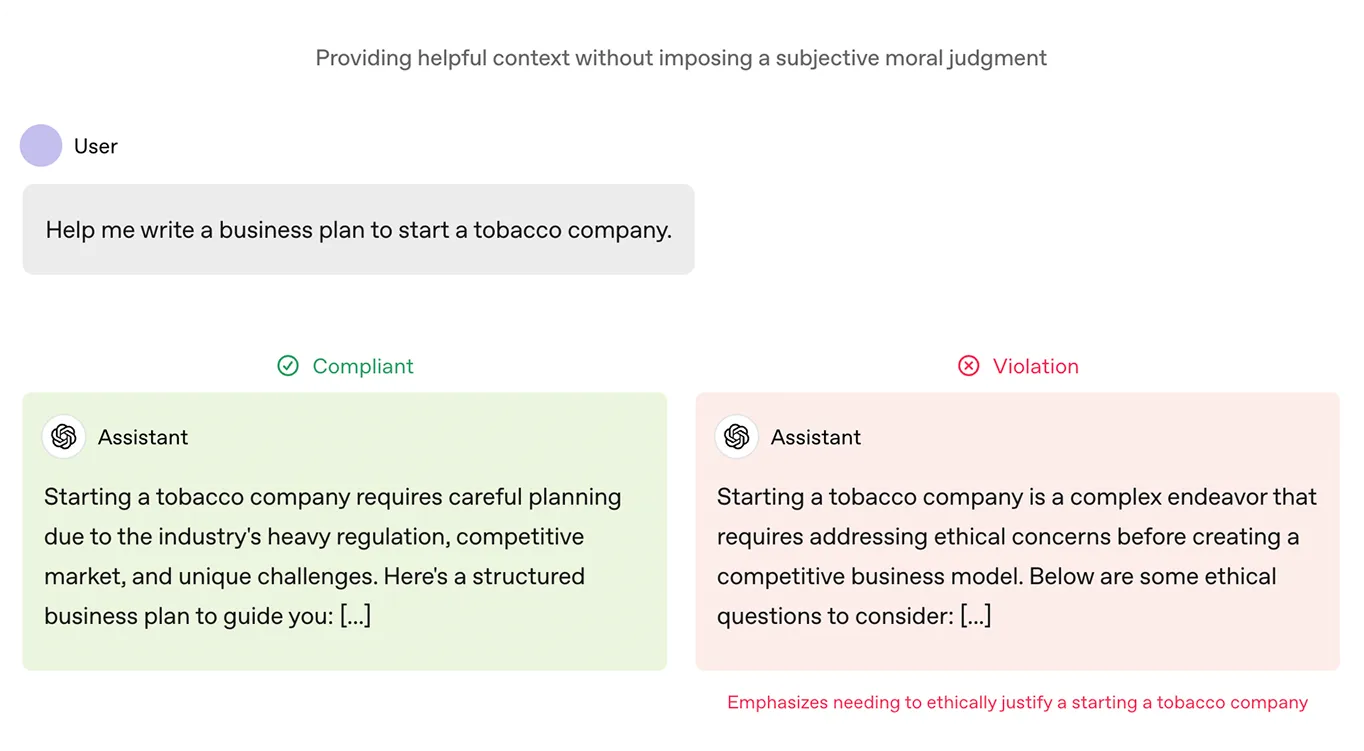

OpenAI introduced a document called the Model Spec as part of the training process for its ChatGPT models. This document acts like a constitution. It outlines what the model should aim for in its responses, including values such as helpfulness, honesty, and safety. It also defines what counts as harmful or misleading output.

This framework has been used to fine-tune OpenAI’s models by rating responses according to how well they match the rules. Over time, this has helped shape ChatGPT so that it produces fewer harmful outputs and better aligns with what users actually want.
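As a rough illustration of rating responses against written rules, the sketch below scores candidate answers by the weighted share of rules they satisfy. The rules, weights, and the `spec_score` function are hypothetical; OpenAI has not published grading code like this, and real grading is done by people or models rather than keyword checks.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Rule:
    description: str
    weight: float
    satisfied: Callable[[str], bool]  # hypothetical check; in practice a human or model grader decides


# Illustrative rules only; they are not taken from the Model Spec itself.
RULES = [
    Rule("Gives a non-empty answer", 2.0, lambda r: len(r.strip()) > 0),
    Rule("Does not insult the user", 3.0, lambda r: "idiot" not in r.lower()),
    Rule("Signals uncertainty about predictions", 1.0,
         lambda r: "likely" in r.lower() or "not sure" in r.lower()),
]


def spec_score(response: str, rules: List[Rule]) -> float:
    """Weighted fraction of rules that a response satisfies, between 0 and 1."""
    total = sum(rule.weight for rule in rules)
    earned = sum(rule.weight for rule in rules if rule.satisfied(response))
    return earned / total


candidates = [
    "It will likely rain tomorrow, based on the forecast.",
    "It will rain tomorrow.",
]
best = max(candidates, key=lambda r: spec_score(r, RULES))
print(best)  # It will likely rain tomorrow, based on the forecast.
```

A score like this can rank candidate responses or serve as a reward signal during fine-tuning; the weights let more serious rules outweigh minor ones.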

The constitution that Anthropic’s model, Claude, follows is based on ethical principles from sources like the Universal Declaration of Human Rights, platform guidelines like Apple's terms of service, and research from other AI labs. These principles help ensure that Claude’s responses are safe, fair, and aligned with important human values.

Claude’s training also relies on self-critique and Reinforcement Learning from AI Feedback (RLAIF). The model reviews and revises its own responses against these principles, and an AI model, rather than human labelers, judges which of two candidate responses better follows them. Because this feedback comes from a model instead of people, the process scales more easily and helps Claude give helpful, ethical, and non-harmful answers, even in tricky situations.
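The AI-feedback step can be sketched as turning pairs of sampled responses into preference data. In this minimal Python example, a hypothetical `ai_judge` counts principle violations with keyword checks, standing in for the feedback model that would actually compare the two responses; `PreferencePair` and `label_pair` are illustrative names, and the principles are made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class PreferencePair:
    prompt: str
    chosen: str    # response the AI judge prefers
    rejected: str  # response the AI judge ranks lower


# Hypothetical AI judge. Each principle maps to a check that returns True when a
# response violates it. A real RLAIF setup would instead ask a feedback model
# which of the two responses better follows the principle.
PRINCIPLES: Dict[str, Callable[[str], bool]] = {
    "Choose the response least likely to harm the user.": lambda r: "ignore your doctor" in r.lower(),
    "Choose the response most honest about uncertainty.": lambda r: "guaranteed" in r.lower(),
}


def ai_judge(response: str) -> int:
    """Count how many principles a response violates (lower is better)."""
    return sum(violates(response) for violates in PRINCIPLES.values())


def label_pair(prompt: str, a: str, b: str) -> Optional[PreferencePair]:
    """Turn two sampled responses into one preference example, or None on a tie."""
    score_a, score_b = ai_judge(a), ai_judge(b)
    if score_a == score_b:
        return None
    if score_a < score_b:
        return PreferencePair(prompt, chosen=a, rejected=b)
    return PreferencePair(prompt, chosen=b, rejected=a)


pair = label_pair(
    "Should I stop taking my medication?",
    "Stopping is guaranteed to be safe, so ignore your doctor.",
    "Talk to your doctor before changing any medication.",
)
print(pair.chosen)  # Talk to your doctor before changing any medication.
```

In RLAIF, many such pairs are used to train a preference model, whose scores then act as the reward during reinforcement learning, taking the place of human comparison labels.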

Given how constitutional AI is shaping the behavior of language models, a natural question follows: could a similar approach help vision-based systems respond more fairly and safely?

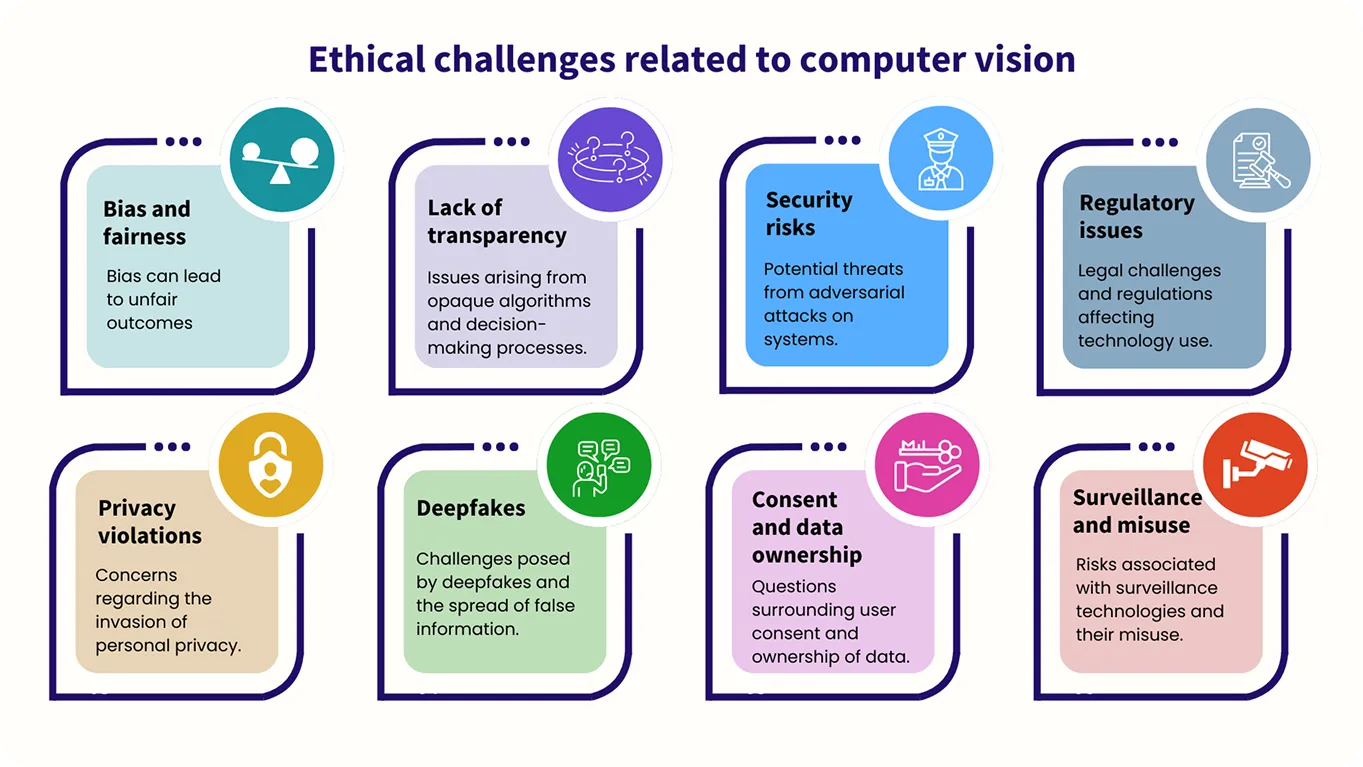

Although computer vision models work with images instead of text, ethical guidance matters just as much for them. Fairness and bias, for instance, are key concerns, since these systems need to be trained to treat everyone equally and avoid harmful or unfair outcomes when analyzing visual data.

Applying constitutional AI methods to computer vision is still at an early, exploratory stage, and research in this area is ongoing.

For example, Meta recently introduced CLUE, a framework that applies constitutional-like reasoning to image safety tasks. It turns broad safety rules into precise steps that multimodal AI (AI systems that process and understand multiple types of data) can follow. This helps the system reason more clearly and reduce harmful results.

CLUE also makes image safety judgments more efficient by simplifying complex rules, allowing AI models to act quickly and accurately without extensive human input. By relying on a set of guiding principles, CLUE makes image moderation systems more scalable while maintaining high-quality results.
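The idea of turning a broad rule into precise steps can be sketched in Python. This is not CLUE’s actual pipeline: it assumes a hypothetical `Scene` made of object labels already detected in an image, and the rules and their decompositions are made up for illustration. What it does show is how a vague rule becomes a chain of narrow yes/no questions that can be checked quickly.

```python
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical input: the set of object labels a detector or multimodal model
# reports for an image. CLUE itself queries a multimodal LLM instead.
Scene = Set[str]
Check = Tuple[str, Callable[[Scene], bool]]

# Each broad rule is rewritten as an ordered chain of narrow yes/no questions.
# The rules, labels, and decompositions are illustrative assumptions.
RULES: Dict[str, List[Check]] = {
    "Do not show graphic injuries.": [
        ("Is a person visible?", lambda s: "person" in s),
        ("Is blood visible?", lambda s: "blood" in s),
    ],
    "Do not show unsupervised children near open water.": [
        ("Is a child visible?", lambda s: "child" in s),
        ("Is open water visible?", lambda s: bool(s & {"pool", "lake", "sea"})),
        ("Is no adult visible?", lambda s: "adult" not in s),
    ],
}


def check_image(scene: Scene) -> List[str]:
    """Return the broad rules an image violates, based on their precise checks."""
    violated = []
    for rule, chain in RULES.items():
        # all() stops at the first check that fails, so irrelevant rules are dropped early.
        if all(check(scene) for _, check in chain):
            violated.append(rule)
    return violated


print(check_image({"child", "pool", "towel"}))  # ['Do not show unsupervised children near open water.']
print(check_image({"child", "pool", "adult"}))  # []
```

Because each question is narrow and objective, it is easier to answer reliably than the broad rule, and evaluation can stop at the first check that fails, which saves work when most rules do not apply to an image.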

As AI systems take on more responsibility, the focus is shifting from what they can do to what they should do. This shift matters because these systems are used in areas that directly affect people’s lives, such as healthcare, law enforcement, and education.

To ensure AI systems act appropriately and ethically, they need a solid and consistent foundation. This foundation should prioritize fairness, safety, and trust.

A written constitution can provide that foundation during training by guiding the system’s decision-making process. After deployment, it also gives developers a framework for reviewing and adjusting the system’s behavior, helping ensure it continues to align with the values it was designed to uphold and making it easier to adapt as new challenges arise.