The Latest OpenAI Updates: Canvas, Vision Fine-Tuning, and More

Join us for a closer look at OpenAI's latest ChatGPT updates. We will explore Canvas, fine-tuning for vision capabilities, and the new search feature.

Since we looked at OpenAI's o1 models in September (which are designed to improve reasoning), many new and exciting features have been added to ChatGPT. Some of these additions are aimed at developers, while others are meant to improve usability. Taken together, the updates help make interacting with ChatGPT more intuitive and effective.

Updates such as Canvas, which is designed for collaborative writing and coding, and vision fine-tuning, which improves how ChatGPT works with images, have attracted a lot of interest and encouraged users to explore more creative possibilities. Technical improvements such as new APIs and fairness test reports address aspects like model integration and ethical AI practices. Let's dive in and get a better understanding of OpenAI's latest ChatGPT updates!

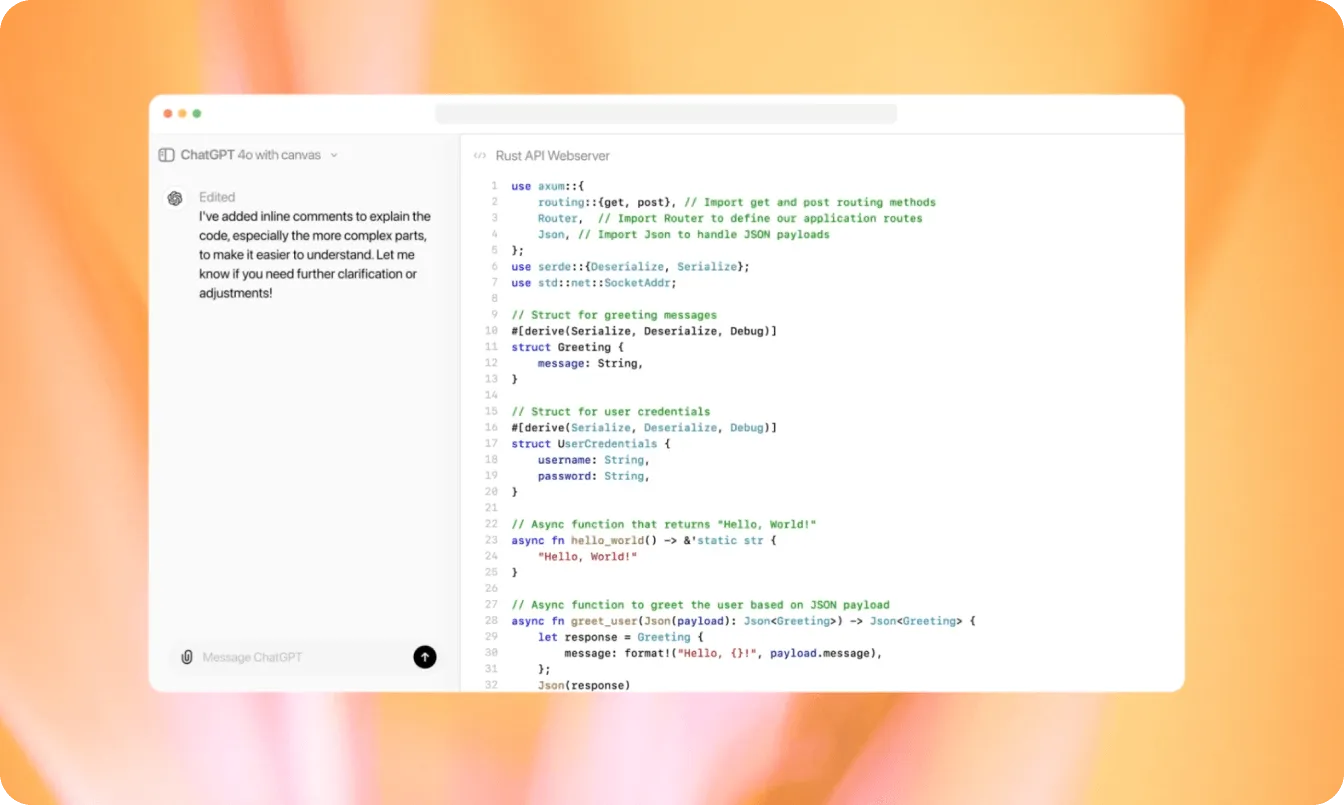

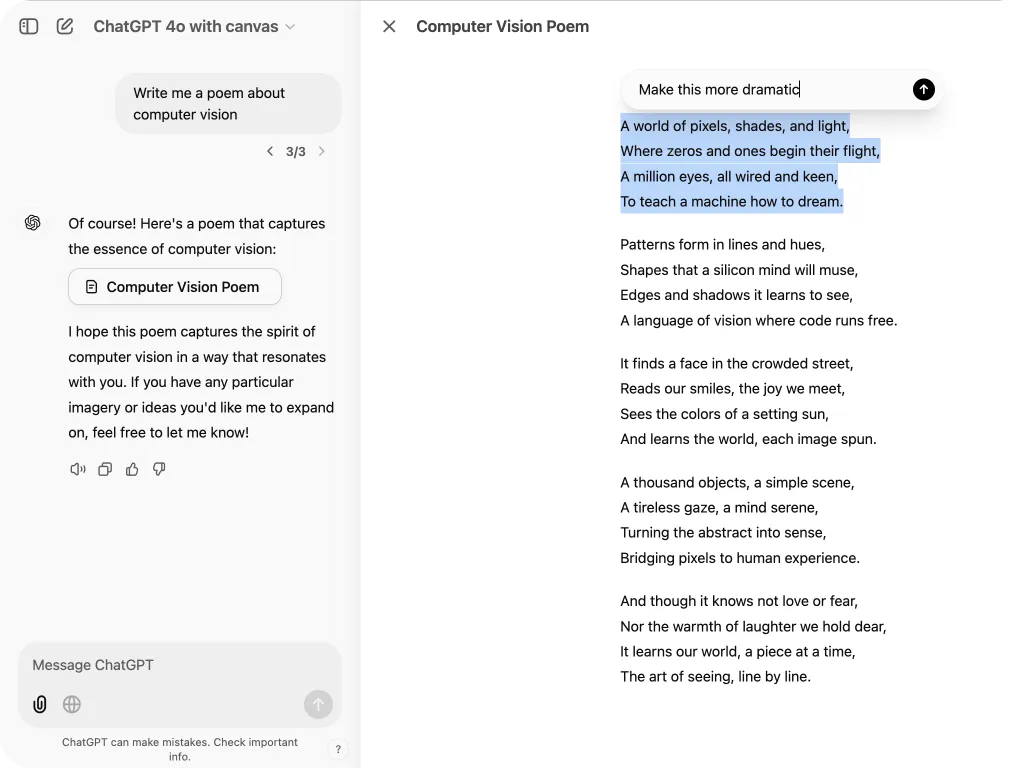

Canvas is the first major update to the ChatGPT user interface (UI) since its release. It is a new interface with a two-pane layout: prompts appear in a left sidebar and responses in a pane on the right. The new UI replaces the usual single-pane, chat-style workflow with a layout that is better suited to multitasking and is intended to boost productivity.

Before Canvas was introduced, working with long documents in ChatGPT meant a lot of scrolling up and down. In the new layout, prompts are shown in the left sidebar, and the text document or code snippet takes up most of the screen. If needed, you can even resize the left sidebar and the output pane. You can also select part of the text or a section of the code and edit just that section without changing the entire document.

When you use Canvas, you will notice that the ChatGPT interface has no dedicated button or toggle to open it. If you are working with the GPT-4o model, Canvas opens automatically when it detects that you are editing, writing, or coding. For simpler prompts, it stays inactive. If you want to open it manually, you can use prompts such as "Open the canvas" or "Get me the canvas layout".

Canvas is currently in beta and is only available with GPT-4o. However, OpenAI has mentioned that Canvas will be available to all free users once it leaves beta.

OpenAI has released three new API updates intended to improve efficiency, scalability, and versatility. Let's take a closer look at each of them.

With the Model Distillation feature in the OpenAI APIs, developers can use the outputs of advanced models such as GPT-4o or o1-preview to improve the performance of smaller, more cost-efficient models such as GPT-4o mini. Model distillation is a process in which smaller models are trained to replicate the behavior of more advanced models, which makes them more efficient for specific tasks.

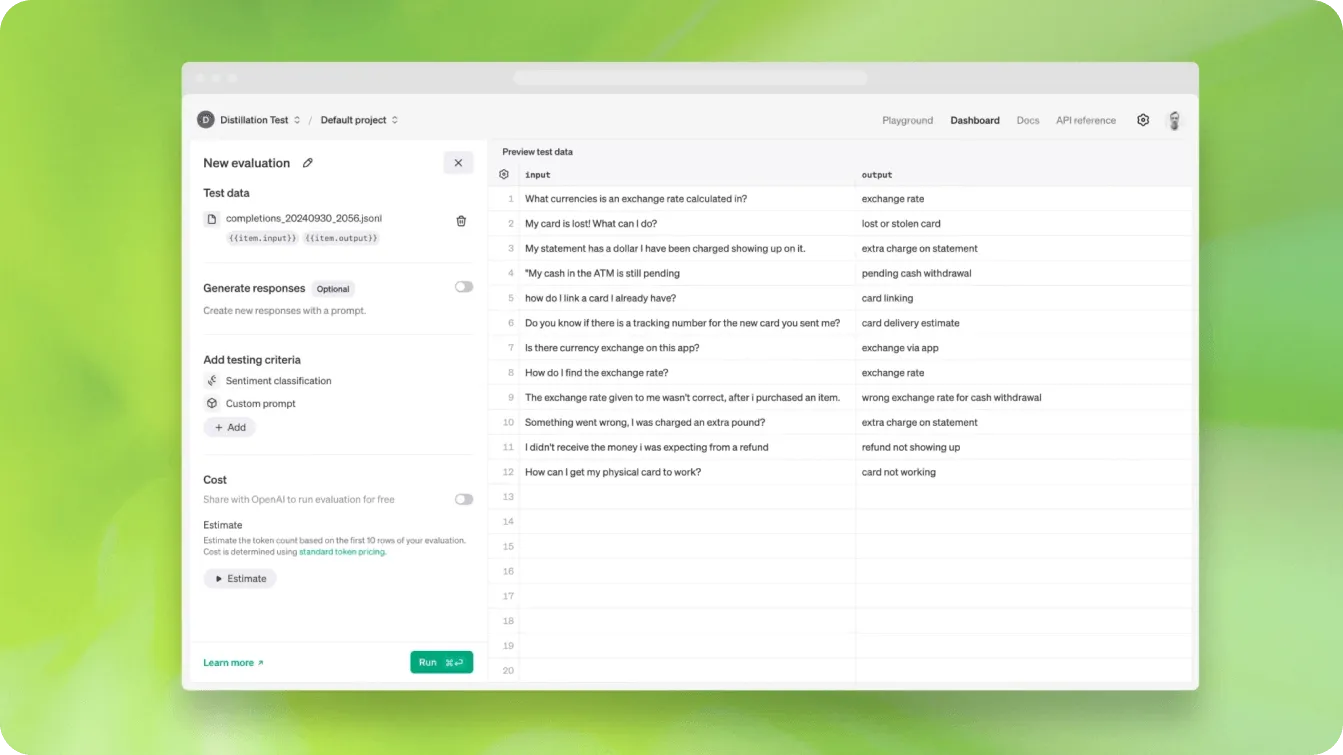

Before this feature was introduced, developers had to coordinate a variety of tasks manually across different tools. These tasks included creating datasets, measuring model performance, and fine-tuning models, which often made the process complex and error-prone. With the Model Distillation update, developers can use Stored Completions, a tool that generates datasets automatically by capturing and storing the input-output pairs that advanced models produce through the API.
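To make the idea of capturing input-output pairs more concrete, here is a minimal sketch of how a request might be marked for storage. It only builds the request payload and makes no network call. The helper name `build_stored_completion_request` and the tag values are hypothetical; the `store` and `metadata` fields are our understanding of the Chat Completions parameters used for this, so check the current API reference before relying on them.

```python
from typing import Optional


def build_stored_completion_request(
    prompt: str,
    model: str = "gpt-4o",
    tags: Optional[dict] = None,
) -> dict:
    """Build a chat completion payload that asks the API to store the result.

    Hypothetical helper for illustration only; it does not send anything.
    """
    return {
        "model": model,  # the larger "teacher" model whose outputs are captured
        "messages": [{"role": "user", "content": prompt}],
        "store": True,  # assumption: flag that keeps the input-output pair
        "metadata": dict(tags or {}),  # tags to filter stored pairs later
    }


request = build_stored_completion_request(
    "Summarize this support ticket in one sentence.",
    tags={"task": "ticket-summary"},
)
print(request)
```

With the official `openai` Python package, a payload like this would typically be passed as `client.chat.completions.create(**request)`. The stored pairs can then serve as a dataset for fine-tuning a smaller model such as GPT-4o mini.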

Another part of Model Distillation, Evals (currently in beta), helps measure how well a model performs on specific tasks without the need to write custom evaluation scripts or use separate tools. By using datasets generated with Stored Completions and assessing performance with Evals, developers can fine-tune their own custom GPT models.

When building AI applications, especially chatbots, the same context (the background information or conversation history needed to understand the current request) is often reused across multiple API calls. Prompt caching lets developers reuse recently seen input tokens (the text segments the model processes to understand the prompt and generate a response), which helps reduce cost and latency.

Since October 1, OpenAI has automatically applied prompt caching to models such as GPT-4o, GPT-4o mini, o1-preview, and o1-mini. This means that when developers use the API to interact with a model using a long prompt (over 1,024 tokens), the system stores the parts it has already processed.

As a result, the system can skip recomputing those parts when the same or similar prompts are used again. It automatically caches the longest prompt prefix it has seen before, starting at 1,024 tokens and growing in increments of 128 tokens as the prompt gets longer.
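The prefix rule described above can be worked through as simple arithmetic. The sketch below is a rough illustration, not OpenAI's implementation: the function name `cacheable_prefix_tokens` is hypothetical, and treating a prompt of exactly 1,024 tokens as eligible is an assumption.

```python
MIN_CACHEABLE_TOKENS = 1024  # smallest prefix that is cached, per the article
CACHE_INCREMENT = 128        # the cached prefix grows in blocks of this size


def cacheable_prefix_tokens(prompt_tokens: int) -> int:
    """Return the longest prefix length (in tokens) eligible for caching."""
    if prompt_tokens < MIN_CACHEABLE_TOKENS:
        return 0  # too short: nothing is cached
    # Count only the full 128-token blocks beyond the first 1,024 tokens.
    extra_blocks = (prompt_tokens - MIN_CACHEABLE_TOKENS) // CACHE_INCREMENT
    return MIN_CACHEABLE_TOKENS + extra_blocks * CACHE_INCREMENT


for n in (1000, 1024, 1151, 1152, 2000):
    print(n, "->", cacheable_prefix_tokens(n))
```

Because only the leading part of a prompt can be reused, placing static content (such as system instructions or shared context) first and variable content last should give the cache the best chance of matching.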

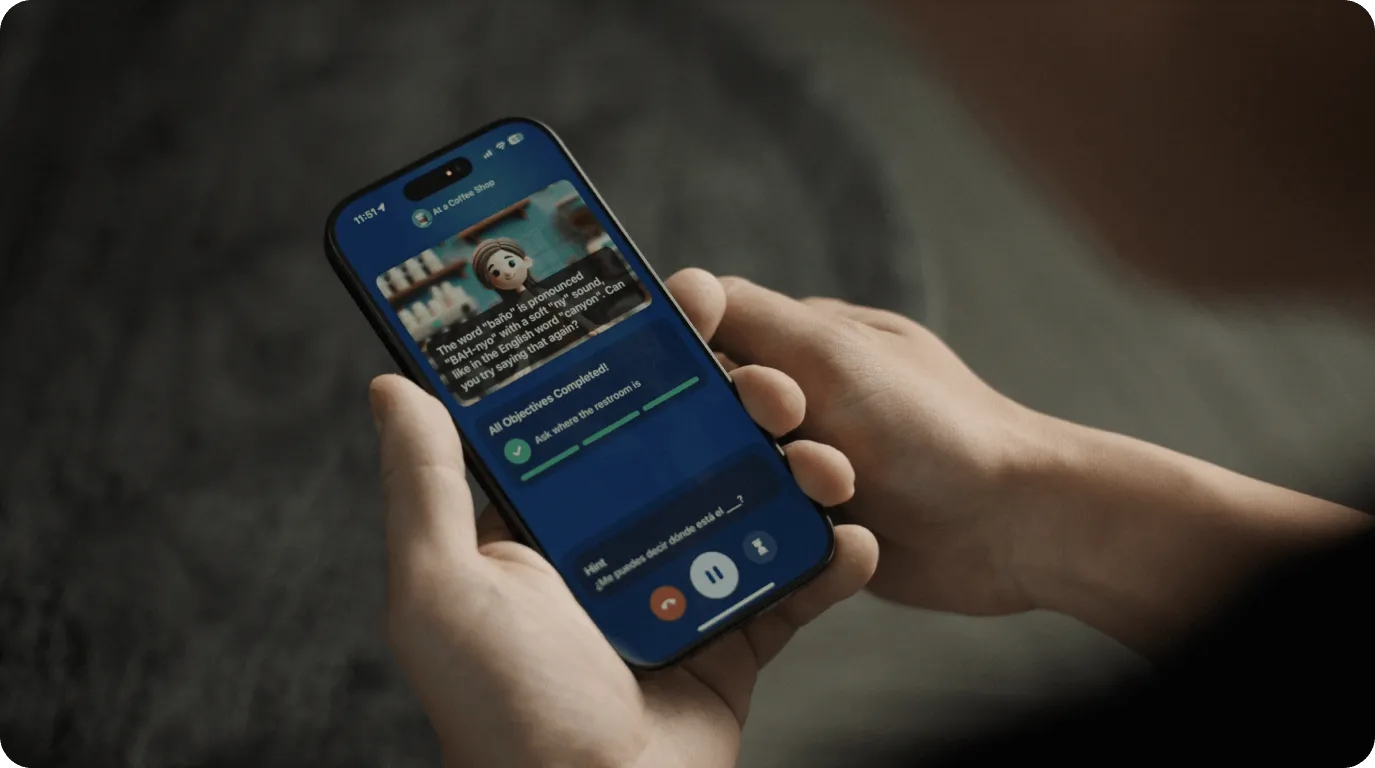

Building a voice assistant usually requires transcribing audio to text, processing the text, and then converting the result back into audio to play the response. OpenAI's Realtime API aims to handle this entire process with a single API request. By simplifying the pipeline, the API makes real-time conversations with AI possible.

For example, a voice assistant built on the Realtime API can carry out specific actions based on user requests, such as placing an order or looking up information. The API makes the voice assistant more responsive and lets it adapt quickly to users' needs. The Realtime API was released as a public beta on October 1 with six voices. On October 30, five more voices were added, bringing the total to eleven.

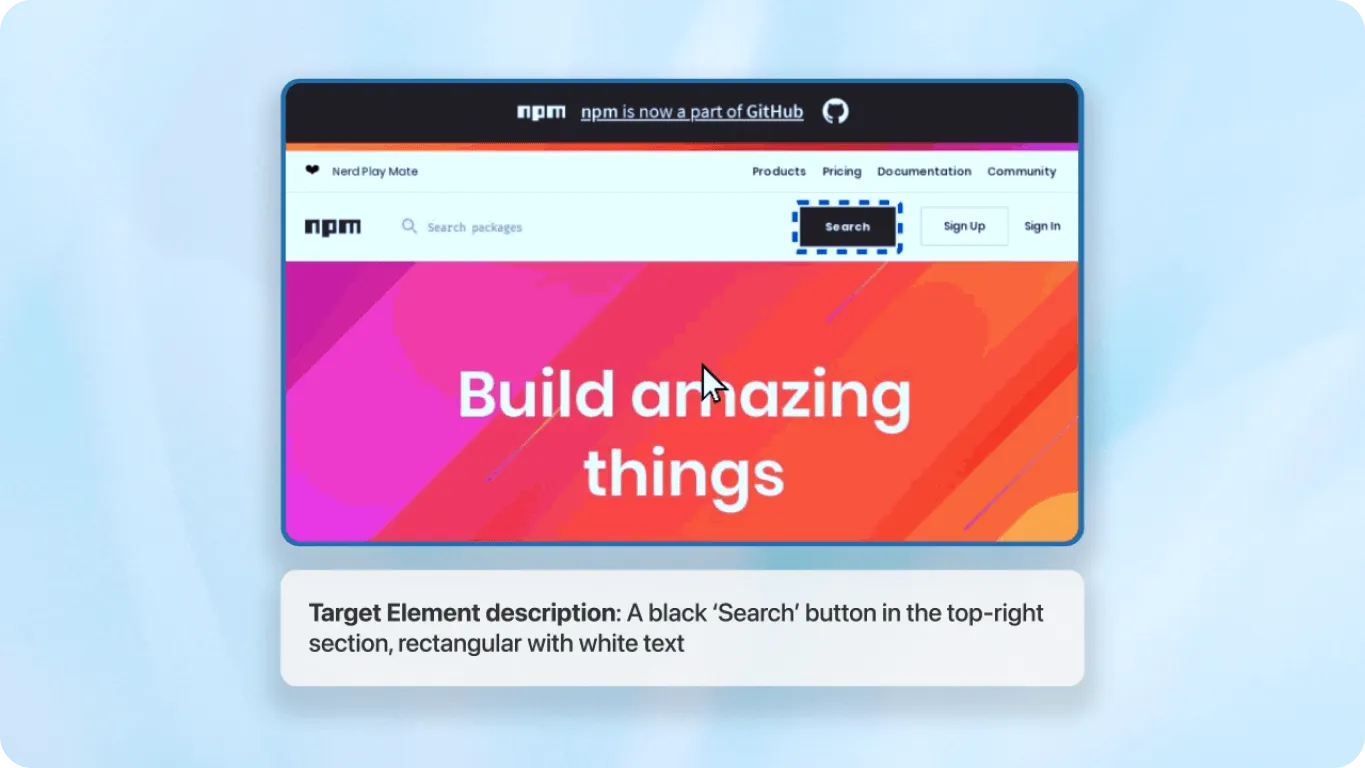

Originally, the GPT-4o vision language model could only be fine-tuned and customized with text-only datasets. With the release of the vision fine-tuning API, developers can now train and customize GPT-4o using image datasets. Since its release, vision fine-tuning has become an important topic for developers and computer vision engineers.

To fine-tune GPT-4o's vision capabilities, developers can use image datasets ranging from as few as 100 to as many as 50,000 images. Once the dataset has been checked against the format OpenAI requires, it can be uploaded to the OpenAI platform and the model can be fine-tuned for specific applications.
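As a rough illustration of what preparing such a dataset could look like, the sketch below builds training examples as JSON Lines, one chat-style example per line, with the image referenced by URL. The message layout reflects our understanding of OpenAI's chat fine-tuning format and should be verified against the current fine-tuning guide. The helper names, the example URL, and the size check (which simply reuses the 100 to 50,000 range mentioned above) are assumptions for illustration.

```python
import json

MIN_IMAGES = 100     # lower bound mentioned in the article
MAX_IMAGES = 50_000  # upper bound mentioned in the article


def make_vision_example(question: str, image_url: str, answer: str) -> dict:
    """Build one training example pairing an image and a question with the desired answer."""
    return {
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            },
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(examples: list) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(example) for example in examples)


def dataset_size_ok(num_images: int) -> bool:
    """Check the dataset size against the range quoted in the article."""
    return MIN_IMAGES <= num_images <= MAX_IMAGES


example = make_vision_example(
    "Which UI element is the Submit button?",
    "https://example.com/screenshot-001.png",  # placeholder URL
    "The blue button in the bottom-right corner.",
)
print(to_jsonl([example]))
```

The resulting text can be written to a `.jsonl` file and uploaded as training data. A real dataset would repeat this for every labeled image.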

For example, Automat, an automation company, used a dataset of screenshots to train GPT-4o to identify UI elements on a screen from a description. This helps streamline robotic process automation (RPA) by making it easier for bots to interact with user interfaces. Instead of relying on fixed coordinates or complex selector rules, the model can identify UI elements from simple descriptions, which makes automation setups more adaptable and easier to maintain when interfaces change.

Ethical concerns around AI applications have become an important topic of discussion as AI grows more advanced. Because ChatGPT's responses are based on user-provided prompts and on data available on the internet, it can be difficult to tune its language so that it is always responsible. Reportedly, ChatGPT's responses can be biased with respect to name, gender, and ethnicity. To address this problem, OpenAI's internal team carried out a first-person fairness study.

Names often carry subtle cues about our culture and geographic background. In most cases, ChatGPT ignores these cues. In some cases, however, names that reflect ethnicity or culture lead to different responses from ChatGPT, with about 1% of these cases reflecting harmful language. Eliminating bias and harmful language is a difficult task for a language model. By publishing these findings and acknowledging the model's limitations, OpenAI helps users refine their prompts to get more neutral, unbiased responses.

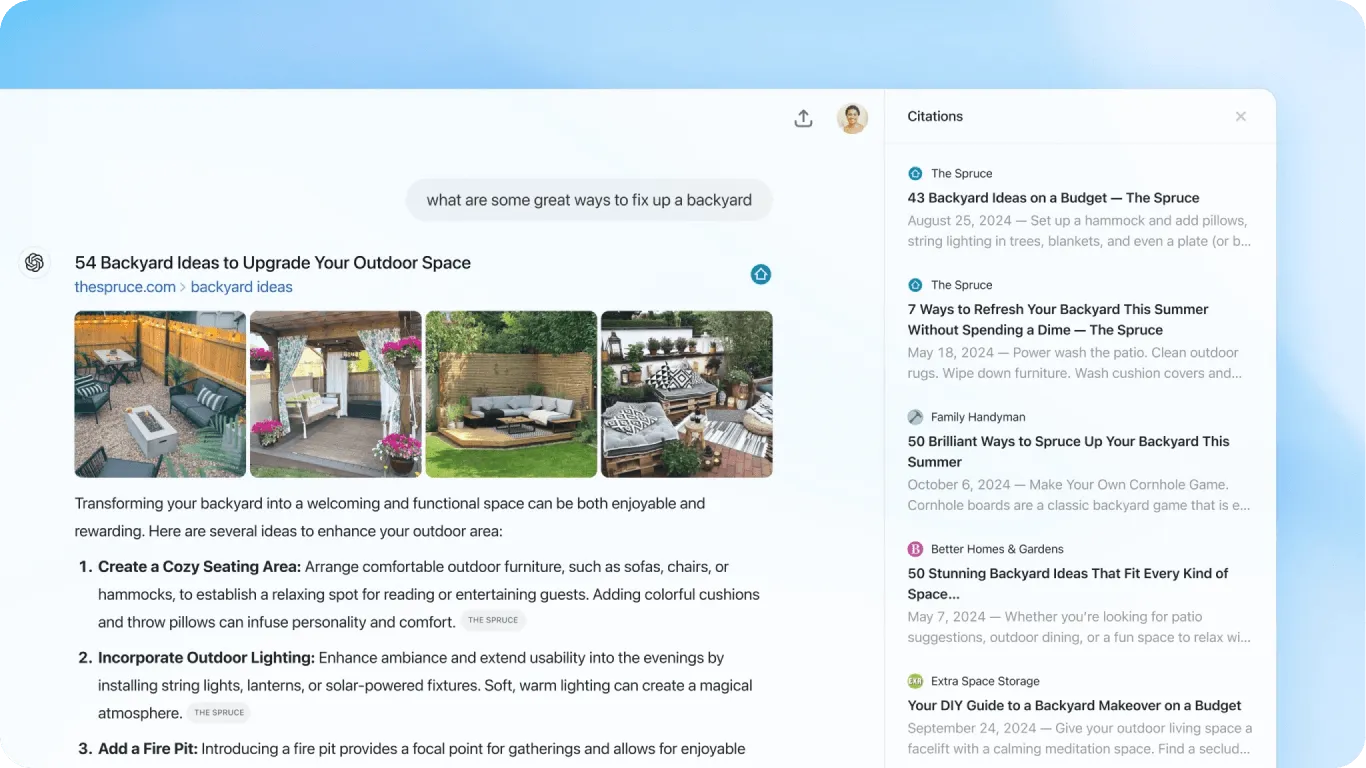

When ChatGPT first launched, there was discussion in the AI community about whether it could replace traditional web browsing. Many users now use ChatGPT instead of Google.

OpenAI's new update, the search feature, takes this a step further. With search, ChatGPT generates up-to-date answers and includes links to relevant sources. Since October 31, the search feature has been available to all ChatGPT Plus and Team users, making ChatGPT work more like an AI-powered search engine.

The latest ChatGPT updates focus on making AI more useful, flexible, and fair. The new Canvas feature helps users work more efficiently, while vision fine-tuning lets developers adapt models to handle visual tasks better. Fairness and reducing bias are also key priorities, so that AI works well for everyone, regardless of who they are. Whether you are a developer refining your models or you simply want to use the latest features, ChatGPT continues to evolve to meet a wide range of needs. With real-time capabilities, visual integration, and a focus on responsible use, these updates build a trustworthy and reliable AI experience for everyone.

Learn more about AI by visiting our GitHub repository and joining our community. Learn more about AI applications in self-driving systems and healthcare.