As generative AI advances, learning to identify AI-generated images is important. Discover tips, tools, and techniques to spot fakes efficiently and effectively.

Image generation models are becoming more advanced, and we are seeing an increase in life-like artificial intelligence (AI) images. The AI vs real photo debate is becoming more relevant as it gets harder to distinguish between the two. AI-generated images have fooled the internet several times. Viral images of Pope Francis in a puffer jacket and Katy Perry at the 2024 Met Gala, for example, were both fabricated with generative AI, yet at first glance many people believed they were real.

Sometimes, this mix-up can be amusing, but more often, it presents a serious ethical concern. Just like it's important to keep up with how generative AI works, it's also crucial to know how to tell if something is AI-generated. In this article, we’ll take a closer look at AI-generated images, understand the pros and cons of AI art, discuss legal issues, and explore key methods and tools to tell them apart from real images.

AI images are created by image generation models: neural networks trained on large datasets of images. During training, these models learn features and details from the images in the dataset, which lets them create new images that resemble the training data in style and content. What's impressive is their ability to mix styles, concepts, and features to produce artistic and relevant images.

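There are many types of image generation models, each with its own characteristics. For example, Generative Adversarial Networks (GANs) pair two neural networks, a generator and a discriminator, that are trained against each other so the generator learns to produce realistic images that resemble its training data. Diffusion models generate images by gradually turning random noise into clear images. Transformers, such as the one used in the original DALL-E, use self-attention mechanisms to generate images from text descriptions. Related models like CLIP, which also use transformers, learn to match images with text and are used to guide or rank generated images rather than to generate them directly.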
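For readers who want the underlying math, the two most common training setups can be written compactly. The first line is the standard GAN minimax objective, with generator $G$, discriminator $D$, real data $x$, and noise $z$. The diffusion equations follow the DDPM formulation, one of several variants, with a noise schedule $\beta_t$ and a noise-predicting network $\epsilon_\theta$. These are textbook forms, not the exact setup of any product mentioned in this article.

```latex
% GAN: the discriminator D tries to maximize this value, the generator G to minimize it
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]

% Diffusion (DDPM), forward process: a clean image x_0 is progressively noised
x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I),
\qquad \alpha_t = 1 - \beta_t,
\qquad \bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s

% Reverse process: start from pure noise x_T ~ N(0, I) and denoise for t = T, ..., 1
x_{t-1} = \frac{1}{\sqrt{\alpha_t}} \left( x_t - \frac{\beta_t}{\sqrt{1 - \bar{\alpha}_t}}\, \epsilon_\theta(x_t, t) \right) + \sigma_t \xi,
\qquad \xi \sim \mathcal{N}(0, I) \text{ for } t > 1, \quad \xi = 0 \text{ for } t = 1
```

The reverse step is what "gradually turning random noise into clear images" means in practice: each step removes a little of the noise the network predicts.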

Anyone can create AI images using tools like OpenAI’s GPT-4o, Midjourney, Gencraft, or Stable Diffusion. These images are now appearing all over the internet, often without any label indicating that they were made by AI.

Many people now consider AI image generation a new art form, like photography or painting. AI paintings are selling for thousands of dollars and winning art competitions. This raises the question: is AI art a good thing, and what are the pros and cons of such image generation?

Opinions on this vary. For example, small businesses on a budget might see generated art as a plus. They can create custom images that match their branding and marketing needs. These tools can save time by quickly producing high-quality visuals and help keep creative projects on track. For artists looking for inspiration, image generation offers a vast range of unique options, making it easy to visualize an idea before bringing it to life.

However, AI-generated images often lack emotional depth and can struggle to capture raw human experiences. The quality can also be inconsistent, with images appearing pixelated or unrealistic. Relying too much on AI can stifle creativity and critical thinking. There's also the risk of misuse: AI images can be easily manipulated and used to spread misinformation. In addition, these tools can involve a steep learning curve, and they may carry biases from their training data. Here are some other cons of AI art:

As AI advances, society is still working out its legal implications, such as copyright. Unlike traditional creations, purely AI-generated images cannot be copyrighted in some countries, such as the US, where copyright protection requires human authorship. The issue is further complicated because AI models are often trained on massive amounts of data scraped from the internet, which can include copyrighted material. As a result, many people are protesting the use of copyrighted content for AI model training and calling for better regulation.

Some companies have even filed lawsuits. Getty Images, a stock image provider, sued Stability AI, the company behind the Stable Diffusion image generator, for allegedly copying Getty’s image library and using it for commercial gain. Some images produced by Stability AI’s text-to-image model even bear a version of Getty’s watermark. DeviantArt and two AI companies are also facing a class-action lawsuit from a group of artists who claim that the companies’ AI-generated artwork infringes copyright law.

Learning how to spot AI images is vital because they are increasingly used in fake news to mislead people, especially during elections. According to the BBC, researchers succeeded in using AI to create misleading images about ballots and voting locations 60% of the time.

AI images also affect consumers. A recent study by Attest revealed that most consumers (76%) can't tell the difference between authentic and AI-generated images. Here’s how you can tell if an image is AI-generated.

It may seem obvious, but the easiest way to spot an AI image is to check its title, description, and tags for an ‘AI-generated’ label. Because so many questions about AI images remain open, many companies that generate or license them aim to be transparent about their origin. Stock photo agencies that accept AI images in their libraries require contributors to label the files as ‘AI-generated’ in the image title, description, and tags, which also makes it easier to search for or exclude AI images when browsing their catalogs.

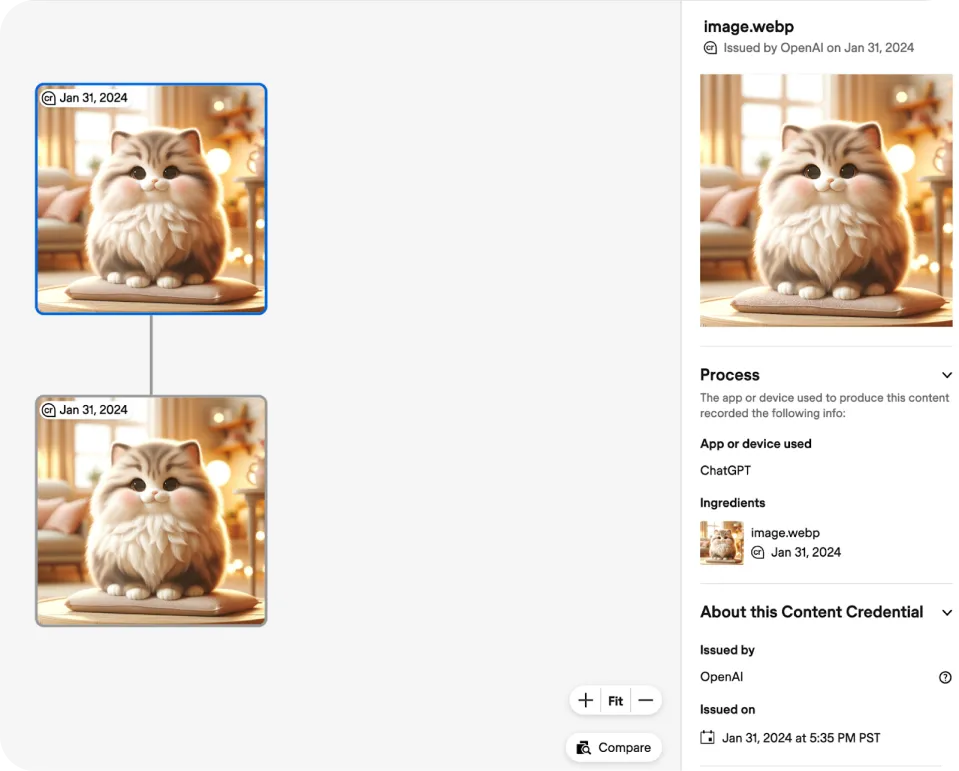
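The label check described above is easy to automate when you are filtering many catalog entries. Below is a minimal sketch, assuming each entry is a plain dictionary with hypothetical `title`, `description`, and `tags` fields; real stock agencies expose their metadata in their own formats, and the list of label phrases is also an assumption.

```python
# Hypothetical label phrases; agencies may word their labels differently.
AI_LABELS = ("ai-generated", "ai generated", "generated with ai")


def has_ai_label(record: dict) -> bool:
    """Return True if the title, description, or tags mention an AI label.

    `record` is a hypothetical dict with optional "title", "description",
    and "tags" keys; it does not mirror any specific agency's API.
    """
    fields = [record.get("title", ""), record.get("description", "")]
    fields.extend(record.get("tags", []))
    text = " ".join(fields).lower()  # case-insensitive matching
    return any(label in text for label in AI_LABELS)


# Usage: keep only entries that carry no AI label
catalog = [
    {"title": "Sunset over mountains", "tags": ["landscape", "AI-Generated"]},
    {"title": "Street market", "description": "Shot on film", "tags": ["travel"]},
]
unlabeled = [item for item in catalog if not has_ai_label(item)]
print([item["title"] for item in unlabeled])  # ['Street market']
```

Keep in mind that a missing label does not prove an image is real; this check only catches images whose creators disclosed their origin.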

Another way to identify AI images is to look for watermarks, since many AI tools add them. These can take the form of small logos, text, or embedded metadata. For instance, images from OpenAI’s DALL-E 3 include invisible C2PA metadata along with a Content Credentials (CR) symbol in the top-left corner. However, the symbol is only visible when you check the image on a content credentials verification site, such as Content Credentials Verify. Companies mark their images differently, so you may need to familiarize yourself with various indicators.
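To make the metadata idea more concrete, here is a rough Python heuristic, assuming the C2PA convention of embedding its manifest in JPEG APP11 marker segments as JUMBF boxes labeled `c2pa`. The function name `jpeg_has_c2pa_segment` is our own. It only detects that such a segment is present; it does not validate the signed manifest the way a verification site like Content Credentials Verify does, and it handles JPEG files only. The metadata can also be lost when an image is screenshotted or re-saved by software that drops it.

```python
import struct


def jpeg_has_c2pa_segment(data: bytes) -> bool:
    """Heuristic: does this JPEG contain an APP11 segment mentioning 'c2pa'?

    Scans the JPEG marker segments before the image data starts.
    Does NOT verify signatures or parse the manifest itself.
    """
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break  # lost marker sync; stop scanning
        marker = data[pos + 1]
        if marker == 0xFF:  # padding byte before a marker
            pos += 1
            continue
        if marker in (0xDA, 0xD9):  # SOS or EOI: no more metadata segments
            break
        if marker == 0x01 or 0xD0 <= marker <= 0xD7:  # markers without a length
            pos += 2
            continue
        # The 2-byte big-endian length includes the length field itself.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xEB and b"c2pa" in payload:  # APP11 carries JUMBF boxes
            return True
        pos += 2 + length
    return False


# Usage (path is illustrative):
# with open("photo.jpg", "rb") as f:
#     print(jpeg_has_c2pa_segment(f.read()))
```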

Google recently announced SynthID, a new way to watermark AI images. SynthID embeds a digital watermark directly into the pixels of AI-generated content. The watermark is invisible to the human eye but detectable by software, so SynthID can scan an image for it to assess whether the image was likely created by an AI tool that applies this watermark.
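SynthID's internals are not described here, so the snippet below swaps in a much simpler, classic technique, least-significant-bit (LSB) watermarking, purely to illustrate the general idea of a pixel-level mark that is invisible yet detectable. It is not how SynthID works: Google says SynthID is designed to survive common edits, whereas an LSB mark is destroyed by compression or resizing. Modeling the image as a flat list of 8-bit grayscale values and using a secret `seed` as the key are illustrative assumptions, and all function names are hypothetical.

```python
import random


def key_bits(n: int, seed: int) -> list[int]:
    """Pseudo-random 0/1 pattern derived from a secret seed (the 'key')."""
    rng = random.Random(seed)
    return [rng.randint(0, 1) for _ in range(n)]


def embed_watermark(pixels: list[int], seed: int) -> list[int]:
    """Overwrite each pixel's least significant bit with the key pattern.

    Each 8-bit value changes by at most 1, which the eye cannot notice.
    """
    bits = key_bits(len(pixels), seed)
    return [(p & ~1) | b for p, b in zip(pixels, bits)]


def match_rate(pixels: list[int], seed: int) -> float:
    """Fraction of pixels whose least significant bit agrees with the key."""
    bits = key_bits(len(pixels), seed)
    return sum((p & 1) == b for p, b in zip(pixels, bits)) / len(pixels)


def is_watermarked(pixels: list[int], seed: int, threshold: float = 0.9) -> bool:
    # Unmarked images agree with the key about 50% of the time by chance;
    # freshly marked images agree 100% of the time.
    return match_rate(pixels, seed) >= threshold


# Usage with a random 100x100 grayscale "image"
rng = random.Random(0)
original = [rng.randint(0, 255) for _ in range(100 * 100)]
marked = embed_watermark(original, seed=42)
print(max(abs(a - b) for a, b in zip(original, marked)))  # at most 1
print(is_watermarked(marked, seed=42), is_watermarked(original, seed=42))  # True False
```

Detection only works with the right key, which is one reason watermark checkers are usually tied to the specific tool that applied the mark.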

AI-generated images often have imperfections due to the limitations of deep learning algorithms. Common anomalies include:

These signs help identify AI-generated images. However, advancements in AI mean that future AI images might have fewer visible flaws.

Using AI image identification tools is another option for spotting AI images, although keep in mind that these tools are not always accurate. Let’s take a look at some of the most popular tools to detect AI images:

As AI-generated media continues to spread and advance, these tools are likely to become more effective as well.
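Because detection tools are not perfectly accurate, it helps to think about what a single flag actually means. The sketch below applies Bayes' rule to estimate how often a flagged image really is AI-generated. The sensitivity, specificity, and prevalence values are hypothetical numbers chosen for illustration, not measurements of any tool, and the function name is our own.

```python
def flagged_is_ai_probability(
    sensitivity: float, specificity: float, prevalence: float
) -> float:
    """P(image is AI-generated | detector flags it), via Bayes' rule.

    sensitivity: share of AI images the detector correctly flags
    specificity: share of real photos the detector correctly passes
    prevalence:  share of AI images among the images being checked
    """
    true_flags = sensitivity * prevalence  # AI images that get flagged
    false_flags = (1 - specificity) * (1 - prevalence)  # real photos flagged
    return true_flags / (true_flags + false_flags)


# Hypothetical detector that is right 90% of the time on both classes,
# checking a feed where only 10% of images are AI-generated:
print(round(flagged_is_ai_probability(0.9, 0.9, 0.1), 2))  # 0.5
# The same detector on a feed where half the images are AI-generated:
print(round(flagged_is_ai_probability(0.9, 0.9, 0.5), 2))  # 0.9
```

In the first hypothetical case, half of the flags are false alarms, which is why a detector's verdict works best as one signal alongside labels, watermarks, and a close look at the image itself.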

As generative AI models become more capable, it's getting harder to tell AI-generated images apart from real photos. This is exciting from a technological standpoint, but it also raises ethical concerns. AI offers a cost-effective and innovative way to create visuals, but there are legal and practical hurdles to consider. Thankfully, methods and tools are being developed to help us navigate this new dilemma. By staying informed, we can help keep visual content trustworthy.

Connect with our community to learn more about AI! Explore our GitHub repository to see how we are using AI to create innovative solutions in various industries like healthcare and agriculture. Unlock new opportunities with us!