Apple has announced new AI-enabled accessibility features, such as Eye Tracking, Music Haptics, and Vocal Shortcuts, that provide seamless user experiences.

On May 15, 2024, Apple announced an upcoming range of updates for iOS 18, with a strong focus on accessibility. Scheduled for release later this year, the updates include new features like Eye Tracking, Music Haptics, and Vocal Shortcuts.

These Apple accessibility features make it easier for people with mobility issues, those who are deaf or visually impaired, and those with speech difficulties to use Apple devices. Apple silicon, artificial intelligence (AI), and machine learning are the technological advancements behind such capabilities. Let’s explore Apple's new features and the impact they will have on everyday life.

As a society, we need to make sure that our technological advances are inclusive of everyone. Making technology accessible can change the lives of many people. For example, tech is a huge part of almost any job nowadays, and inaccessible interfaces can create career roadblocks for people with disabilities. In 2018, studies showed that the unemployment rate for people with disabilities was nearly double that of people without disabilities.

Legal systems are also addressing technology accessibility. Many countries have added laws mandating accessibility standards for websites and digital products. In the US, lawsuits related to website accessibility have been steadily increasing, with roughly 14,000 web accessibility lawsuits filed from 2017 through 2022, including over 3,000 in 2022 alone. Regardless of legal requirements, accessibility is essential for an inclusive society where everyone can participate fully. That’s where the following Apple accessibility features step in.

Apple has been working towards making technology accessible to everyone since 2009. That year, it introduced VoiceOver, a feature that allows blind and visually impaired users to navigate their iPhones using gestures and audible feedback. By reading out what’s on screen, VoiceOver makes it easier for users to interact with their devices.

Since then, Apple has continuously improved and expanded its accessibility features. They introduced Zoom for screen magnification, Invert Colors for better visual contrast, and Mono Audio for those with hearing impairments. These features have significantly improved how users with various disabilities interact with their devices.

Here’s a closer look at some of the key Apple accessibility features we have seen in the past:

A person with limited hand mobility can now easily browse the internet, send messages, or even control smart home devices by simply looking at their screen. These advancements make everyday life more manageable, allowing them to independently complete tasks that they previously found difficult.

Eye Tracking, an exciting upcoming Apple accessibility feature, makes this a reality. Designed for users with physical disabilities, Eye Tracking provides a hands-free navigation option on iPads and iPhones. By using AI and the front-facing camera to see where you're looking, it allows you to activate buttons, swipes, and other gestures effortlessly.

The front-facing camera sets up and calibrates Eye Tracking in seconds. You can use Dwell Control to interact with app elements, accessing functions like buttons and swipes with just your eyes. Users can trust that their privacy is protected because the device securely processes all the data using on-device machine learning. Eye Tracking works seamlessly across iPadOS and iOS apps without needing additional hardware or accessories. Apple Vision Pro’s visionOS also includes an eye-tracking feature for easy navigation.
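To make the idea of dwell-based selection more concrete, here is a minimal Python sketch. It is not Apple's implementation: the `Button` class, the `dwell_select` function, the gaze-sample format, and the one-second dwell threshold are all hypothetical, chosen only to illustrate how holding your gaze on an element for a set time could trigger it.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass
class Button:
    """Hypothetical on-screen element with a rectangular hit area."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def dwell_select(
    samples: Iterable[Tuple[float, float, float]],
    buttons: List[Button],
    dwell_seconds: float = 1.0,  # assumed threshold, not an Apple value
) -> Optional[str]:
    """Return the name of the first button the gaze rests on continuously
    for at least `dwell_seconds`, or None if no dwell completes.

    `samples` is a sequence of (timestamp_seconds, x, y) gaze estimates.
    """
    current: Optional[Button] = None
    dwell_start = 0.0
    for t, x, y in samples:
        target = next((b for b in buttons if b.contains(x, y)), None)
        if target is not current:
            # Gaze moved to a different element (or off all elements):
            # restart the dwell timer.
            current = target
            dwell_start = t
        elif current is not None and t - dwell_start >= dwell_seconds:
            return current.name
    return None


buttons = [Button("Send", 0, 0, 100, 50), Button("Cancel", 200, 0, 100, 50)]
gaze = [(0.0, 250, 10), (0.3, 250, 20), (0.5, 50, 10),
        (0.9, 50, 12), (1.2, 55, 15), (1.6, 52, 20)]
selected = dwell_select(gaze, buttons)
```

In this example, the brief glance at "Cancel" is ignored because it lasts less than the threshold, while the longer look at "Send" triggers a selection. A real system would also need to handle noisy gaze estimates, which this sketch does not attempt.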

Music Haptics is another of the soon-to-be-released Apple accessibility features. It has been designed for users who are deaf or hard of hearing to experience music on their iPhones. When in use, the Taptic Engine generates taps, textures, and refined vibrations that sync with the audio. You can feel the music's rhythm and nuances. It’s quite in-depth, thanks to the Taptic Engine's ability to produce various intensities, patterns, and durations of vibrations to capture the feeling of the music.
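As a rough illustration of how audio could be translated into vibration strength, the Python sketch below converts a list of audio samples into normalized haptic intensities. This is only an assumption about one possible approach: the `haptic_intensities` function, the windowed loudness (RMS) measure, and the window size are hypothetical and do not describe how the Taptic Engine or Music Haptics actually works.

```python
import math
from typing import List, Sequence


def haptic_intensities(samples: Sequence[float], window: int = 4) -> List[float]:
    """Map audio samples to per-window haptic intensities in the range 0 to 1.

    Each window's loudness is measured as its root mean square (RMS),
    then scaled so the loudest window maps to full intensity.
    """
    chunks = [samples[i:i + window] for i in range(0, len(samples), window)]
    rms = [math.sqrt(sum(s * s for s in chunk) / len(chunk)) for chunk in chunks]
    peak = max(rms, default=0.0)
    if peak == 0:
        # Silence (or no audio): no vibration at all.
        return [0.0 for _ in rms]
    return [value / peak for value in rms]


# A quiet passage, a medium passage, and a loud passage.
audio = [0, 0, 0, 0, 0.5, -0.5, 0.5, -0.5, 1, -1, 1, -1]
intensities = haptic_intensities(audio)
```

Here the silent window produces no vibration, the medium window produces half intensity, and the loudest window produces full intensity. Capturing texture and rhythm, as the feature is described as doing, would require far more than this loudness-only mapping.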

Music Haptics can connect someone who is hard of hearing to music in a way that was not possible before. This unlocks a world of experiences in their day-to-day lives. Going on a run while listening to music or enjoying a song with a friend becomes a shared and immersive experience. The vibrations can match the energy of a workout playlist, making exercise more motivating, or help calm down nerves with a favorite meditation tune. Music Haptics supports millions of songs in the Apple Music catalog and will be available as an API for developers, allowing them to create more accessible apps.

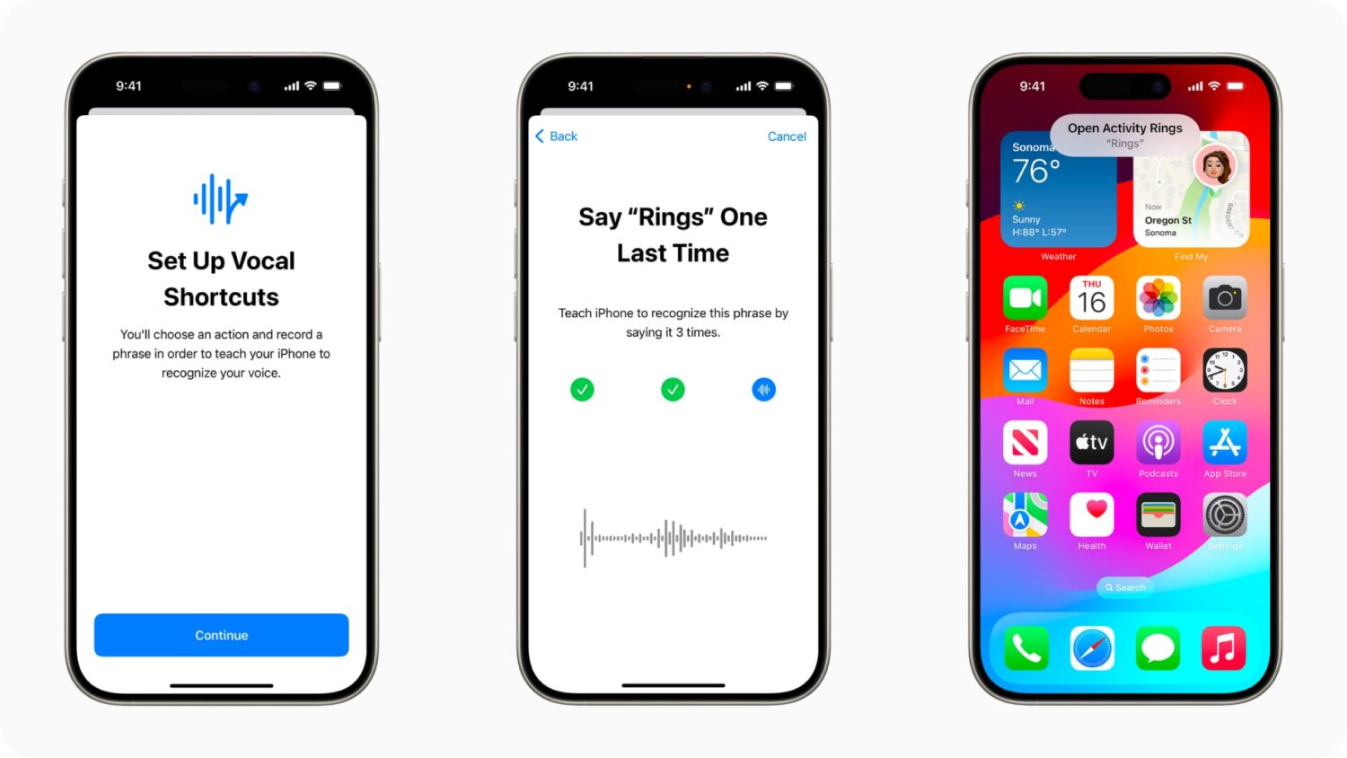

Vocal Shortcuts is another of the new accessibility features, designed by Apple to improve speech-based interaction using AI. It lets iPhone and iPad users create "custom utterances" so Siri can launch shortcuts and manage complex tasks. This is especially helpful for people with conditions like cerebral palsy, ALS, or stroke.

The "Listen for Atypical Speech" feature uses on-device machine learning to recognize and adapt to different speech patterns, making tasks like sending messages or setting reminders easier. By supporting custom vocabularies and complex words, Vocal Shortcuts provides a personalized and efficient user experience.

There are also a couple of new Apple accessibility features to help passengers use their devices more comfortably during travel. For instance, Vehicle Motion Cues is a new feature for iPhone and iPad that helps reduce motion sickness for passengers in moving vehicles.

Motion sickness often happens due to a sensory mismatch between what a person sees and what they feel, making it uncomfortable to use devices while in motion. Vehicle Motion Cues addresses this by displaying animated dots on the edges of the screen to represent changes in vehicle motion. It reduces sensory conflict without disrupting the main content. This feature uses built-in sensors in iPhones and iPads to detect when a user is in a moving vehicle and adjust accordingly.
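The following Python sketch shows one way sensor readings might be turned into on-screen dot movement. It is purely illustrative and not Apple's method: the `motion_cue_offsets` function, the smoothing factor, the gain, and the maximum offset are all hypothetical values picked for the example.

```python
from typing import Iterable, List, Tuple


def clamp(value: float, low: float, high: float) -> float:
    """Limit `value` to the range [low, high]."""
    return max(low, min(high, value))


def motion_cue_offsets(
    accel_samples: Iterable[Tuple[float, float]],
    gain: float = 10.0,        # assumed screen points per m/s^2
    max_offset: float = 30.0,  # assumed cap so dots stay near the edges
    smoothing: float = 0.5,    # assumed weight given to the newest reading
) -> List[Tuple[float, float]]:
    """Convert (sideways, forward) acceleration readings into dot offsets.

    Readings are smoothed with an exponential moving average so that
    sensor jitter does not make the dots twitch, then scaled and capped.
    """
    offsets = []
    smooth_x = smooth_y = 0.0
    for ax, ay in accel_samples:
        smooth_x = smoothing * ax + (1 - smoothing) * smooth_x
        smooth_y = smoothing * ay + (1 - smoothing) * smooth_y
        offsets.append((
            clamp(gain * smooth_x, -max_offset, max_offset),
            clamp(gain * smooth_y, -max_offset, max_offset),
        ))
    return offsets


# The vehicle starts a steady turn: sideways acceleration of 2 m/s^2.
turn = motion_cue_offsets([(2.0, 0.0), (2.0, 0.0)])
```

In this example, the dots ease toward their new position over successive readings rather than jumping, and a very sharp maneuver would be capped at the maximum offset so the cues never intrude on the main content.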

CarPlay has also received updates to enhance accessibility. Voice Control allows users to operate CarPlay and its apps using voice commands, while Sound Recognition alerts those who are deaf or hard of hearing to car horns and sirens. For colorblind users, Color Filters improve the visual usability of the CarPlay interface. These enhancements help make all passengers more comfortable during a car ride.

Apple Vision Pro already offers accessibility features like subtitles, closed captions, and transcriptions to help users follow audio and video. Now, Apple is expanding these features further. The new AI-driven Live Captions feature allows everyone to follow spoken dialog in live conversations and audio from apps by displaying text on the screen in real time. This is particularly beneficial for people who are deaf or hard of hearing.

For example, during a FaceTime call in visionOS, users can see the spoken dialog transcribed on the screen, making it easier to connect and collaborate using their Persona (a computer-generated virtual avatar).

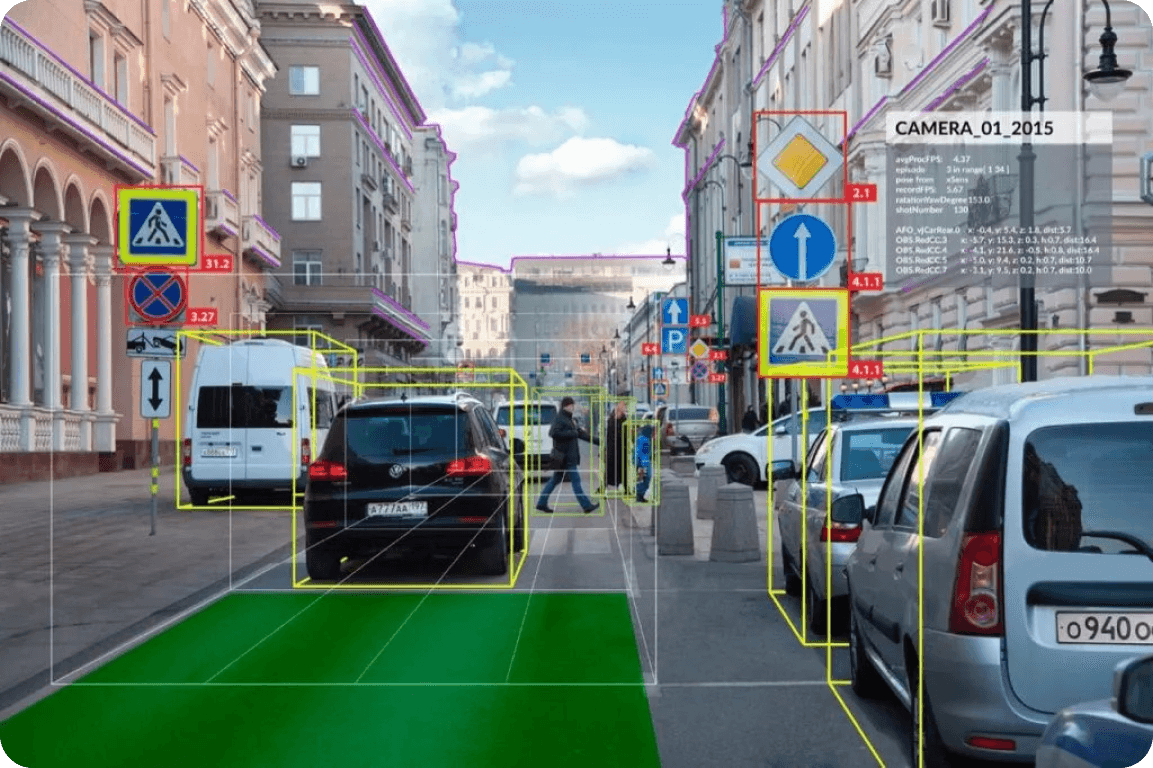

Similar to Apple's initiatives towards accessibility, many other companies are actively researching and innovating using AI to create accessible solutions. For example, AI-enabled autonomous vehicles can give people with mobility issues independence by making it easier to drive themselves. Until now, people with disabilities have been able to drive using specially modified vehicles with adaptations such as hand controls, steering aids, and wheelchair-accessible features. With the integration of AI technologies like computer vision, these vehicles can now provide even more support.

Tesla's Autopilot is a good example of AI-enhanced driving assistance. It uses advanced sensors and cameras to detect obstacles, interpret traffic signals, and provide real-time navigation assistance. With these AI inputs, the vehicle can handle various driving tasks, such as staying within lanes and adjusting speed according to traffic conditions. Computer vision insights make the driving experience safer and more accessible for everyone.

With these new Apple updates, we are ushering in a new era of AI-driven inclusion. By integrating AI into its accessibility features, Apple is making daily tasks easier for people with disabilities and promoting inclusivity and innovation in technology. These advancements will impact various markets: technology companies will set new inclusivity benchmarks, healthcare will benefit from increased independence for individuals with disabilities, and consumer electronics will see higher demand for accessible devices. It’s likely that the future of technology involves creating a seamless user experience for everyone.

Check out our GitHub repository and become part of our community to stay updated on cutting-edge AI developments. Discover how AI is reshaping self-driving tech, manufacturing, healthcare, and more.