Multi-modal models and multi-modal learning: Expanding AI’s capabilities

Explore how multi-modal models integrate text, images, audio, and sensor data to boost AI perception, reasoning, and decision-making.

Explore how multi-modal models integrate text, images, audio, and sensor data to boost AI perception, reasoning, and decision-making.

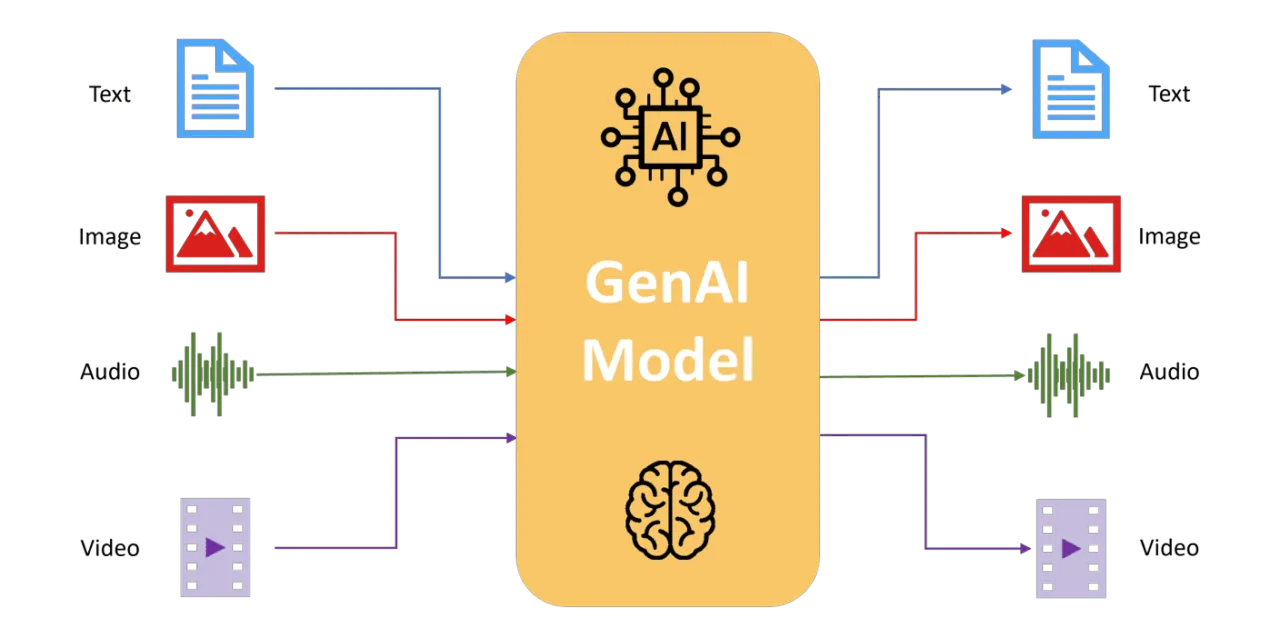

Traditional AI systems typically process information from a single data source like text, images, or audio. While these unimodal approaches excel at specialized tasks, they often fail to handle complex real-world scenarios involving multiple simultaneous inputs. Multi-modal learning addresses this by integrating diverse data streams within a unified framework, enabling richer and more context-aware understanding.

Inspired by human perception, multi-modal models analyze, interpret, and act based on combined inputs, much like humans who naturally integrate sight, sound, and language. These models allow AI to handle intricate scenarios with greater accuracy, robustness, and adaptability.

In this article, we'll explore how multi-modal models evolved, break down how they work, discuss their practical applications within computer vision, and evaluate the advantages and challenges associated with integrating multiple data types.

You might be wondering what exactly multi-modal learning is and why it matters for artificial intelligence (AI). Traditional AI models typically handle one type of data at a time, whether that's images, text, audio, or sensor inputs.

Multi-modal learning, however, goes one step further by enabling systems to analyze, interpret, and integrate multiple diverse data streams simultaneously. This approach closely mirrors how the human brain naturally integrates visual, auditory, and linguistic inputs to form a cohesive understanding of the world.

By combining these different modalities, multi-modal AI achieves a deeper and more nuanced comprehension of complex scenarios.

For example, when analyzing video footage, a multi-modal system doesn't just process visual content; it also considers spoken dialog, ambient sounds, and accompanying subtitles.

This integrated perspective allows AI to capture context and subtleties that would be missed if each data type were to be analyzed independently.

Practically speaking, multi-modal learning expands what AI can accomplish. It powers applications such as image captioning, answering questions based on visual context, generating realistic images from text descriptions, and improving interactive systems by making them more intuitive and contextually aware.

But how do multi-modal models combine these different data types to achieve these results? Let's break down the core mechanisms behind their success step by step.

Multi-modal AI models achieve their powerful capabilities through specialized processes: separate feature extraction for each modality (processing each type of data - like images, text, or audio - on its own), fusion methods (combining the extracted details), and advanced alignment techniques (ensuring that the combined information fits together coherently).

Let’s walk through how each of these processes works in more detail.

Multi-modal AI models use different, specialized architectures for each type of data. This means that visual, textual, and audio or sensor inputs are processed by systems designed specifically for them. Doing so makes it possible for the model to capture the unique details of each input before bringing them together.

Here are some examples of how different specialized architectures are used to extract features from various types of data:

Once processed individually, each modality generates high-level features optimized to capture the unique information contained within that specific data type.

After extracting features, multi-modal models merge them into a unified, coherent representation. To do this effectively, several fusion strategies are used:

Finally, multi-modal systems utilize advanced alignment and attention techniques to ensure that data from different modalities correspond effectively.

Methods such as contrastive learning help align visual and textual representations closely within a shared semantic space. By doing this, multi-modal models can establish strong, meaningful connections across diverse types of data, ensuring consistency between what the model "sees" and "reads."

Transformer-based attention mechanisms further enhance this alignment by enabling models to dynamically focus on the most relevant aspects of each input. For instance, attention layers allow the model to directly connect specific textual descriptions with their corresponding regions in visual data, greatly improving accuracy in complex tasks like visual question answering (VQA) and image captioning.

These techniques enhance multi-modal AI’s capability to understand context deeply, making it possible for AI to provide more nuanced and accurate interpretations of complex, real-world data.

Multi-modal AI has significantly evolved, transitioning from early rule-based techniques toward advanced deep-learning systems capable of sophisticated integration.

In the early days, multi-modal systems combined different data types, such as images, audio, or sensor inputs, using rules created manually by human experts or simple statistical methods. For example, early robotic navigation merged camera images with sonar data to detect and avoid obstacles. While effective, these systems required extensive manual feature engineering and were limited in their ability to adapt and generalize.

With the advent of deep learning, multi-modal models became much more popular. Neural networks like multi-modal autoencoders began learning joint representations of different data types, particularly image and text data, enabling AI to handle tasks such as cross-modal retrieval and finding images based solely on textual descriptions.

Advances continued as systems like Visual Question Answering (VQA) integrated CNNs for processing images and RNNs or transformers for interpreting text. This allowed AI models to accurately answer complex, context-dependent questions about visual content.

Most recently, large-scale multi-modal models trained on massive internet-scale datasets have further revolutionized AI capabilities.

These models leverage techniques like contrastive learning, enabling them to identify generalizable relationships between visual content and textual descriptions. By bridging the gaps between modalities, modern multi-modal architectures have enhanced AI’s ability to perform complex visual reasoning tasks with near-human precision, illustrating just how far multi-modal AI has progressed from its foundational stages.

Now that we've explored how multi-modal models integrate diverse data streams, let's dive into how these capabilities can be applied to computer vision models.

By combining visual input with text, audio, or sensor data, multi-modal learning enables AI systems to tackle increasingly sophisticated, context-rich applications.

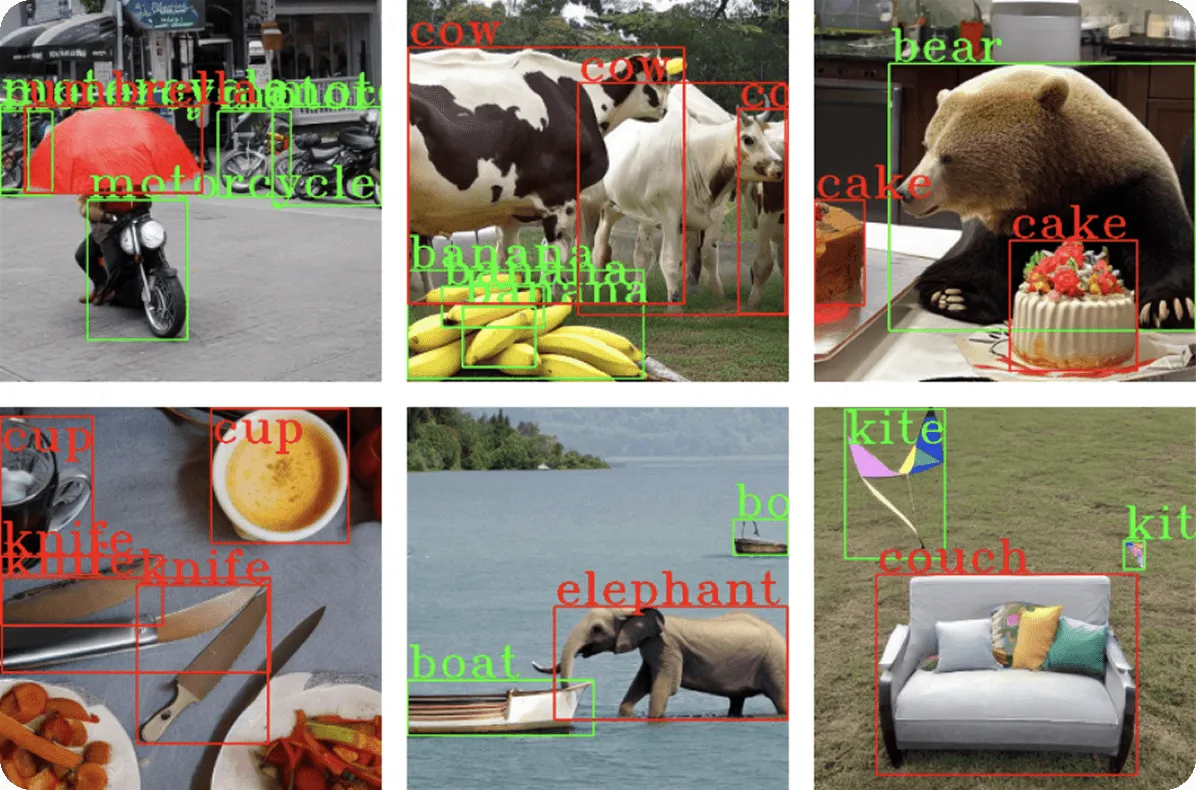

Image captioning involves generating natural language descriptions for visual data. Traditional object detection methods identify individual objects, but multi-modal captioning goes further, interpreting relationships and contexts.

For instance, a multi-modal model can analyze an image of people at a picnic and generate a descriptive caption such as “A family having a picnic in a sunny park,” providing a richer and more accessible output.

This application is important for accessibility. It can be used to generate alt-text for visually impaired individuals and content tagging for large databases. Transformer architectures play a key role here, enabling the text-generation module to focus on relevant visual areas through attention mechanisms, dynamically aligning textual descriptions with visual features.

VQA models answer natural-language questions based on visual content, combining computer vision with language understanding. These tasks require detailed comprehension of image content, context, and semantic reasoning.

Transformer architectures have enhanced VQA by enabling the model's text and visual components to dynamically interact, pinpointing exact image regions related to the question.

Google’s PaLI model, for instance, uses advanced transformer-based architectures that integrate visual transformers (ViT) with language encoders and decoders, allowing sophisticated questions such as “What is the woman in the picture doing?” or “How many animals are visible?” to be answered accurately.

Attention layers, which help models focus on the most relevant parts of an input, ensure each question word dynamically links to visual cues, enabling nuanced answers beyond basic object detection.

Text-to-image generation refers to AI’s ability to create visual content directly from textual descriptions, bridging the gap between semantic understanding and visual creation.

Multi-modal models that perform this task utilize advanced neural architectures, such as transformers or diffusion processes, to generate detailed and contextually accurate images.

For example, imagine generating synthetic training data for computer vision models tasked with vehicle detection. Given textual descriptions like "a red sedan parked on a busy street" or "a white SUV driving on a highway," these multi-modal models can produce diverse, high-quality images depicting these precise scenarios.

Such capability allows researchers and developers to efficiently expand object detection datasets without manually capturing thousands of images, significantly reducing the time and resources required for data collection.

More recent methods apply diffusion-based techniques, starting from random visual noise and progressively refining the image to align closely with textual input. This iterative process can create realistic and varied examples, ensuring robust training data covering multiple viewpoints, lighting conditions, vehicle types, and backgrounds.

This approach is particularly valuable in computer vision, enabling rapid dataset expansion, improving model accuracy, and enhancing the diversity of scenarios AI systems can reliably recognize.

Multi-modal retrieval systems make searching easier by converting both text and images into a common language of meaning. For example, models trained on huge datasets - like CLIP, which learned from millions of image-text pairs - can match text queries with the right images, resulting in more intuitive and accurate search results.

For example, a search query like “sunset on a beach” returns visually precise results, significantly improving content discovery efficiency across e-commerce platforms, media archives, and stock photography databases.

The multi-modal approach ensures retrieval accuracy even when queries and image descriptions use differing languages, thanks to learned semantic alignments between visual and textual domains.

Multi-modal learning provides several key advantages that enhance AI’s capabilities in computer vision and beyond:

Despite these strengths, multi-modal models also come with their own set of challenges:

Multi-modal learning is reshaping AI by enabling richer, more contextual understanding across multiple data streams. Applications in computer vision, like image captioning, visual question answering, text-to-image generation, and enhanced image retrieval, demonstrate the potential of integrating diverse modalities.

While computational and ethical challenges remain, ongoing innovations in architectures, such as transformer-based fusion and contrastive alignment, continue addressing these concerns, pushing multi-modal AI toward increasingly humanlike intelligence.

As this field evolves, multi-modal models will become essential for complex, real-world AI tasks, enhancing everything from healthcare diagnostics to autonomous robotics. Embracing multi-modal learning positions industries to harness powerful capabilities that will shape AI’s future.

Join our growing community! Explore our GitHub repository to learn more about AI. Ready to start your own computer vision projects? Check out our licensing options. Discover AI in manufacturing and Vision AI in self-driving by visiting our solutions pages!