Perceptrons and neural networks: Basic principles of computer vision

Understand how neural networks are transforming modern technology, from quality control in supply chains to autonomous utility inspections using drones.

Over the last few decades, neural networks have become the building blocks of many key artificial intelligence (AI) innovations. Neural networks are computational models loosely inspired by the way the human brain processes information. They help machines learn from data and recognize patterns to make informed decisions. In doing so, they power AI subfields like computer vision and deep learning across sectors such as healthcare, finance, and self-driving cars.

Understanding how a neural network works can give you a better idea of what goes on inside the "black box" of AI, helping to demystify how the cutting-edge technology integrated into our daily lives actually functions. In this article, we’ll explore what neural networks are, how they work, and how they have evolved over the years. We’ll also take a look at the role they play in computer vision applications. Let’s get started!

Before discussing neural networks in detail, let’s take a look at perceptrons. They’re the most basic type of neural network and are the foundation for building more complex models.

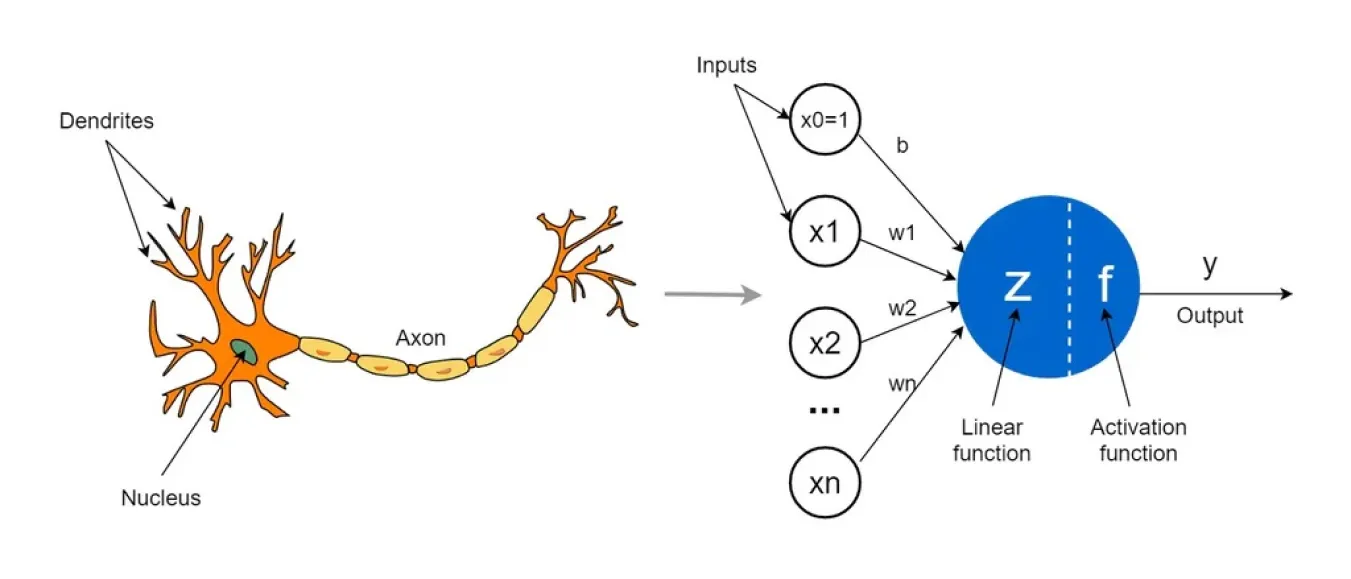

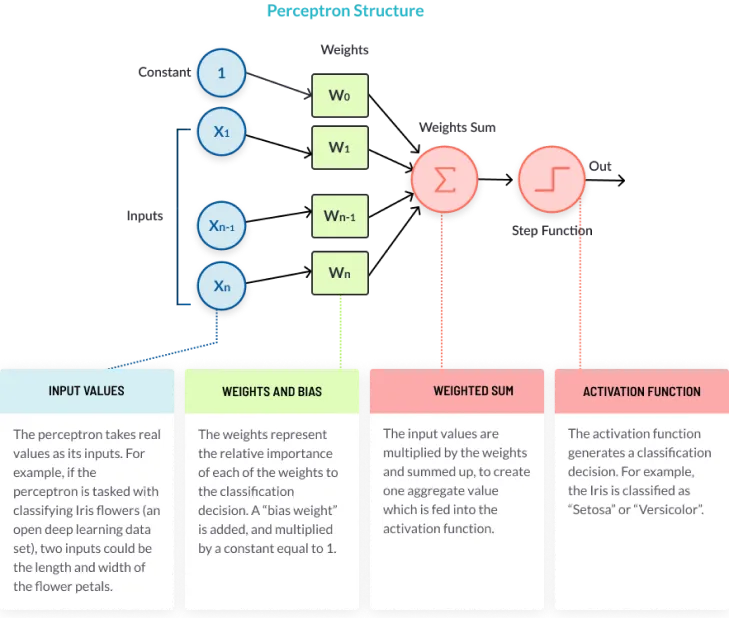

A perceptron is a linear machine learning algorithm used for supervised learning (learning from labeled training data). It is also known as a single-layer neural network and is typically used for binary classification tasks that differentiate between two classes of data. If you are trying to visualize a perceptron, you can think of it as a single artificial neuron.

A perceptron takes in several inputs, combines them with weights, and decides which of two categories they belong to, acting as a simple decision-maker. It has four main components: input values (also called input nodes), weights and a bias, the net sum, and an activation function.

Here’s how it works:

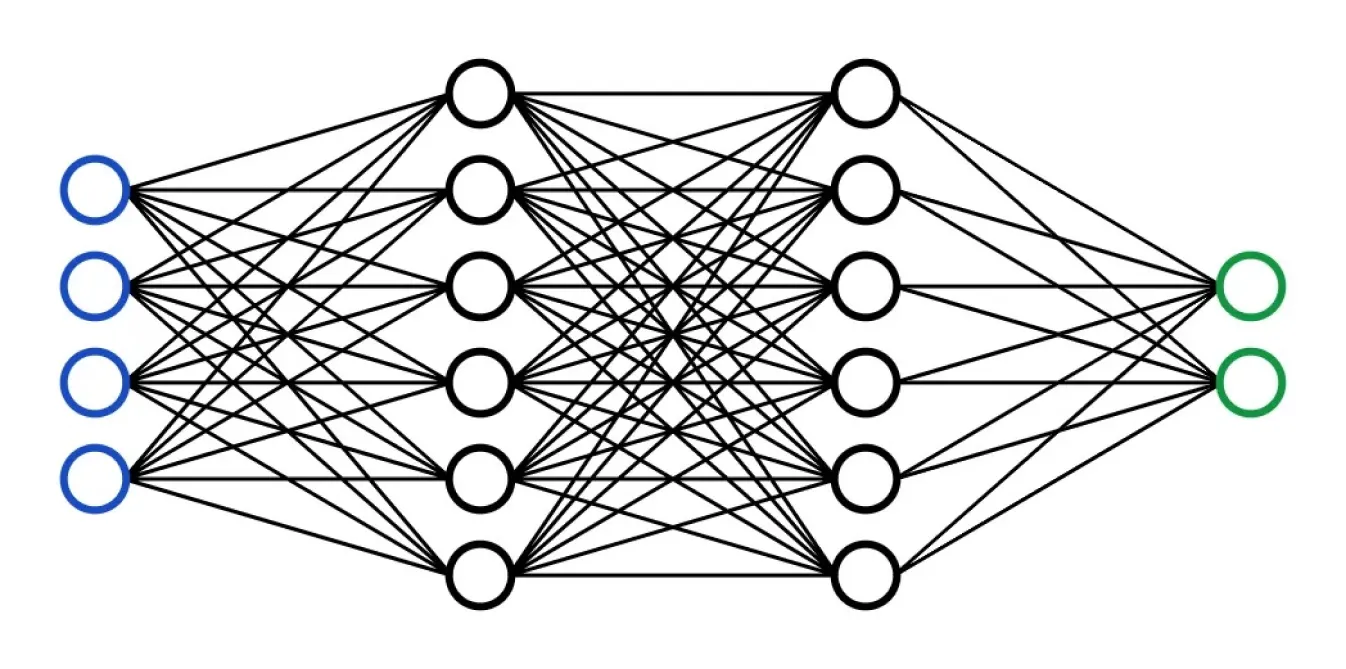
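To make these components concrete, here is a minimal sketch in Python, assuming NumPy is available. The class and parameter names (`Perceptron`, `lr`, `epochs`) are hypothetical and chosen for illustration. It computes the net sum (weighted inputs plus bias), applies a step activation, and uses the classic perceptron learning rule to fit the logical AND function, a small binary classification task whose two classes can be separated by a straight line.

```python
import numpy as np


def step(z):
    # Step activation: 1 if the net sum is non-negative, otherwise 0
    return np.where(z >= 0, 1, 0)


class Perceptron:
    def __init__(self, n_inputs, lr=1.0):
        self.w = np.zeros(n_inputs)  # one weight per input value
        self.b = 0.0                 # bias shifts the decision boundary
        self.lr = lr                 # learning rate (step size for updates)

    def predict(self, X):
        # Net sum = weighted inputs + bias, passed through the activation
        return step(X @ self.w + self.b)

    def fit(self, X, y, epochs=20):
        for _ in range(epochs):
            for xi, target in zip(X, y):
                error = target - self.predict(xi)  # -1, 0, or +1
                # Perceptron learning rule: only misclassified samples move the weights
                self.w += self.lr * error * xi
                self.b += self.lr * error
        return self


# Labeled training data for the logical AND of two binary inputs
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])

p = Perceptron(n_inputs=2).fit(X, y)
print(p.predict(X))  # [0 0 0 1]
```

Because the weights start at zero, the learning rate only rescales them and does not change which class is predicted; `lr=1.0` simply keeps the arithmetic in whole numbers.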

Perceptrons play an important role in helping us understand the basics of computer vision because they are the foundation of more advanced neural networks. Unlike a perceptron, a neural network is not limited to a single layer. It is made up of multiple layers of interconnected perceptron-like neurons, which, combined with nonlinear activation functions, lets it learn complex nonlinear patterns. Neural networks can handle more advanced tasks and produce both binary and continuous outputs. For example, they can be used for advanced computer vision tasks like instance segmentation and pose estimation.
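A classic illustration of why extra layers matter is XOR: its two classes cannot be separated by a single straight line, so a single perceptron cannot represent it, while two layers of perceptrons can. The sketch below, assuming NumPy, uses hand-picked weights (hypothetical values chosen for illustration, not learned from data) so that one hidden neuron behaves like OR, the other like NAND, and the output neuron combines them with AND.

```python
import numpy as np


def step(z):
    # Step activation applied element-wise
    return np.where(z >= 0, 1, 0)


def layer(x, W, b):
    # One layer of perceptrons: each row of W holds one neuron's weights
    return step(x @ W.T + b)


# Hidden layer: neuron 1 acts like OR, neuron 2 acts like NAND (hand-picked weights)
W_hidden = np.array([[1.0, 1.0], [-1.0, -1.0]])
b_hidden = np.array([-0.5, 1.5])

# Output layer: AND of the two hidden outputs
W_out = np.array([[1.0, 1.0]])
b_out = np.array([-1.5])


def xor_net(x):
    h = layer(x, W_hidden, b_hidden)  # hidden layer output feeds the next layer
    return layer(h, W_out, b_out)


X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
print(xor_net(X).ravel())  # [0 1 1 0]
```

In practice, networks learn such weights from data rather than having them set by hand, and they usually use smooth activations (such as ReLU or sigmoid) so that gradient-based training can be applied.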

The history of neural networks goes back several decades and is full of research and interesting discoveries. Let’s take a closer look at some of these key events.

Here’s a quick glimpse of the early milestones:

As we moved into the 21st century, research on neural networks took off, leading to even greater advances. In the 2000s, Geoffrey Hinton's work on restricted Boltzmann machines (RBMs), a type of neural network that learns patterns in data, played a key role in advancing deep learning. By making deep networks easier to train, it helped overcome challenges with complex models and made deep learning more practical and effective.

Then, in the 2010s, research rapidly accelerated due to the rise of big data and parallel computing. A highlight during this time was AlexNet's win in the ImageNet competition (2012). AlexNet, a deep convolutional neural network, was a major breakthrough because it showed how powerful deep learning could be for computer vision tasks, like accurately recognizing images. It helped spark the rapid growth of AI in visual recognition.

Today, neural networks continue to evolve with new innovations like transformers, which are well suited to modeling sequences, and graph neural networks, which work well with complex relationships in data. Techniques like transfer learning (reusing a model trained on one task for another) and self-supervised learning (where models learn from unlabeled data by generating their own training signals) are also expanding what neural networks can do.

Now that we’ve covered our bases, let’s look at what exactly a neural network is. Neural networks are a type of machine learning model made of interconnected nodes, or neurons, arranged in a layered structure loosely inspired by the human brain. These neurons process and learn from data, making it possible for the network to perform tasks like pattern recognition. Neural networks are also adaptive: during training, they adjust their weights based on their errors, so they improve over time. This gives them the ability to tackle complex problems, such as facial recognition, more accurately.
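"Learning from mistakes" usually means gradient-based training: the network measures its error with a loss function and nudges each weight in the direction that reduces that error. Here is a minimal, hypothetical sketch assuming NumPy, a single sigmoid neuron, a binary cross-entropy loss, and plain gradient descent on a four-example OR dataset. The learning rate and epoch count are illustrative choices; real networks apply the same idea to many more weights using backpropagation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Toy labeled data: the logical OR of two binary inputs
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 1], dtype=float)

w = np.zeros(2)  # weights start at zero
b = 0.0          # bias
lr = 0.5         # learning rate (hypothetical choice)
losses = []

for epoch in range(1000):
    p = sigmoid(X @ w + b)  # current predicted probabilities
    # Binary cross-entropy: a single number measuring how wrong the predictions are
    loss = -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))
    losses.append(loss)
    # Gradient of the mean cross-entropy w.r.t. the pre-activation z is (p - y) / n,
    # so the size of each update is driven directly by the prediction error
    grad_z = (p - y) / len(y)
    w -= lr * (X.T @ grad_z)  # move weights against the gradient
    b -= lr * grad_z.sum()

print(f"loss at start: {losses[0]:.3f}, after training: {losses[-1]:.3f}")
```

The starting loss is ln 2 (about 0.693), because every prediction begins at 0.5; the loss then shrinks as the weights are repeatedly corrected.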

Neural networks are made up of many simple processing units (neurons) that can operate in parallel, organized into layers. They consist of an input layer, an output layer, and one or more hidden layers in between. The input layer receives raw data, similar to how our optic nerves take in visual information.

Each layer then passes its output to the next, rather than working directly with the original input, much like how neurons in the brain send signals from one to another. The final layer produces the network’s output. Using this process, an artificial neural network (ANN) can learn to perform computer vision tasks like image classification.
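The layer-by-layer flow described above can be sketched as a forward pass through a small fully connected network. This is an illustrative example assuming NumPy; the image size (8×8 grayscale), the layer sizes, and the three output classes are hypothetical, and the weights are random and untrained, so the output probabilities are meaningless until the network is trained. The point is the structure: the input layer flattens the pixels, each hidden layer transforms only the previous layer's output, and the final layer turns scores into class probabilities.

```python
import numpy as np

rng = np.random.default_rng(0)


def relu(z):
    # Nonlinear activation used in the hidden layers
    return np.maximum(0.0, z)


def softmax(z):
    # Converts raw output scores into probabilities that sum to 1
    e = np.exp(z - z.max())
    return e / e.sum()


# Hypothetical sizes: 8x8 grayscale image -> two hidden layers of 16 -> 3 classes
image = rng.random((8, 8))
layer_sizes = [64, 16, 16, 3]
params = [
    (rng.normal(0.0, 0.1, (n_out, n_in)), np.zeros(n_out))  # (weights, biases)
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
]


def forward(image, params):
    a = image.reshape(-1)  # input layer: flatten pixels into a vector
    for i, (W, b) in enumerate(params):
        z = W @ a + b  # each layer sees only the previous layer's output
        a = softmax(z) if i == len(params) - 1 else relu(z)
    return a


probs = forward(image, params)
print(probs.shape, probs.sum())
```

Real image models such as convolutional networks replace these fully connected layers with operations that exploit the spatial structure of images, but the idea of passing activations from one layer to the next is the same.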

Having understood what neural networks are and how they work, let’s take a look at an application that showcases the potential of neural networks in computer vision.

Neural networks form the basis of computer vision models like Ultralytics YOLO11 and can be used to visually inspect power lines using drones. The utilities industry faces logistical challenges when it comes to inspecting and maintaining its extensive networks of power lines. These lines often stretch across everything from busy urban areas to remote, rugged landscapes. Traditionally, these inspections have been carried out by ground crews. While effective, these manual methods are costly, time-consuming, and can expose workers to environmental and electrical hazards. Research shows that utility line work is among the ten most hazardous jobs in America, with an annual fatality rate of 30 to 50 workers per 100,000.

Drone inspection technology can make aerial inspections a more practical and cost-effective option. Advances in drone technology allow them to fly longer distances without frequent battery changes during inspections. Many drones now come integrated with AI, with automated obstacle avoidance and better fault detection capabilities. These features enable them to inspect crowded areas with many power lines and capture high-quality images from greater distances. Many countries are adopting drones and computer vision for power line inspection. For example, in Estonia, all power line inspections are carried out by such drones.

Neural networks have come a long way from research to applications and have become an important part of modern technological advancements. They allow machines to learn, recognize patterns, and make informed decisions using what they have learned. From healthcare and finance to autonomous vehicles and manufacturing, these networks are driving innovation and transforming industries. As we continue to explore and refine neural network models, their potential to redefine even more aspects of our daily lives and business operations becomes increasingly clear.

To explore more, visit our GitHub repository and engage with our community. Explore AI applications in manufacturing and agriculture on our solutions pages. 🚀